Depth sensors such as LiDAR and Kinect use a fixed depth acquisition strategy that is independent of the scene of interest. Because these sensors have low spatial and temporal resolution, this strategy can undersample important parts of the scene (small or fast-moving objects) or oversample areas that are not informative for the task at hand (a fixed planar wall).

We've developed an approach and system to dynamically and adaptively sample the depths of a scene. The approach directly detects the presence or absence of objects along specified 3D lines. These 3D lines can be sampled sparsely, non-uniformly, or densely only in specified regions. The depth sampling can be varied in real time, enabling quick object discovery or detailed exploration of areas of interest. The controllable nature of light curtains presents a challenge: the user must specify where in the scene the light curtains should be placed.

We have designed novel algorithms that combine machine learning, computer vision, planning, and dynamic programming to program light curtains for accurate depth estimation, semantic object detection, and obstacle detection and avoidance. Please see our publications for more details.
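To give a feel for how dynamic programming can be used to place a curtain, here is a minimal sketch. It is not the algorithm from our publications: the score grid `scores`, the depth bins, and the `max_step` limit (a stand-in for how far the curtain may move between adjacent camera rays) are all hypothetical simplifications chosen for illustration.

```python
def plan_curtain(scores, max_step):
    """Pick one depth bin per camera ray to maximize the total score.

    scores[r][d] is a hypothetical benefit of placing the curtain at
    depth bin d on ray r. Adjacent rays may differ by at most max_step
    bins (an assumed proxy for a hardware motion limit).
    Returns (best_total, path) with path[r] the chosen bin for ray r.
    """
    n_bins = len(scores[0])
    best = list(scores[0])      # best total score ending at each bin
    back = []                   # back-pointers, one list per later ray
    for row in scores[1:]:
        new_best, pointers = [], []
        for d in range(n_bins):
            lo = max(0, d - max_step)
            hi = min(n_bins - 1, d + max_step)
            # Best reachable predecessor bin on the previous ray.
            prev = max(range(lo, hi + 1), key=lambda p: best[p])
            new_best.append(best[prev] + row[d])
            pointers.append(prev)
        best = new_best
        back.append(pointers)
    # Trace the optimal path backwards from the best final bin.
    d = max(range(n_bins), key=lambda p: best[p])
    total = best[d]
    path = [d]
    for pointers in reversed(back):
        d = pointers[d]
        path.append(d)
    path.reverse()
    return total, path


example_scores = [[1, 0.5, 0], [0, 0, 5], [0, 0, 1]]
constrained = plan_curtain(example_scores, max_step=1)
unconstrained = plan_curtain(example_scores, max_step=2)
```

With `max_step=1` the plan cannot jump from bin 0 to bin 2 between the first two rays, so it gives up some score on the first ray to reach the high-scoring bin on the second.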

A light curtain consists of an illumination plane and an imaging plane. In a traditional safety light curtain, such as those used in elevators, these are precisely aligned facing each other to detect anything that breaks the light plane between them. These traditional light curtains are very reliable, but only detect objects in a plane, and are difficult to reconfigure.

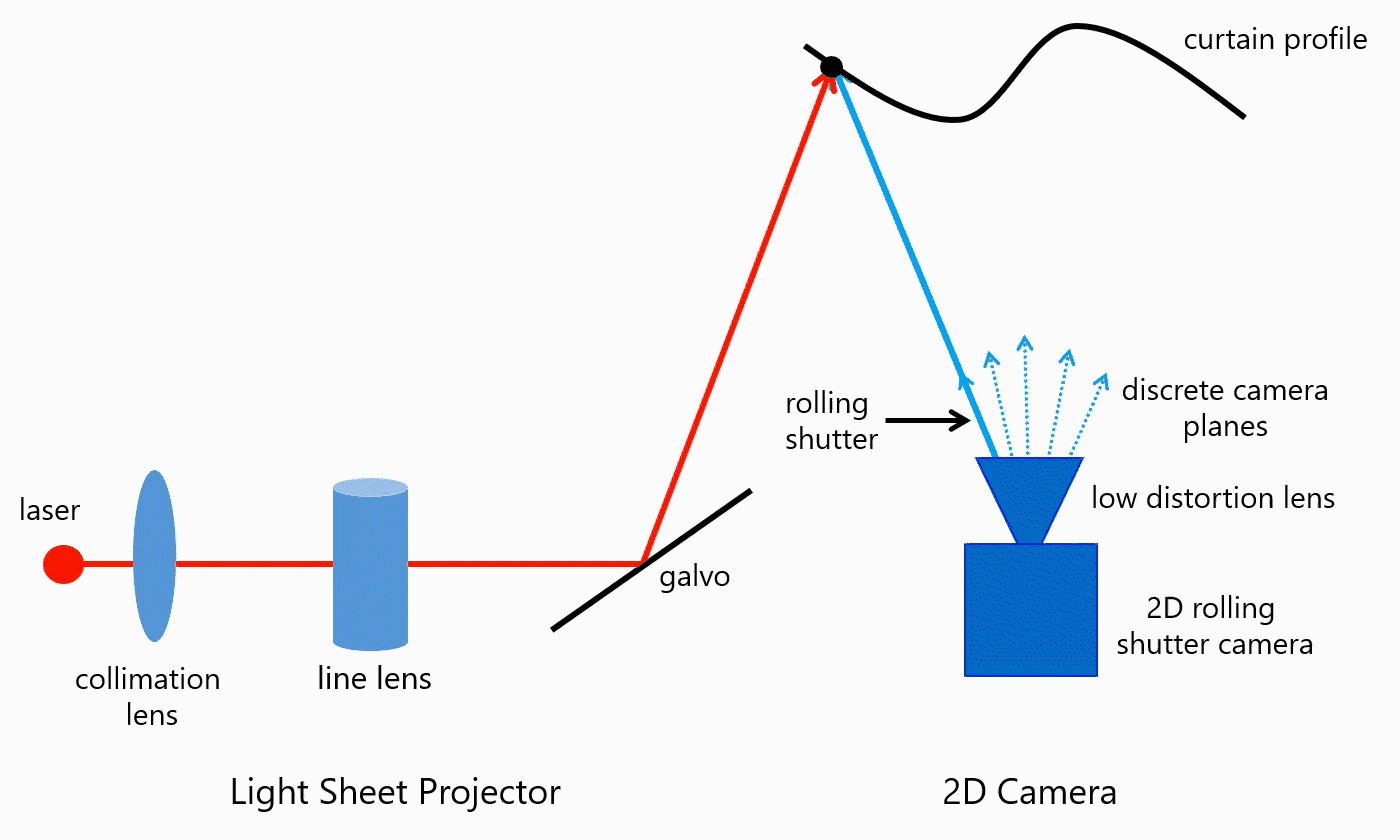

A programmable light curtain device places the illumination and imaging planes side by side so that they intersect in a line. If there is nothing along this line, the camera sees nothing. If there is an object along this line, the light is reflected towards the camera and the object is detected. By changing the angle between the imaging plane and the illumination plane, this line is swept through a volume to create a light curtain. The sequence of plane angles is determined by triangulation from a specified light curtain profile and can be changed in real time to generate many light curtains per second.
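The triangulation step can be illustrated with a minimal top-down sketch. It assumes a simplified geometry that is not taken from the prototype: the camera sits at the origin looking along +z, the light sheet projector is offset by a hypothetical `baseline_m` along +x, and all angles are measured from the +z axis. The function name and parameters are illustrative only.

```python
import math


def laser_angles_for_curtain(camera_angles_rad, curtain_depths_m, baseline_m):
    """Return the laser plane angle needed for each camera ray.

    For camera ray i at angle camera_angles_rad[i], the curtain should
    sit at depth curtain_depths_m[i]. The laser plane must pass through
    that same point, which fixes its angle by triangulation.
    """
    if len(camera_angles_rad) != len(curtain_depths_m):
        raise ValueError("need one depth per camera ray")
    laser_angles = []
    for theta_c, z in zip(camera_angles_rad, curtain_depths_m):
        if z <= 0:
            raise ValueError("curtain depth must be positive")
        x = z * math.tan(theta_c)  # lateral position of the curtain point
        # Angle of the line from the projector (at x = baseline_m) to the point.
        laser_angles.append(math.atan2(x - baseline_m, z))
    return laser_angles


# Two rays with the curtain 1 m away and an assumed 0.2 m baseline.
angles = laser_angles_for_curtain([0.0, math.atan(0.2)], [1.0, 1.0], 0.2)
```

Changing `curtain_depths_m` from frame to frame is what reshapes the curtain in this simplified model; the camera rays stay fixed and only the laser angles are recomputed.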

Since the illumination and imaging are synchronized and focused at a single line, the exposure can be very short (~100 µs). This short exposure integrates very little ambient light while still collecting all the light from the illumination system.
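A quick back-of-the-envelope calculation shows why the short exposure matters. It assumes that the ambient light collected scales linearly with exposure time and, purely for comparison, a hypothetical conventional camera that exposes for a full 1/60 s frame; neither assumption is a measured property of the prototype.

```python
def ambient_reduction_factor(line_exposure_s, reference_exposure_s):
    """Ratio of ambient light integrated by the reference exposure
    to that integrated by the short per-line exposure, assuming the
    ambient light level is constant over time."""
    if line_exposure_s <= 0 or reference_exposure_s <= 0:
        raise ValueError("exposures must be positive")
    return reference_exposure_s / line_exposure_s


# 100 microsecond line exposure versus an assumed 1/60 s full-frame exposure.
factor = ambient_reduction_factor(100e-6, 1.0 / 60.0)
```

Under these assumptions the per-line exposure integrates roughly 170 times less ambient light than the full-frame exposure, while the laser line is only present during that short window and so is still fully captured.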

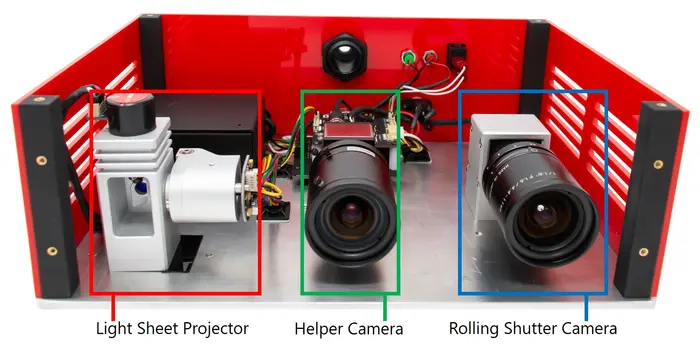

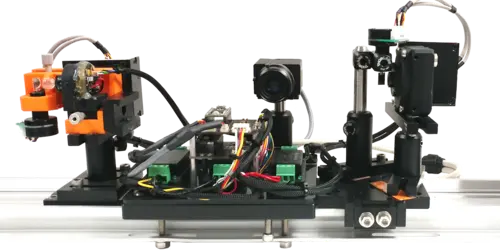

The illumination system uses a custom-built light sheet projector composed of a laser, a collimation lens, a lens that fans the laser into a line, and a galvo mirror that steers the laser line. The imaging side contains a rolling-shutter 2D camera. The light sheet projector emits a plane of light, and the rolling-shutter camera captures a plane of light. The motion of the galvo-steered light sheet is synchronized with the progression of the camera's rolling shutter so that the imaging plane and the illumination plane intersect along the curtain profile. This scanning happens at the full frame rate of the camera, producing 60 light curtains per second.
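The synchronization can be pictured as a timed list of galvo targets, one per rolling-shutter line. The sketch below assumes that the lines are exposed at evenly spaced times across one frame period (ignoring blanking intervals) and that the per-line laser angles are already known; the function names are hypothetical and do not describe the prototype's control software.

```python
def galvo_schedule(laser_angles_rad, frame_rate_hz=60.0):
    """Pair each rolling-shutter line with the time at which it is
    exposed and the laser angle the galvo should hold at that time."""
    n_lines = len(laser_angles_rad)
    if n_lines == 0:
        return []
    line_time_s = 1.0 / (frame_rate_hz * n_lines)  # assumed uniform spacing
    return [(i * line_time_s, angle) for i, angle in enumerate(laser_angles_rad)]


def max_galvo_speed(schedule):
    """Largest angular speed (rad/s) needed between consecutive lines."""
    return max(
        (abs(a1 - a0) / (t1 - t0)
         for (t0, a0), (t1, a1) in zip(schedule, schedule[1:])),
        default=0.0,
    )


schedule = galvo_schedule([0.0, 0.01, 0.03], frame_rate_hz=60.0)
peak_speed = max_galvo_speed(schedule)
```

A curtain profile whose required peak speed exceeds what the galvo can deliver cannot be traced within one frame, which is one reason the choice of curtain shape is constrained.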


Our light curtain prototype consists of:

Performance Specs:


RSS 2023

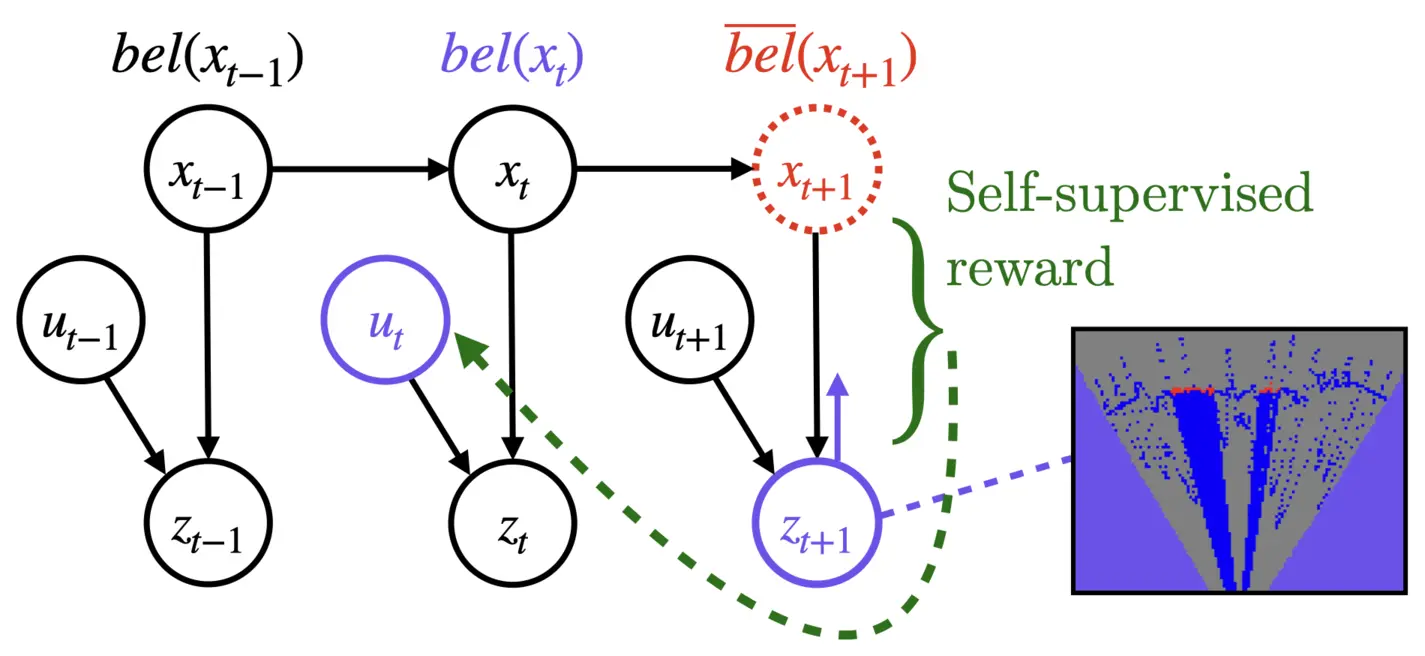

Active Velocity Estimation using Light Curtains via Self-Supervised Multi-Armed Bandits


@inproceedings{ancha2023rss,

title = {Active Velocity Estimation using Light Curtains via Self-Supervised Multi-Armed Bandits},

author = {Siddharth Ancha AND Gaurav Pathak AND Ji Zhang AND Srinivasa Narasimhan AND David Held},

booktitle = {Proceedings of Robotics: Science and Systems},

year = {2023},

address = {Daegu, Republic of Korea},

month = {July},

}


CVPR 2022

Holocurtains: Programming Light Curtains via Binary Holography


@article{chan2022holocurtains,

title = {Holocurtains: Programming Light Curtains via Binary Holography},

author = {Chan, Dorian and Narasimhan, Srinivasa and O'Toole, Matthew},

journal = {Computer Vision and Pattern Recognition},

year = {2022}

}


RSS 2021

Active Safety Envelopes using Light Curtains with Probabilistic Guarantees


@inproceedings{Ancha-RSS-21,

author = {Siddharth Ancha AND Gaurav Pathak AND Srinivasa Narasimhan AND David Held},

title = {Active Safety Envelopes using Light Curtains with Probabilistic Guarantees},

booktitle = {Proceedings of Robotics: Science and Systems},

year = {2021},

address = {Virtual},

month = {July},

doi = {10.15607/rss.2021.xvii.045}

}


CVPR 2021

Exploiting & Refining Depth Distributions with Triangulation Light Curtains


@inproceedings{cvpr2021raajexploiting,

author = {Yaadhav Raaj AND Siddharth Ancha AND Robert Tamburo AND David Held AND Srinivasa Narasimhan},

title = {Exploiting and Refining Depth Distributions with Triangulation Light Curtains},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2021}

}


ECCV 2020

Spotlight

Active Perception using Light Curtains for Autonomous Driving


@inproceedings{ancha2020eccv,

author = {Ancha, Siddharth AND Raaj, Yaadhav AND Hu, Peiyun AND Narasimhan, Srinivasa G. AND Held, David},

editor = {Vedaldi, Andrea AND Bischof, Horst AND Brox, Thomas AND Frahm, Jan-Michael},

title = {Active Perception Using Light Curtains for Autonomous Driving},

booktitle = {Computer Vision -- ECCV 2020},

year = {2020},

publisher = {Springer International Publishing},

address = {Cham},

pages = {751--766},

isbn = {978-3-030-58558-7}

}


ICCV 2019

Oral

Agile Depth Sensing Using Triangulation Light Curtains


@inproceedings{bartels2019agile,

title = {Agile depth sensing using triangulation light curtains},

author = {Bartels, Joseph R and Wang, Jian and Whittaker, William and Narasimhan, Srinivasa G and others},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages = {7900--7908},

year = {2019}

}


ECCV 2018

Oral

Programmable Triangulation Light Curtains


@inproceedings{wang2018programmable,

title = {Programmable triangulation light curtains},

author = {Wang, Jian AND Bartels, Joseph AND Whittaker, William AND Sankaranarayanan, Aswin C AND Narasimhan, Srinivasa G},

booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},

pages = {19--34},

year = {2018}

}
