|

|

|

|

|

|

Alexei A. Efros |

|

|

|

|

|

|

|

|

|

|

Inpainting Code [GitHub] |

Features Caffemodel [Prototxt] [Model 17MB] |

|

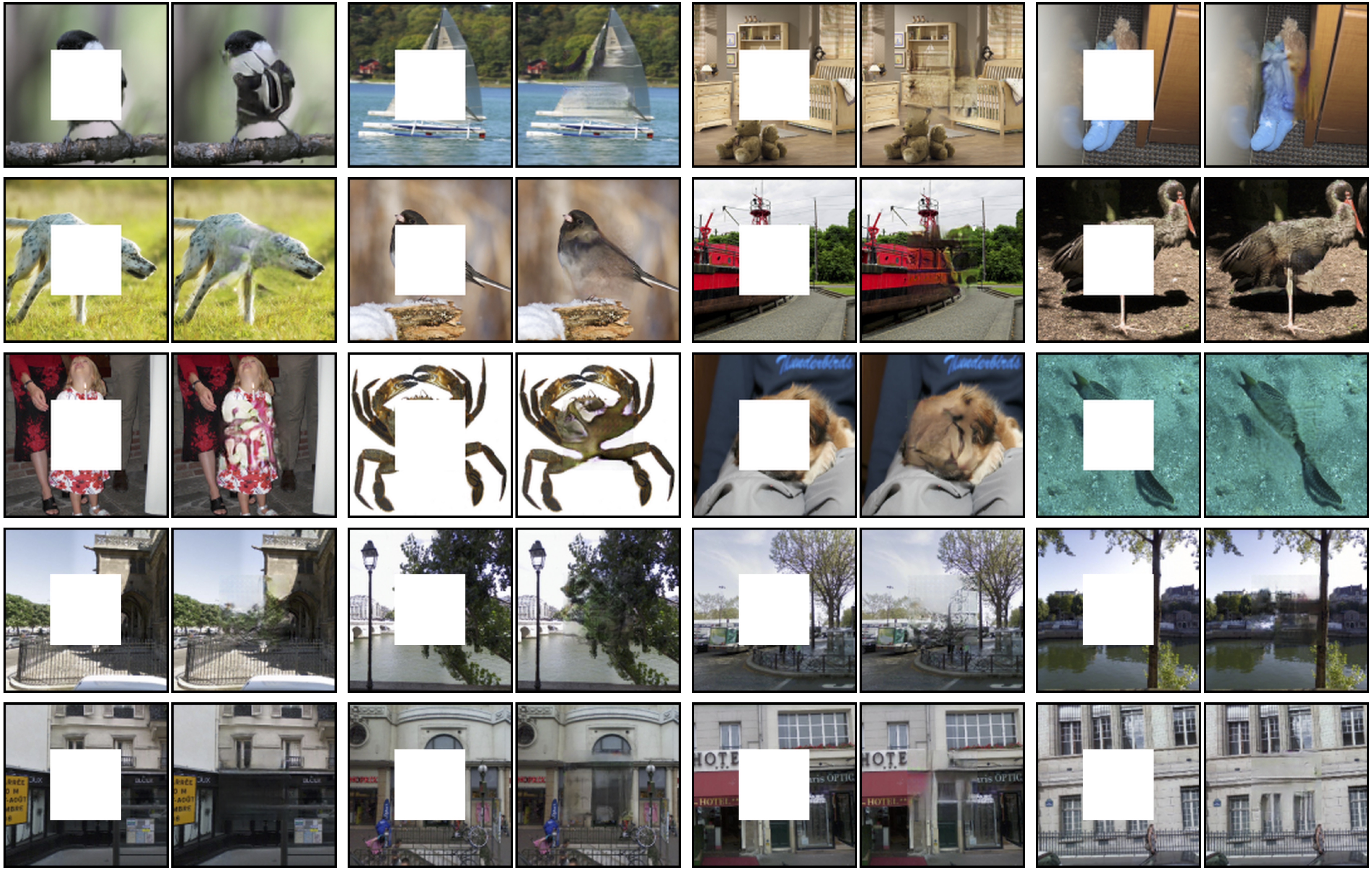

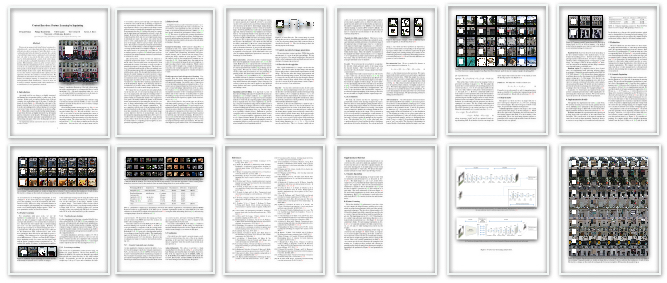

These are Context Encoder results on random crops of "held-out" images. Center half region is inpainted by our method. Browser images appear on a rolling basis so you can still see results while full file is being loaded. All the models were trained completely from scratch. 1.2M-Imagenet model was trained on complete 1.2M image-set of ILSVRC'12 for 110 epochs on Titan-X GPU and took one month to train. 100K-Imagenet model was trained on a random subset of 100K-images from ILSVRC'12 for 500 epochs spanned over 6 days. This 100K set was chosen at random, and we believe results shouldn't change if this set is chosen truly randomly. However, the list of exact 100K image ids is available for download below. Results are shown on images from ILSVRC'12 validation set. |

|

1.2M-Imagenet Trained (110 epochs) [Browse Image Results] or [Download Tar 82MB] [Browse Patch Results] or [Download Tar 76MB] |

|

100K-Imagenet Trained (500 epochs) [Training 100K Image IDs] [Browse Image Results] or [Download Tar 82MB] [Browse Patch Results] or [Download Tar 76MB] |

|

StreetView Inpainting [Browse Image Results] |

[paper 15MB]

[arXiv]

[slides]

[paper 15MB]

[arXiv]

[slides]

|

Citation |

|

@inproceedings{pathakCVPR16context,

Author = {Pathak, Deepak and

Kr\"ahenb\"uhl, Philipp and

Donahue, Jeff and

Darrell, Trevor and

Efros, Alexei},

Title = {Context Encoders:

Feature Learning by Inpainting},

Booktitle = CVPR,

Year = {2016}

}

|

|

|

|

Acknowledgements |

Template: Colorful people! |