|

|

Scene appearance from the

point of view of a light source is called a reciprocal or dual view. Since

there exists a large diversity in illumination, these virtual views may be

non-perspective and multi-viewpoint in nature. In this paper, we

demonstrate the use of occluding masks to recover these dual views, which

we term shadow cameras. We first show how to render a single reciprocal

scene view by swapping the camera and light source positions. We then

extend this technique to multiple views and build a virtual shadow camera

array. We also capture non-perspective views such as orthographic,

cross-slit and a pushbroom variant, while introducing novel applications

such as converting between camera projections and removing catadioptric

distortions. Finally, since a shadow camera is artificial, we can manipulate

any of its intrinsic parameters, such as camera skew, to create perspective

distortions.

|

Publications

"Shadow Cameras: Reciprocal Views from Illumination Masks"

S. J. Koppal and S. G. Narasimhan

IEEE International Conference on Computer Vision (ICCV),

September 2009.

[PDF]

|

Pictures

|

Virtual perspective view using linear masks:

In (I) we show a ray diagram for

a perspective shadow camera. I(a) and I(b) show two translating linear

masks occluding P at times i and j respectively. The intersection of the

shadows associated with the two masks is a virtual ray, shown at I(c).

This shadow ray passes through the light source, and is associated with a

pixel (i,j) in a virtual image at I(d). In (II) we show a non-convex

plastic object. Using linear masks we obtain the input images, shown at

(III) which create a dual view in (IV). Note the foreshortening effects

of looking down at the object (shortened legs, extended scales) and that

specularity locations do not change. Also note that shadows in (II) are

occluded in (IV).
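
The remapping described above can be sketched in a few lines of NumPy. This is an illustrative sketch only, not the authors' implementation. It assumes that each mask sweep is stored as a frame stack, that the shadow time at a camera pixel is the frame of minimum intensity, and that the dual pixel (i,j) receives the pixel's intensity from a fully lit frame. The names `shadow_times`, `render_dual_view`, `sweep_a`, `sweep_b` and `lit_image` are hypothetical.

```python
import numpy as np

def shadow_times(stack):
    """Per-pixel frame index at which the mask shadow passes.

    stack has shape (T, H, W); the shadow is assumed to be the
    intensity minimum over time at each pixel.
    """
    return np.argmin(stack, axis=0)

def render_dual_view(lit_image, sweep_a, sweep_b):
    """Scatter camera pixels into a dual image indexed by shadow times (i, j).

    lit_image: (H, W) frame with no mask present (assumed available).
    sweep_a, sweep_b: (T_a, H, W) and (T_b, H, W) stacks, one per mask.
    """
    i = shadow_times(sweep_a)
    j = shadow_times(sweep_b)
    dual_sum = np.zeros((sweep_a.shape[0], sweep_b.shape[0]))
    dual_cnt = np.zeros_like(dual_sum)
    # Several camera pixels may map to the same dual pixel: accumulate them.
    np.add.at(dual_sum, (i, j), lit_image)
    np.add.at(dual_cnt, (i, j), 1)
    with np.errstate(invalid="ignore", divide="ignore"):
        dual = dual_sum / dual_cnt
    dual[dual_cnt == 0] = np.nan  # dual pixels that no camera pixel reached
    return dual
```

Dual pixels that receive no sample are left as NaN here; filling them by interpolation would be a separate design choice.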

|

|

Virtual camera array from multiple linear masks:

In (I) we show a

grid mask with six intersections. We collect data using the horizontal

and vertical masks separately, as shown in (II). We then place light

sources at the six intersections of the mask, capturing six images. We

swap these images using Helmholtz reciprocity and the minima locations of

the mask shadows. The result is a virtual camera array, and in (III) and

(IV) we show the two rows of the array. Note that the images are stereo

rectified both horizontally and vertically.

|

|

Non-perspective and multi-view cameras:

In (II) we show a

cross-slit view created when the motion vectors of the linear masks do

not intersect and the light source is moved between experiments. We

demonstrate the distortion effects in (III). In (IV) we create a

pushbroom-like view using the shadow from a single moving mask. Note the

octopus in (V) appears illuminated by two light sources and each tentacle

has two shadows.

|

|

Unwarping refractive distortions:

In (I) we show how

the dual view of an object seen through refractive elements can be warp-free.

This occurs when the light-source illuminates the object without being

occluded by the refractive elements. In (II) we show an image of a planar

poster through two thick glass objects. (III) shows the dual view, which

has undistorted, readable text.

|

|

Controlling intrinsic parameters of shadow cameras:

Since shadow cameras are virtual, we have control over

the locations of the pixels in space. In (I) we show a dual view created

by two linear masks moving with uniform motion at 90 degrees to each

other. Varying the speed of these two perpendicular masks or adding a

third linear mask that is not perpendicular to either of the two results

in skewed and stretched images as seen in (II).
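
One way to picture this control is as a linear map applied to the shadow times before they are used as pixel coordinates. The sketch below is an assumption-laden illustration rather than the method used in the paper: it models a change of mask speed as a per-axis scale and a non-perpendicular mask as a skew term. The function `warp_dual_coords` and its parameters are hypothetical.

```python
import numpy as np

def warp_dual_coords(i, j, scale_u=1.0, scale_v=1.0, skew=0.0):
    """Map shadow times (i, j) to dual pixel coordinates (u, v).

    u = scale_u * i + skew * j
    v = scale_v * j
    scale_u and scale_v stand in for relative mask speeds; skew stands in
    for a mask direction that is not perpendicular to the other mask.
    """
    A = np.array([[scale_u, skew],
                  [0.0, scale_v]])
    ij = np.stack([np.ravel(i), np.ravel(j)]).astype(float)
    uv = A @ ij
    shape = np.shape(i)
    return uv[0].reshape(shape), uv[1].reshape(shape)
```

With the default arguments the coordinates are unchanged; a non-zero `skew` shears the dual image and unequal scales stretch it.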

|

|

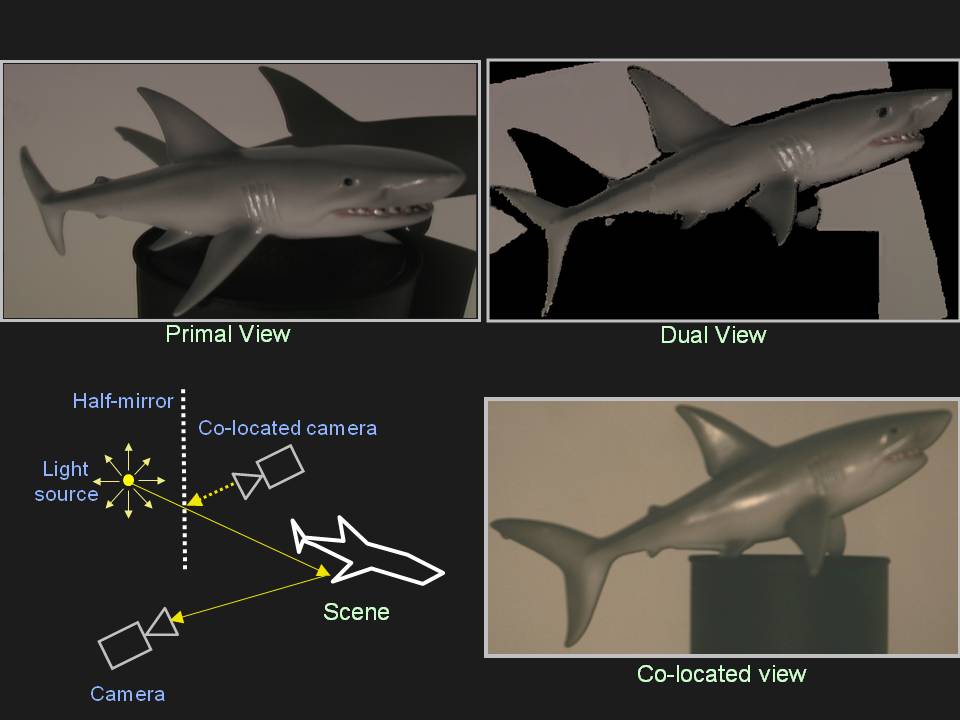

Qualitative evaluation using colocated light-source and camera:

In

this example we show the primal and dual views for a plastic toy.

Since the primal view is captured under perspective projection and illuminated

orthographically, the dual view is orthographic and appears illuminated by

a near light-source. We then take a second, real orthographic view of

the scene by colocating another camera with the light source, as shown

in the ray diagram. This real image is qualitatively similar to our

rendered image. Note that colocation does not create a reciprocal

pair, which explains the illumination differences in the two images.

|

|

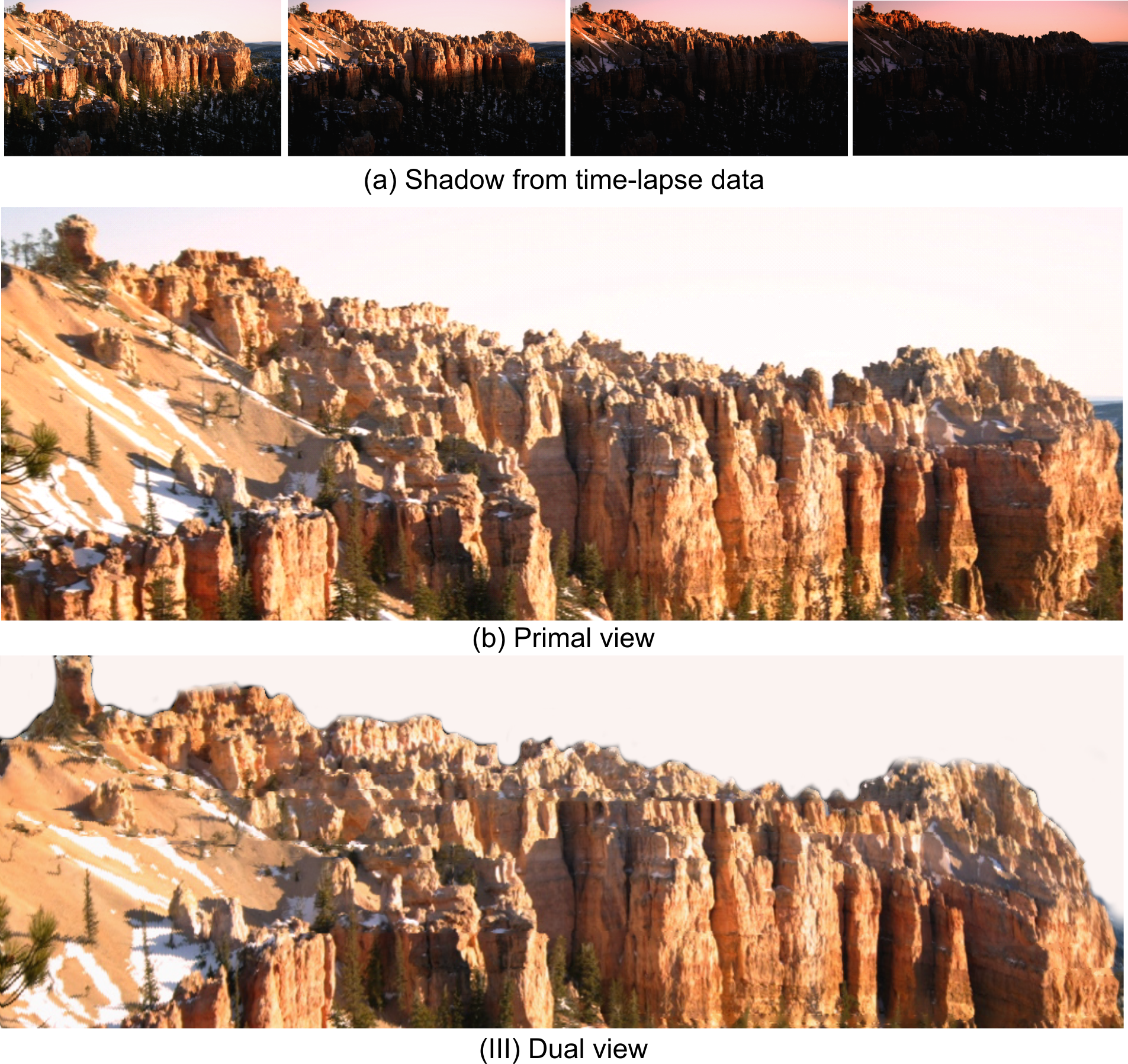

Shadow pushbroom camera for web-cam data:

At the top of the image we

show time-lapse photographs of a cliff occluded by the shadow of an

(unseen) neighboring mountain. The edge of the mountain can be

detected, and we can create a second, shadow-pushbroom view of the

scene. This view is from the top of the (unseen) neighboring mountain:

for example, the snow-fields on the left appear to be viewed from

above, as compared to the original image.
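
The edge-detection step can be sketched as a per-pixel search for the moment a pixel darkens in the time-lapse. This is a minimal sketch under stated assumptions, not the procedure from the paper: it assumes grayscale frames, a shadow that sweeps across each pixel once, and that the shadow edge corresponds to the largest frame-to-frame intensity drop. The name `shadow_arrival_time` is hypothetical.

```python
import numpy as np

def shadow_arrival_time(frames):
    """Index of the first shadowed frame at each pixel.

    frames has shape (T, H, W). The shadow edge is taken to be the
    largest intensity drop between consecutive frames (an assumption;
    real web-cam data would need smoothing and exposure normalization).
    """
    drops = frames[:-1] - frames[1:]  # positive where a pixel darkens
    return np.argmax(drops, axis=0) + 1
```

The resulting arrival-time map plays the role of the single mask coordinate in the pushbroom-like view.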

|

|

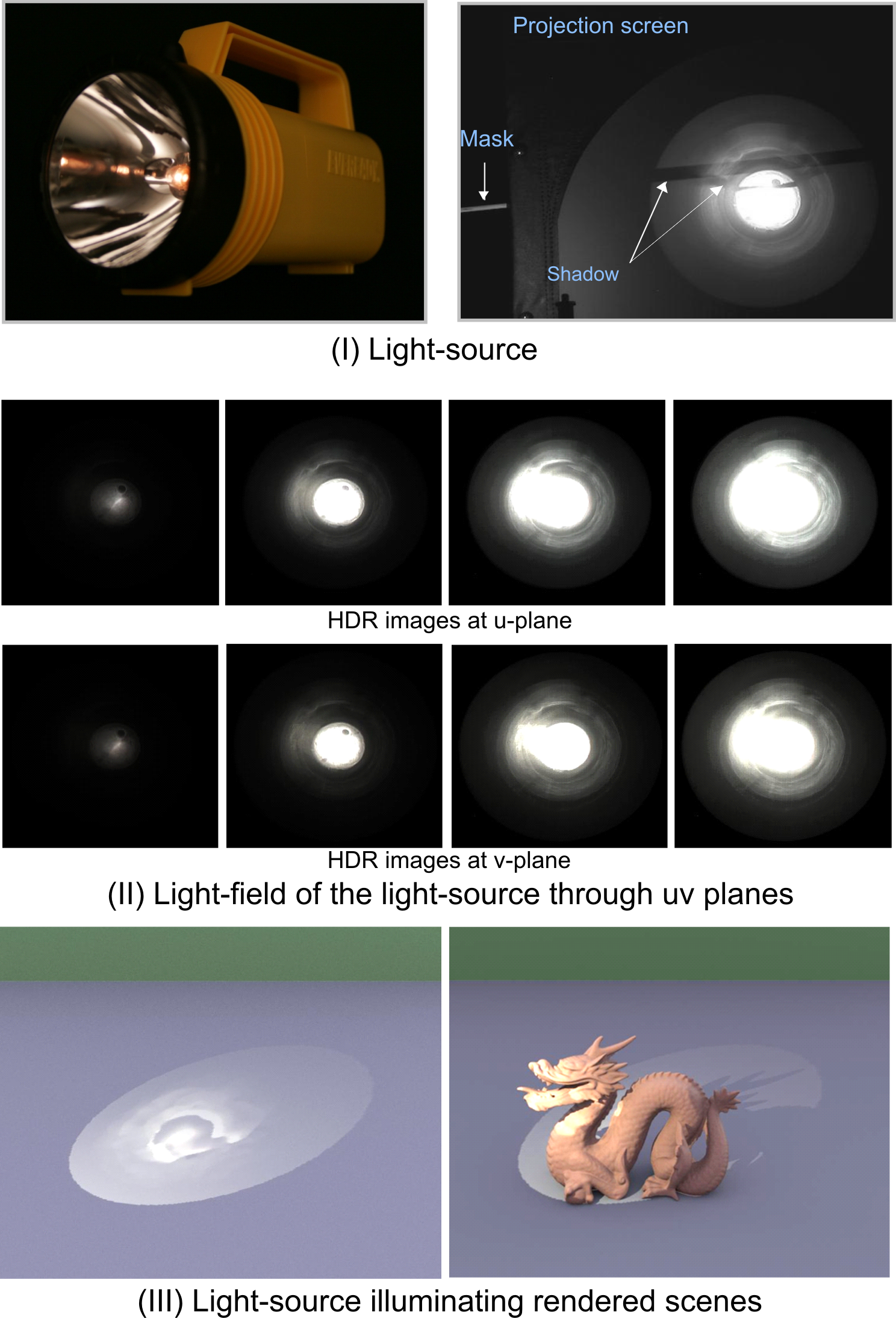

Capturing the light-field of a light-source:

We place the camera and a

light-source on opposite sides of a translucent screen. The source

creates a pattern on the screen, and we capture such illumination

patterns for two screen positions. Correspondences between the two

illumination patterns are obtained using the horizontal and vertical

shadows of a moving linear mask, without changing the position of the

light-source. Our light-source light-field capture method uses four 1D

sweeps of the linear opaque mask, which differs from other approaches

that use time-intensive 2D raster scans. Finally, we can render the

light-source in any computer generated scene.
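
Once correspondences between the two screen positions are known, each matched pair defines one ray leaving the source. The sketch below illustrates that two-plane construction. It assumes a planar screen perpendicular to the z-axis that is translated along z between captures, and point coordinates already expressed in a common metric frame; `rays_from_two_planes` and its arguments are hypothetical names.

```python
import numpy as np

def rays_from_two_planes(xy0, z0, xy1, z1):
    """Build rays from matched points on two parallel screen positions.

    xy0, xy1: (N, 2) matched screen points at depths z0 and z1.
    Returns ray origins (N, 3) on the first plane and unit directions (N, 3).
    """
    xy0 = np.asarray(xy0, dtype=float)
    xy1 = np.asarray(xy1, dtype=float)
    p0 = np.column_stack([xy0, np.full(len(xy0), float(z0))])
    p1 = np.column_stack([xy1, np.full(len(xy1), float(z1))])
    d = p1 - p0
    d /= np.linalg.norm(d, axis=1, keepdims=True)
    return p0, d
```

Each ray would additionally carry the intensity observed on the screen, which this sketch leaves out.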

|

|

|