Roger B. Dannenberg


Photo by Alisa.

Music Performance over Networks and the Latency Problem

Audio delay or latency over digital networks results from the finite speed of light, especially over long distances, and from the need to delay audio at the receiving end to ensure that audio samples can be delivered in an uninterrupted stream.

It seems that the audio delay of platforms such as Zoom can be cut by more than half, especially within city-sized regions, although the software that does so is less robust and harder to use. Even so, most musicians are unlikely to be happy with the results.

Many teaching and performing musicians are experimenting with performance over the Internet and learning that delay, or latency, is a critical limiting factor for traditional performance. This post discusses the sources of audio latency over networks and what you can do about it.

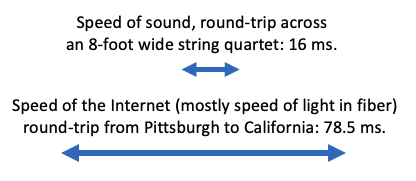

First, just as the speed of sound is a limiting factor in live acoustic music performance (soloists stand next to the piano, string quartets sit together, orchestras that are 40 feet across can never swing like a jazz quartet that’s 10 feet across), the speed of light is a fundamental limit for network music.

If you are interested in great distances, say, Pittsburgh to the West Coast, that’s about 40 milliseconds (ms) at the speed of light, comparable to the delay across an orchestra that’s 40 feet wide (sound travels about 1 foot per millisecond).

That’s just the limit due to physics. Within a metropolitan area, network transmission times drop to around 15 ms each way (some of it due to the speed of light, but mostly due to hops from one network to another as the data traverses service providers and routers).
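To check these comparisons numerically, here is a small sketch that converts a distance into a one-way propagation delay. The speeds are assumptions rather than measurements: about 343 m/s for sound in room-temperature air, and roughly two-thirds of the vacuum speed of light (about 200,000 km/s) for light in optical fiber. The constant and function names, and the 30 km metro path, are illustrative.

```python
# Approximate propagation speeds (assumptions, not measurements).
SPEED_OF_SOUND_M_PER_S = 343.0   # sound in air at about 20 °C
SPEED_IN_FIBER_M_PER_S = 2.0e8   # light in optical fiber, roughly 2/3 of c
METERS_PER_FOOT = 0.3048


def delay_ms(distance_m: float, speed_m_per_s: float) -> float:
    """One-way propagation delay in milliseconds over a straight path."""
    return distance_m / speed_m_per_s * 1000.0


if __name__ == "__main__":
    orchestra_width_m = 40 * METERS_PER_FOOT
    print(f"Sound across 40 ft: "
          f"{delay_ms(orchestra_width_m, SPEED_OF_SOUND_M_PER_S):.1f} ms")
    # Hypothetical metro-scale fiber path of 30 km.
    print(f"Light through 30 km of fiber: "
          f"{delay_ms(30_000, SPEED_IN_FIBER_M_PER_S):.2f} ms")
```

Under these assumptions, 40 feet of air costs about 35 ms, so "about a foot per millisecond" is a fair rule of thumb. A 30 km fiber path adds only about 0.15 ms, which is consistent with the point that most of a metropolitan area’s 15 ms comes from routing hops rather than from propagation.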

Beyond the limits of the speed of light and engineering choices in networks, there is additional latency due to computers and software at each end of an audio connection (and sometimes in the middle).

I build a lot of high-performance audio software for music, and it’s reasonable to get latencies down to around 10 ms at each end, but that already raises the round-trip time from at least 30 ms (15 ms each way) to 50 ms (2 × (15 ms + 10 ms)).
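The round-trip arithmetic above can be written as a small latency budget. This sketch assumes, as the text does, that the 10 ms figure covers all audio input and output processing at one end, and that a round trip crosses the network twice and passes through the audio software at both ends. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Latency components in milliseconds."""
    network_one_way_ms: float  # network transmission time in each direction
    per_end_audio_ms: float    # audio input + output processing at one end

    def round_trip_ms(self) -> float:
        # Two network crossings plus the audio processing at both ends.
        return 2 * (self.network_one_way_ms + self.per_end_audio_ms)


if __name__ == "__main__":
    print(LatencyBudget(15, 0).round_trip_ms())   # network alone
    print(LatencyBudget(15, 10).round_trip_ms())  # with 10 ms per end
```

Keeping the components separate makes it easy to see which term dominates when you plug in your own measurements.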

I did some experiments with Zoom and measured around 170 ms latency one-way. Another service, Jitsi, was 330 ms one-way. This works for conversations, but not for rhythmic music performance.

I was about to write my own software to see how much lower latency was possible when I found SoundJack, a non-commercial system by a German computer scientist. I think he knows all the tricks I do, and he has spent a lot of time on it.

So I tried SoundJack. I measured 147 ms round-trip to Boston, and I estimate that would be 124 ms within Pittsburgh. In contrast, Zoom’s round trip is 340 ms, so a vast improvement is possible. However, latency of that magnitude is still not going to be comfortable for playing songs together.

I do not have a full explanation for the gap between the 50 ms minimum round-trip latency and the latency I found with SoundJack. I suspect that by optimizing parameters SoundJack could get down to around 100 ms, but the author says that parameter optimization is too complicated to automate, and I don’t think young music students and music teachers are going to succeed at tweaking network settings by hand. Using a wired network connection instead of wireless might shave off a few milliseconds, but that’s a small improvement.

I think most of the additional latency I haven’t explained is due to jitter, which is the variation in transmission time across the network. When I measure round-trip network time to my own service provider, verizon.net, I see about 15 ms from home in Squirrel Hill, but frequently times are 25 ms or more, and occasionally over 200 ms. To counter these long delays, audio systems intentionally add delay (referred to as buffering), so that if audio information shows up late, the receiver is still playing audio stored in a buffer and the lateness makes no difference. But of course, when you compensate for the occasional late data, you basically make everything late, which is why systems like Zoom have hundreds of milliseconds of latency.
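Jitter can be quantified from packet timestamps. One standard estimator is the interarrival jitter defined for RTP in RFC 3550 (section 6.4.1), which exponentially smooths the change in transit time between consecutive packets with a gain of 1/16. The sketch below follows that definition; the function name is illustrative, and the example timestamps are made up rather than taken from the measurements above.

```python
from typing import Sequence


def interarrival_jitter(send_ms: Sequence[float], recv_ms: Sequence[float]) -> float:
    """Smoothed jitter estimate in the style of RFC 3550, section 6.4.1.

    send_ms[i] and recv_ms[i] are the send and arrival times of packet i.
    Only differences in transit time matter, so a constant offset between
    the sender's and receiver's clocks cancels out.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("send and receive lists must have the same length")
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_ms, recv_ms):
        transit = received - sent
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0  # exponential smoothing, gain 1/16
        prev_transit = transit
    return jitter


if __name__ == "__main__":
    sends = [0, 10, 20, 30, 40]
    steady = [15, 25, 35, 45, 55]      # constant 15 ms transit
    one_late = [15, 25, 35, 245, 55]   # one packet delayed by 200 ms extra
    print(interarrival_jitter(sends, steady))
    print(interarrival_jitter(sends, one_late))
```

A steady 15 ms transit gives zero jitter, while a single 200 ms spike pushes the estimate above 24 ms, illustrating how occasional long delays dominate the picture.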

This animation illustrates what’s going on in network audio. Audio packets are transmitted at regular intervals, but due to jitter, the arrival times are not so regular, as illustrated by the circles coming in from the left. Packets are stored in a buffer (middle) and eventually delivered at regular intervals as audio. When an incoming packet is late, the audio continues without interruption because we’ve been holding a reserve, but keeping that reserve means everything is delayed. You can adjust the Network Jitter and Audio Latency in this model. Notice that higher jitter and lower latency both lead to more dropouts in the audio output. (Numbers here are arbitrary, but you can think of them as milliseconds, in which case we’ve slowed things down by a factor of 5.)
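A model like the one in the animation can be approximated in a few lines. This sketch assumes packets are sent at a fixed interval, each is delayed by a fixed base delay plus random jitter drawn uniformly from a range, and the receiver plays each packet at a fixed latency after it was sent. Real network jitter is not uniform (it has occasional very long delays, as the measurements above show), so the function name and parameter values are purely illustrative.

```python
import random


def count_dropouts(num_packets: int, interval: float, base_delay: float,
                   max_jitter: float, latency: float, seed: int = 0) -> int:
    """Simulate a fixed-delay playout buffer and count late packets.

    Packet i is sent at i * interval and arrives after base_delay plus a
    random extra delay drawn uniformly from [0, max_jitter]. The receiver
    plays packet i at i * interval + latency; a packet that has not
    arrived by then causes a dropout.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    dropouts = 0
    for i in range(num_packets):
        send_time = i * interval
        arrival = send_time + base_delay + rng.uniform(0.0, max_jitter)
        playout = send_time + latency
        if arrival > playout:
            dropouts += 1
    return dropouts


if __name__ == "__main__":
    for latency in (20, 40, 60, 80):
        n = count_dropouts(1000, interval=10, base_delay=15,
                           max_jitter=50, latency=latency)
        print(f"latency {latency:>3}: {n} dropouts out of 1000")
```

For a given seed, raising `latency` can only reduce the dropout count, and raising `max_jitter` can only increase it, which matches the behavior you can see in the animation.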

The use of buffering is a fundamental trade-off between fewer interruptions and dropouts at higher latency and lower latency with more dropouts. Zoom opts for fairly high latency because we can tolerate it in conversation; it’s a good trade-off for voice. Of course, dropouts are really annoying for music too, so we cannot just ignore jitter and eliminate buffering. My measurements with SoundJack were based on enough buffering that the audio seemed mostly continuous and dropout-free, but it was far from perfect.
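One way to make this trade-off concrete is to choose the playout delay from a target dropout rate. The sketch below assumes a list of observed one-way packet delays and a fixed (non-adaptive) buffer, and picks an empirical quantile of the delays; the function name and sample values are hypothetical. Many real systems instead adapt the delay over time as conditions change.

```python
import math
from typing import Sequence


def playout_delay_for_target(delays_ms: Sequence[float],
                             max_late_fraction: float) -> float:
    """Smallest fixed playout delay that keeps the fraction of late packets
    at or below max_late_fraction, given observed delays in milliseconds.

    A packet is late when its delay exceeds the playout delay, so we return
    an order statistic of the sorted delays (an empirical quantile).
    """
    if not delays_ms:
        raise ValueError("need at least one delay sample")
    if not 0.0 <= max_late_fraction < 1.0:
        raise ValueError("max_late_fraction must be in [0, 1)")
    ordered = sorted(delays_ms)
    n = len(ordered)
    allowed_late = math.floor(max_late_fraction * n)  # packets we may lose
    # Cover everything except the allowed_late largest delays.
    return ordered[n - 1 - allowed_late]


if __name__ == "__main__":
    samples = [15.0] * 9 + [200.0]  # hypothetical: one long outlier in ten
    print(playout_delay_for_target(samples, 0.0))  # no late packets allowed
    print(playout_delay_for_target(samples, 0.1))  # one in ten may be late
```

Refusing to drop anything forces the buffer to cover the single 200 ms outlier, while accepting one late packet in ten brings the delay back down to 15 ms.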

Maybe if the pandemic goes on, there will be increasing pressure to provide lower-latency and lower-jitter streaming on the Internet. At least jitter can be solved, mainly through reserved bandwidth and priorities; the telephone network is an example of such a network. But Internet standards are pretty entrenched, so I don’t see any big improvements coming to consumers in the near future.

Wrapping up, I think you can do much better than current voice-and-video-over-IP offerings, but the best is going to be in the 100 to 150 ms round-trip range, which is at best unnatural for musicians.

One thing that I have not seen tried is to provide a pianist coach with an electronic keyboard that is silent locally but sends a signal to the student. The student sings along and a mix of the keyboard sound and student sound is returned to the coach. It would be unnerving to play 150 ms ahead of the actual sound, but church pipe organists sometimes deal with long acoustic latencies, so this seems to be a learnable skill. If anyone can do it, musicians can.
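To put 150 ms in musical terms, a short sketch can convert a delay into beats. It assumes the beat is a quarter note; the 120 BPM tempo in the example is arbitrary, and the function name is illustrative.

```python
def latency_in_beats(latency_ms: float, tempo_bpm: float) -> float:
    """Express a delay as a fraction of a beat at the given tempo."""
    ms_per_beat = 60_000.0 / tempo_bpm  # one minute divided by beats per minute
    return latency_ms / ms_per_beat


if __name__ == "__main__":
    print(latency_in_beats(150, 120))  # 150 ms at 120 BPM
    print(latency_in_beats(125, 120))  # a sixteenth note at 120 BPM
```

At 120 BPM a beat lasts 500 ms, so 150 ms is 0.3 of a beat, slightly longer than a sixteenth note (125 ms). That is how far ahead of what they hear the coach would have to play.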