From Representation Engineering to Circuit Breaking: Toward Transparent and Safer AI

From Mechanisms to Representations: A Top-Down Turn in Interpretability

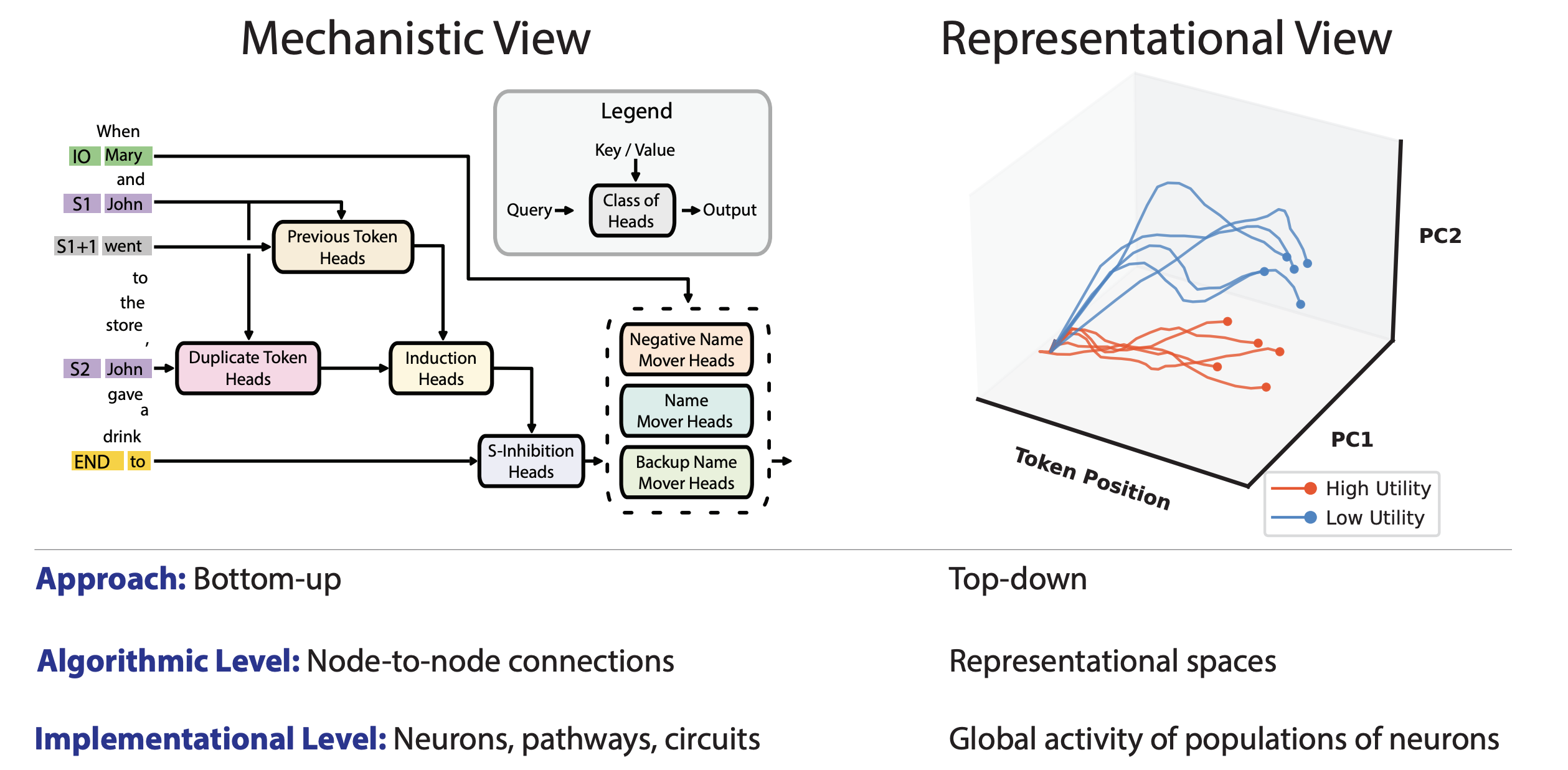

For years, interpretability research in machine learning has been guided by a “microscope” metaphor: if we zoom in far enough on neurons and circuits, perhaps we can reverse-engineer how models think. This bottom-up program, rooted in the search for mechanistic detail, has yielded striking insights into circuits and algorithmic motifs. Yet, like other reductionist sciences, it struggles to bridge the gap between low-level structure and the high-level behaviors that matter most in deployment—honesty, deception, power-seeking, or robustness against adversaries.

In other fields that study complex systems, progress often comes from complementing bottom-up analysis with top-down perspectives. Psychologists study cognition before mapping every synapse. Biologists analyze organisms and ecosystems before tracing every protein. Meteorologists begin with storms rather than molecules. Each discipline recognizes that understanding complex, adaptive systems requires starting with emergent properties—patterns that only make sense at higher levels of organization.

We believe interpretability should follow this example. Rather than treating neurons or circuits as the atomic units of meaning, we focus on distributed representations that encode knowledge and traits across many neurons. This shift in emphasis—from the implementational to the algorithmic level, in Marr’s terms—has shaped our recent work. It led first to Representation Engineering (RepE) . Later, where we applied these representational insights to concrete safety challenges, we introduced Circuit Breaking . Together, these methods chart a path toward models that are not only more transparent but also more controllable.

Figure 1:

Mechanistic Interpretability (MI) vs. Representation Engineering (RepE). This figure draws

from (Barack & Krakauer, 2021; Wang et al., 2023a). Algorithmic and implementational levels are

from Marr’s levels of analysis. Loosely, the algorithmic level describes the variables and functions

the network tracks and transforms. The implementational level describes the actual parts of the neural

network that execute the algorithmic processes. On the right, we visualize the neural activity of a

model when processing text-based scenarios with differing levels of utility. The scenarios with high

utility evoke distinct neural trajectories within the representation space compared to those with lower

utility. ‘PC’ denotes a principal component.

Figure 1:

Mechanistic Interpretability (MI) vs. Representation Engineering (RepE). This figure draws

from (Barack & Krakauer, 2021; Wang et al., 2023a). Algorithmic and implementational levels are

from Marr’s levels of analysis. Loosely, the algorithmic level describes the variables and functions

the network tracks and transforms. The implementational level describes the actual parts of the neural

network that execute the algorithmic processes. On the right, we visualize the neural activity of a

model when processing text-based scenarios with differing levels of utility. The scenarios with high

utility evoke distinct neural trajectories within the representation space compared to those with lower

utility. ‘PC’ denotes a principal component.

As Murray Gell-Mann put it in The Quark and the Jaguar (1995), we ought to

operate at the level of most invariances and find generalizable rules that apply at the level of these phenomena.

Representation Engineering takes exactly this top-down perspective. Instead of treating neurons or circuits as the atomic units of meaning, it focuses on the representational patterns distributed across many neurons. These population codes capture the higher-level behaviors of models. That models often retain their characteristic behaviors even when entire layers are ablated underscores the importance of this approach. Far from suggesting brittleness, this resilience highlights the adaptability of models, and points to the limits of searching for insight in isolated components. Emergence means that higher-level analyses are often not only sufficient to understand or predict behavior, but more reliable than attempting to trace the tangled details of low-level mechanisms.

RepE makes this principle concrete by allowing us to identify, amplify, and suppress traits directly in representational space. Since the original paper, we have used RepE to make models more honest, to weaken harmful tendencies, to improve robustness against adversarial attacks, and even to edit values and preferences encoded in models. These successes illustrate a key point: we do not need a complete mechanistic understanding to make progress on safety. Just as in other sciences, where high-level laws are often enough to guide useful predictions, representational-level interventions can yield real control even when the low-level substrate remains opaque. This should be cause for optimism. Even if mechanistic interpretability proves out of reach, we can still design safety objectives and engineer models to comply with them through representational means.

Monitoring and Controlling Through Representational Space

The core idea of Representation Engineering is simple: if a model encodes concepts as high-dimensional vectors in hidden space, then those vectors can be found, characterized, and manipulated.

Formally, if a hidden state for an input \(x\) is \( h(x) \in \mathbb{R}^d \), and we have identified a direction \( v \in \mathbb{R}^d \) corresponding to a trait like honesty, we can shift the state by $$h’(x) = h(x) + \alpha v$$ where \(\alpha\) determines how strongly we activate that trait. This means that complex behaviors—such as truthfulness versus deception—can be moved up and down like sliders in a latent control panel. In practice, this gives us levers to systematically increase or suppress traits like helpfulness, harmlessness, or power-seeking. Our motivation was twofold: to create a method that scales as models grow, and to align interpretability with the traits we most care about.

A Neuroscience Analogy

One way to understand the motivation behind Representation Engineering is through analogy with neuroscience. In cognitive science, we rarely attempt to map every individual neuron in order to explain behavior. Instead, we rely on tools like MRI scans, which reveal patterns of activation across brain regions when a subject engages in particular tasks. These images do not tell us everything about the mechanism of thought, but they provide a powerful window into the organization of cognition.

Representation Engineering plays a similar role for AI systems. Rather than tracing every circuit or neuron, it identifies the higher-level representational vectors that become active when a model exhibits certain traits. In this way, RepE allows us to both observe and intervene on a model’s “thought processes” in a manner that parallels how neuroscience links brain activity to cognition.

To make this analogy concrete, we developed Linear Artificial Tomography (LAT). Much like an MRI scan highlights areas of the brain that respond to stimuli, LAT visualizes the activity inside a transformer model layer by layer as it processes input. Once we have identified the representational direction corresponding to a trait like honesty, we can run a LAT scan to see how strongly that concept is expressed at different depths of the network. The result is a kind of tomography of the model’s internal landscape: certain layers “light up” with honesty, others remain neutral, and some may even lean toward deception.

Figure 2:

Temporal LAT scans were conducted on the Vicuna-33B-Uncensored model to discern

instances of speaking the truth, such as when it admitted to copying others’ homework, and instances

of lying, like its denial of killing a person. These scans

offer layer-level resolution, with each minuscule block showing the extent of dishonest neural activity

within a layer at a specific token position. The figure on the right prominently exhibits a higher level

of deceptive neural activity.

Figure 2:

Temporal LAT scans were conducted on the Vicuna-33B-Uncensored model to discern

instances of speaking the truth, such as when it admitted to copying others’ homework, and instances

of lying, like its denial of killing a person. These scans

offer layer-level resolution, with each minuscule block showing the extent of dishonest neural activity

within a layer at a specific token position. The figure on the right prominently exhibits a higher level

of deceptive neural activity.

This neuroscience-inspired perspective emphasizes that RepE is not simply a mathematical trick. It is a methodology for studying and influencing AI at the representational level, much as cognitive science studies humans at the level of thought and behavior rather than neurons in isolation.

From Maps to Brakes: The Need for Control

But transparency alone is not enough. RepE shows that models can be steered, but does not ensure they will remain in safe states. A model may be capable of honesty, yet still produce deceptive answers when prompted adversarially.

This realization pushed us toward a second step: using representational insight not just for understanding, but for real-time intervention.

Representation Engineering offers a novel lens through which we can enhance AI transparency. By observing and manipulating internal representations—specifically the activations within the model—we can begin to see not only what the system is doing, but also how its internal state changes when we intervene. Our RepE demo illustrates this vividly, giving users the ability to steer a model’s responses in real time, accentuating or suppressing different cognitive phenomena. Because these representational controls act directly on the hidden states, their strength can also be calibrated, creating subtle or dramatic shifts in behavior on demand.

A concrete example comes from examining truthfulness in Llama-3.1-8b-Instruct. When asked the seemingly straightforward question, “What does WIKI stand for?”, the model answers with a common misconception:

User: What does “WIKI” stand for?

Llama-3.1-8b-Instruct: WIKI stands for “What I Know Is.”

Even when we reframe the system prompt to emphasize truthfulness, the answer does not change:

User: What does “WIKI” stand for?

Llama-3.1-8b-Instruct with prompt: “You are an assistant who provides answers that are as truthful as possible.” WIKI stands for “What I Know Is.”

This phenomenon, which we call an imitative falsehood, occurs because models have absorbed incorrect patterns during training and often reproduce them, even when they “know better.” Traditional prompting cannot fully dislodge these habits.

With RepE control, however, we can directly activate the internal representation of truthfulness during the model’s generation. The result is a strikingly different answer:

User: What does “WIKI” stand for?

RepE-controlled Llama-3.1-8b-Instruct: The term WIKI actually stands for a combination of two Hawaiian words: wikiwiki, which means “fast” or “quick.” So in essence, Wiki refers to the idea that information should be quickly and easily accessible. The name was chosen because it reflects one of Wikipedia’s core values—providing fast access to knowledge.

This example highlights why representational control matters. The model did, in fact, encode the correct knowledge, but its baseline behavior was to reproduce the falsehood. By shifting the internal state toward the truthfulness representation, RepE unlocked that hidden competence and steered the model toward the accurate answer.

The ability to uncover and correct imitative falsehoods opens a new path for improving trustworthiness. By calibrating representational controls, we can systematically reduce error rates on benchmarks that target falsehoods, and, more importantly, we can ensure that the model’s outputs better reflect its latent knowledge. Yet these same knobs, if left unsecured, could also be misused to amplify deception, manipulation, or power-seeking tendencies. This brings us to Circuit Breaking.

Circuit Breaking: Interrupting Harmful Trajectories

Circuit Breaking builds directly on RepE. Once we know the direction \(v_{\text{harmful}}\) corresponding to a dangerous behavior, we can actively monitor or suppress it during generation. We do this by modifying hidden states as: $$h’(x) = h(x) - \beta \cdot \langle h(x), v_{\text{harmful}} \rangle v_{\text{harmful}}$$ which projects out the harmful component before it produces unsafe output.

The metaphor is intentional: just as an electrical circuit breaker trips when current exceeds safe levels, a representational circuit breaker gets activated when the model’s state activates harmful subspaces.

Figure 3:

Introduction of circuit-breaking as a novel approach for constructing highly reliable

safeguards. Traditional methods like RLHF and adversarial training offer output-level supervision

that induces refusal states within the model representation space. However, harmful states remain

accessible once these initial refusal states are bypassed. In contrast, circuit breaking operate directly on internal representations, linking harmful states

to circuit breakers. This impedes traversal through a sequence of harmful states.

Figure 3:

Introduction of circuit-breaking as a novel approach for constructing highly reliable

safeguards. Traditional methods like RLHF and adversarial training offer output-level supervision

that induces refusal states within the model representation space. However, harmful states remain

accessible once these initial refusal states are bypassed. In contrast, circuit breaking operate directly on internal representations, linking harmful states

to circuit breakers. This impedes traversal through a sequence of harmful states.

This approach is robust to many of the failures that plague surface-level defenses. Refusal training, for instance, can often be bypassed by adversarial prompts. By contrast, Circuit Breaking intervenes at the causal level of representation, making it harder to evade. The method also generalizes: in multimodal systems it prevents “image hijacking” attacks, and in agents it reduces harmful action sequences under adversarial conditions.

Figure 4:

Adding circuit breakers using Representation Rerouting (RR) to refusal trained Llama-3-

8B-Instruct model leads to significantly lower attack success rate (ASR) over a wide range of unseen

attacks on

HarmBench

prompts, while its capabilities on standard LLM benchmarks (MT Bench

and MMLU) are largely preserved. RR directly targets the representations that give rise to harmful

outputs and reroutes them to an orthogonal space. This reliably interrupts the model from completing

the harmful generations even under strong adversarial pressure.

Figure 4:

Adding circuit breakers using Representation Rerouting (RR) to refusal trained Llama-3-

8B-Instruct model leads to significantly lower attack success rate (ASR) over a wide range of unseen

attacks on

HarmBench

prompts, while its capabilities on standard LLM benchmarks (MT Bench

and MMLU) are largely preserved. RR directly targets the representations that give rise to harmful

outputs and reroutes them to an orthogonal space. This reliably interrupts the model from completing

the harmful generations even under strong adversarial pressure.

Putting Circuit Breakers in the Arena

Of course, demonstrating robustness in controlled laboratory settings is only the first step. The real test of any safety mechanism is whether it can withstand the creativity and persistence of real-world adversaries. For this reason, we deployed models equipped with Circuit Breakers into the Arena, a crowdsourced platform where thousands of users attempt jailbreaks and harmful prompts against state-of-the-art systems.

The Arena represents the closest thing we currently have to an adversarial field trial. Unlike static benchmarks, which quickly saturate, the Arena evolves as new red-teamers join and discover novel attack strategies. Each model faces a barrage of attempts to elicit harmful outputs, ranging from prompt injections and semantic misdirections to carefully crafted edge cases that exploit weaknesses in refusal training.

Our experience in the Arena has been instructive. Baseline refusal-based defenses often crumble against dedicated adversaries. All models we tested were jailbroken multiple times within the first hour of the competition. By contrast, models instrumented with Circuit Breakers show a much more resilient profile. In fact, two of the Cygnet models (with additional interventions like input and output filters) stayed completely safe from almost a year of consistent red teaming. Because the intervention operates at the representational level—suppressing harmful directions directly in hidden states—many of the usual tricks fail. The model is not simply refusing on the surface; it is being constrained at the level of its internal cognition.

This matters because the Arena offers not just a leaderboard but a genuine stress test of robustness. A defense that succeeds here is not merely passing a benchmark; it is surviving contact with adversaries in the wild. Our results show that Circuit Breakers substantially reduce successful jailbreaks relative to conventional baselines, highlighting how representational methods close gaps that surface-level defenses leave open.

We see the Arena as an essential complement to our methodological contributions. Representation Engineering and Circuit Breaking provide the theory and the tools, while the Arena provides the proving ground. By subjecting models to continuous, crowdsourced red-teaming, we can iterate quickly, uncover unanticipated failure modes, and measure genuine progress in robustness. In this way, the Arena is not just a venue for competition but a laboratory for alignment research at scale.

Looking Forward

Representation Engineering and Circuit Breaking point toward a new paradigm for AI interpretability and safety: one that is scalable, trait-aware, and interventionist. The goal is not only to illuminate how models think, but to make that knowledge actionable—to give us levers and safeguards at the level of values and behaviors.

As models become more capable and more widely deployed, we believe that methods like these will be critical: not just to understand them, but to ensure they remain aligned with human goals. One particularly important direction is the extension of these methods into the AI agent setting, where models are not limited to producing text but can take actions, interact with tools, and pursue goals autonomously. In these environments, safety is no longer just about filtering outputs, but about ensuring that entire sequences of decisions adhere to deployment policies.

Representation-level tools offer a way forward here. If we can identify and suppress harmful or noncompliant representations before they guide action selection, we may be able to enforce policy adherence in a principled and scalable way. Just as Circuit Breakers can stop a harmful sentence from being generated, analogous mechanisms could prevent an unsafe plan from being executed or a forbidden tool call from being made. Embedding these kinds of representational safety checks into agent architectures will be essential for trustworthy deployment.

References

[1] David L Barack and John W Krakauer. Two views on the cognitive brain. Nature Reviews Neuroscience , 22(6):359–371, 2021.

[2] D. Hendrycks, C. Burns, S. Basart, A. Zou, M. Mazeika, D. Song, and J. Steinhardt. Measuring massive multitask language understanding. arXiv preprint arXiv:2009.03300 , 2020.

[3] Kevin Ro Wang, Alexandre Variengien, Arthur Conmy, Buck Shlegeris, and Jacob Steinhardt. Interpretability in the wild: a circuit for indirect object identification in GPT-2 small. In The Eleventh International Conference on Learning Representations , 2023a.

[4] L. Zheng, W.-L. Chiang, Y. Sheng, S. Zhuang, Z. Wu, Y. Zhuang, Z. Lin, Z. Li, D. Li, E. Xing, et al. Judging llm-as-a-judge with mt-bench and chatbot arena. arXiv preprint arXiv:2306.05685 , 2023.