Unsupervised Video Adaptation for Parsing Human Motion

Haoquan Shen, Shoou-I Yu, Yi Yang, Deyu Meng, Alexander Hauptmann

The image below is an overview of our framework. Our intuition is to refine the model

by alternating the adjustment and retraining processes.

We utilize two constraints in the adjustment process. One is the continuity constraint.

The other one is the tracking constraint as shown below:

Frame f' is the frame 1 second after frame f. a) Pose detection results of frame f. b) Trajectory keypoints of the right arm in frame f.

c) Wrong pose detection results of frame f'. d) Refined pose according to the arm trajectory key point of frame f'.

The image below is a comparison between our model and that of [32], from which we can see a dramatic improvement of our method.

This figure shows the aggregated frames sampled from dancing video clips of two seconds and the pose skeletons obtained by our model and [32], respectively.

For the details of the algorithm, please refer to the paper here.

The code and dataset (FYDP and UYDP) of our paper are available here.

Note: The dataset is for the academic use only!

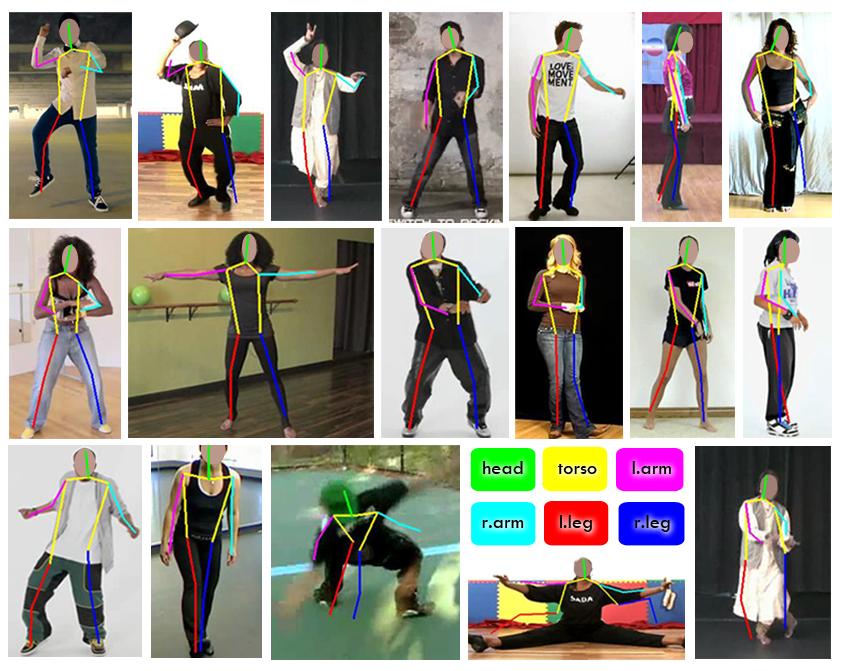

The image below is a glimpse of our dataset.

Please cite our paper blow:

Haoquan Shen, Shoou-I Yu, Yi Yang, Deyu Meng, Alexander Hauptmann,

Unsupervised Video Adaptation for Parsing Human Motion,

European Conference on Computer Vision, 2014.

Contact shenhaoquan@gmail.com if you have any questions.