Watch and Learn: Semi-Supervised Learning of Object Detectors from Videos

|

|

|

|

|

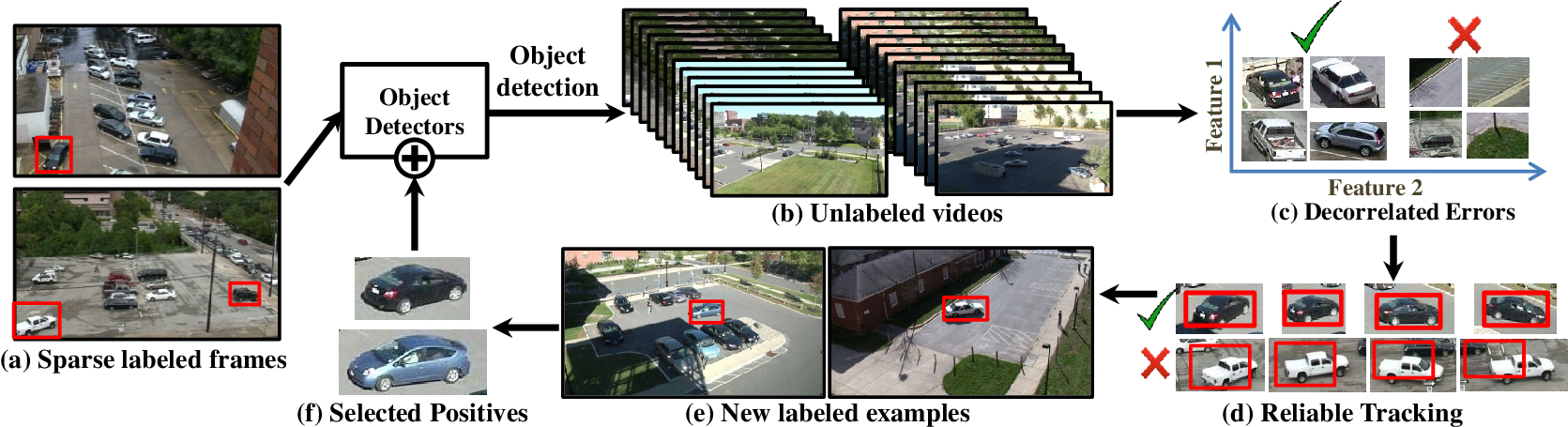

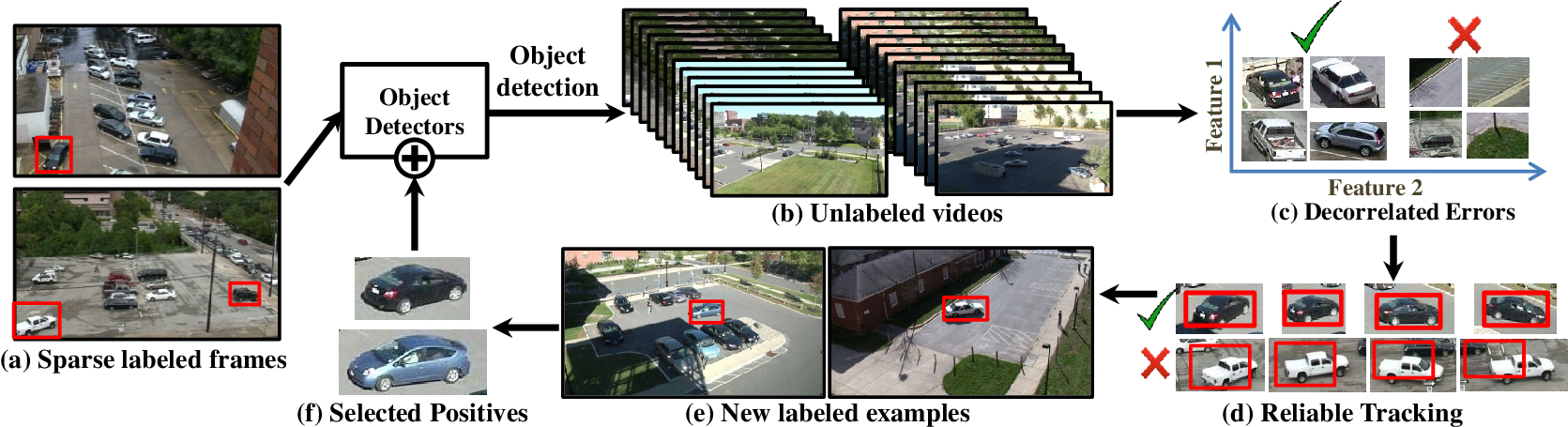

We present a semi-supervised approach that localizes

multiple unknown objects in videos. We address the problem of training object detectors from sparsely labeled video

data. Existing approaches either do not consider multiple

objects, or make strong assumptions about dominant motion of the objects present. In contrast, our approach works

in a generic setting of sparse labels, and lack of explicit

negative data. We show a way to constrain semi-supervised

learning by combining multiple weak cues in videos and exploiting decorrelated errors in a multi-feature modeling of

data. Our experiments demonstrate the effectiveness of our

approach by evaluating our automatically labeled data on

a variety of metrics including quality, coverage (recall), diversity, and relevance to training a object detector.

|

People

Ishan Misra, Abhinav Shrivastava, Martial Hebert

Paper

Acknowledgements

This work was supported in part by NSF Grant IIS1065336, the Siebel Scholarship (for IM), Microsoft Research PhD Fellowship (for AS) and a Google Faculty Research Award.

Copyright notice