Project Overview

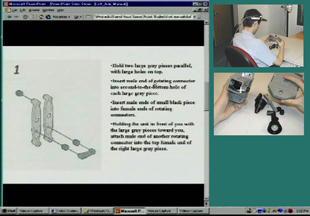

Head-Mounted vs. Scene-Oriented Cameras

Goal: To understand the value of different sources of visual information for collaboration on physical tasks.

Findings: The scene camera was of more value than the head-mounted camera or an audio-only connection, but not as valuable as working side by side.

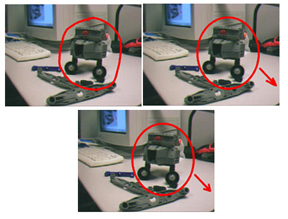

Robot used in our studies

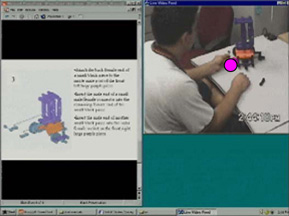

Cursor Pointing Tool

Goal: To assess whether providing remote helpers with a cursor-controlled pointing device that is simultaneously viewed by the worker building the robot will improve collaboration.

Findings: Participants valued the cursor pointing device. They rated it significantly easier to refer to task objects and locations using the cursor tool. However, the presence of the cursor pointer did not improve performance times over those with the scene camera alone.

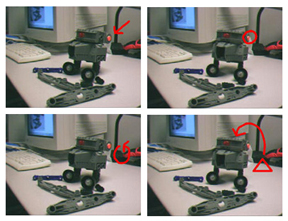

Video Drawing Tools

Goals: To design a new tool to allow remote helpers to communicate gestural information by drawing on a video feed, and to assess the value of this tool for remote collaboration on physical tasks.

Examples of gesture drawing

Gesture in Face-to-Face Communication

Goals: To understand the timing and nature of gestures during collaborative physical tasks, and to understand when workers focus their attention on helpers' gestures.

This work is funded by the National Science Foundation, grant #0208903. The opinions and findings expressed on this site are those of the investigators, and do not reflect the views of the National Science Foundation.