|

The population of competing planning systems has changed over the three competitions. Few systems have competed more than once and no system has appeared in all three competitions. In part, this is a reflection of the speed of development of planning systems, revealing the extent to which the technology of 1998 has been surpassed by that of 2002. It is also a reflection of the growing interest in the series, which has encouraged more competitors to come forward, and of the work involved in taking part which has discouraged previous competitors from repeating their effort. Entering the competition involves more than generating interesting and efficient approaches to solving the planning problem: it demands the ability to construct a system that can respond robustly and reliably when confronted with previously unseen challenges, including domains that were not amongst those used in development of the system. It must be sufficiently well-engineered that its performance is not dominated by poor programming rather than by the real algorithmic effort of solving the problem (careless choice of a data structure can undermine the opportunity to show off a clever new planning algorithm). For systems that use hand-coded rules to guide the planning system there is an additional demand: the time required to read and understand domains sufficiently to construct sensible control knowledge and to then encode and test the knowledge to achieve good performance. The time-table for the testing was relatively compressed (the entire problem suite was generated and delivered to the competitors over a two month period and testing was carried out remotely on a single machine), so those using hand-coded controls were forced to find time to analyse the domains, hypothesise and test rules in intense sessions.

Details of the competing systems can be found in Appendix B. To summarise, many of the fully-automated planners use relaxed plan heuristics to guide a heuristic search. LPG [Gerevini, Saetti, SerinaGerevini et al.2003] uses local search to improve candidate plan structures formed in a Graphplan-style plan graph. Several planners (MIPS, FF and SAPA) also extend the use of relaxed plan heuristics to include numeric features. VHPOP is a partial-order planner, demonstrating that partial-order planning can be competitive with the more recently fashionable heuristic forward state-space search planners. For various reasons, several planners generated only small collections of results, which are disregarded in the analysis.

A few competitors used more than one version of their planner, or more than one parameter setting. We did not attempt to enforce the use of unique versions, but left it to competitors to ensure that we were informed where variations were used. Multiple versions of FF, MIPS and LPG were used. FF submitted almost all data for a version optimised for speed performance. A small subset of data was submitted for a version optimised for quality, showing that there are alternative criteria by which performance can be evaluated. In all of the analyses we report we have used the data generated by FF optimised for speed exclusively. MIPS also offered data in two variants, using slightly different parameter settings. In our analyses we use data for one variant (MIPS) exclusively, except in the case of the Satellite HARDNUMERIC problems in which we used the data from the other variant (MIPS.plain). LPG submitted data in three versions: one based on earliest plan produced, one based on best plan produced over a longer time span and a third which represented a compromise between speed and quality. In fact, since all of the results for the version optimised for quality were generated within a few minutes at most, we chose to use this data exclusively in the analyses that follow. This should be borne in mind when reviewing the results for comparative speed performance of the planners. The performance of LPG relies on certain parameter settings. In most cases, the parameters used for LPG were automatically set, but in a few cases some parameters were set by hand. In their paper, appearing in this issue, Gerevini, Saetti and Serena lpg give new results of an experiment testing their planner with all parameters set automatically. In general they observe no significant difference in the performance of LPG with respect to the data provided for the competition.

The three hand-coded planners that competed represent two alternative approaches to planning: forward state-space search using control rules to prune bad choices and promote good choices, and hierarchical task network (HTN) planning.

|

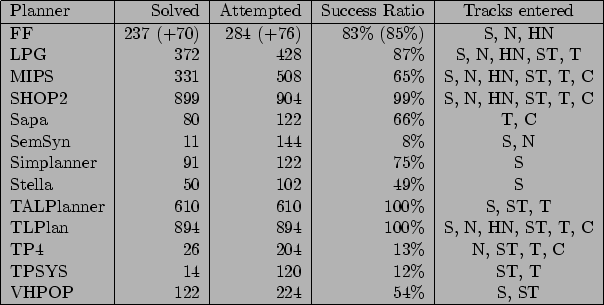

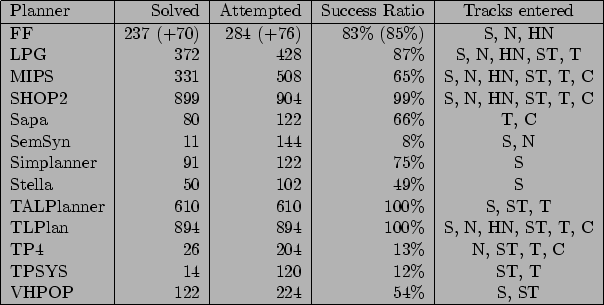

There were 508 problems available to the fully-automated planners and MIPS was the only planner to attempt all of them. There were 904 problems available to the hand-coded planners and SHOP2 was the only planner to attempt all of these, solving almost all of them and solving the most problems overall. TLPLAN and TALPLANNER were the only planners that solved all problems attempted. Not all planners attempted all problems they were equipped to handle. In particular, SAPA did not attempt STRIPS problems, although it is capable of solving them.

Although the planning competitions have been a great source of data from the state-of-the-art planners and an encouragement and catalyst for progress in the field, they are also lively and exciting competition events. This means that there must be ``winners''. The choice of winners is left to the organisers and must be treated with caution. In summarising the results at the AIPS conference in Toulouse, we presented a table of results in the form shown in Figure 1, together with a selection of graphs showing relative performance of planners in terms of speed and quality of plans in several of the problem sets. It was hard to synthesise a comprehensive view in the short time between final data collection and the presentation (a matter of a couple of days, during the conference), so our initial assessments were based on a rather crude evaluation of the evidence. We chose to award a prize to LPG as the best performer of the fully-automated planners: it solved the most problems of all fully-automated planners, showing excellent performance in the time tracks. We also awarded a prize to MIPS which solved the second most problems and had the widest coverage of all the fully-automated planners. It is clear that FF produced exceptional performance in the numeric level problems and could well have been judged worthy of a prize for that. We chose to acknowledge the great difficulty for newcomers to the competition in building a system that is sufficiently robust to compete, especially when there is no team of programmers and researchers to support the effort. For this reason we awarded a prize to VHPOP as best newcomer, with its creditable performance in both STRIPS and SIMPLETIME problems.

Turning to the hand-coded planners, we awarded a prize for best performance to TLPLAN, which tackled almost all of the problems available, solved all of those it attempted and produced plans of very high quality with dramatic speed. We also rewarded SHOP2 for the fact that it attempted every problem and produced more plans than any other planner. The quality of its plans was consistently good and its performance highly competitive. TALPLANNER also performed outstandingly, often producing the highest quality plans and doing so tremendously efficiently, but its coverage was restricted to the STRIPS and TIME tracks. In selecting prize winners we chose to emphasise breadth of coverage, particularly in the new challenges of handling numeric and temporal planning problems. Competitions demand a degree of spectacle in the selection of winners, but the more measured evaluation of planners takes time. In this paper we present various analyses of the data collected during the competition and leave it to the community to judge the final rankings of the planners.