Research Projects

Ontological Supervision for Fine Grained Classification of Street View Storefronts

Joint work with Qian Yu, Martin C. Stumpe, Vinay Shet, Sacha Arnoud, and Liron Yatziv

Modern search engines receive large numbers of business-related, local-aware queries. Such queries are best answered using accurate, up-to-date business listings that contain representations of business categories. Creating such listings is a challenging task, as businesses often change hands or close down. For businesses with street-side locations, one can leverage the abundance of street-level imagery, such as Google Street View, to automate the process. However, while data is abundant, labeled data is not; the limiting factor is the creation of large-scale labeled training data. In this work, we utilize an ontology of geographical concepts to automatically propagate business category information and create a large, multi-label training dataset for fine-grained storefront classification. Our learner, which is based on the GoogLeNet/Inception deep convolutional network architecture and classifies 208 categories, achieves human-level accuracy.
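The label propagation idea can be illustrated with a small sketch. It assumes that propagation means expanding each known category to include all of its ancestors in the ontology, so that one listing label yields a multi-label training target. The toy ontology and the function name `expand_labels` are hypothetical and are not taken from the paper.

```python
# Hypothetical toy ontology: child category -> parent category.
# These entries are invented for illustration only.
PARENT = {
    "italian_restaurant": "restaurant",
    "sushi_restaurant": "restaurant",
    "restaurant": "food_and_drink",
    "cafe": "food_and_drink",
    "pharmacy": "health",
}


def expand_labels(labels, parent=PARENT):
    """Return the given labels plus every ancestor reachable in the ontology."""
    expanded = set()
    for label in labels:
        node = label
        # Walk up the hierarchy until a root is reached, or until we meet a
        # node whose ancestors were already added by an earlier label.
        while node is not None and node not in expanded:
            expanded.add(node)
            node = parent.get(node)
    return expanded


print(sorted(expand_labels(["italian_restaurant"])))
# ['food_and_drink', 'italian_restaurant', 'restaurant']
```

With targets of this kind, a storefront labeled only as an Italian restaurant also counts as a positive example for the coarser categories above it.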

Paper:

Ontological Supervision for Fine Grained Classification of Street View Storefronts, CVPR 2015

Exemplar Based Methods for Viewpoint Estimation

Joint work with Vishnu Naresh Boddeti, Zijun Wei, and Yaser Sheikh

Estimating the precise pose of a 3D model in an image is challenging; explicitly identifying correspondences is difficult, particularly at smaller scales and in the presence of occlusion.

Exemplar classifiers have demonstrated the potential of detection-based approaches to problems where precision is required. In particular, correlation filters explicitly suppress classifier response caused by slight shifts in the bounding box. This property makes them ideal exemplar classifiers for viewpoint discrimination, as small translational shifts can often be confounded with small rotational shifts.
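The shift-suppression property can be seen in a minimal sketch. It uses a MOSSE-style closed-form filter trained on a single synthetic patch as a stand-in; this is not the filter formulation used in the paper, and the random patch, the regularizer `lam`, and the function names are all assumptions made for illustration.

```python
import numpy as np


def train_filter(image, target, lam=1e-3):
    """Closed-form single-exemplar correlation filter in the Fourier domain."""
    F = np.fft.fft2(image)
    G = np.fft.fft2(target)
    # Conjugate filter: G * conj(F) / (F * conj(F) + lam)
    return G * np.conj(F) / (F * np.conj(F) + lam)


def respond(image, H_conj):
    """Response map of the filter on an image of the same size."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * H_conj))


def peak(response):
    """Location (row, col) of the maximum response."""
    idx = np.unravel_index(np.argmax(response), response.shape)
    return tuple(int(i) for i in idx)


rng = np.random.default_rng(0)
exemplar = rng.standard_normal((32, 32))  # stand-in for an image patch

# Desired response: a narrow Gaussian peak centered at (16, 16).
ys, xs = np.mgrid[0:32, 0:32]
target = np.exp(-((ys - 16) ** 2 + (xs - 16) ** 2) / (2 * 1.5 ** 2))

H_conj = train_filter(exemplar, target)
aligned = respond(exemplar, H_conj)
# Circularly shift the patch three pixels to the right.
shifted = respond(np.roll(exemplar, (0, 3), axis=(0, 1)), H_conj)

print(peak(aligned), peak(shifted))  # (16, 16) (16, 19)
```

Because the desired response is sharply peaked, the score at the original location drops steeply once the patch is shifted by a few pixels, which is what lets the filter separate a small translation from a genuine change in viewpoint.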

However, exemplar-based pose-by-detection is not scalable because, as the desired precision of viewpoint estimation increases, the number of exemplars needed increases as well. We present a training framework to reduce an ensemble of exemplar correlation filters for viewpoint estimation by directly optimizing a discriminative objective. We show that the discriminatively reduced ensemble outperforms the state of the art on three publicly available datasets, and we introduce a new dataset for continuous car pose estimation in street scene images.

Data:

CMUcar Dataset

Paper:

Exemplar Based Methods for Viewpoint Estimation, BMVC 2014

Supplementary Material

Poster

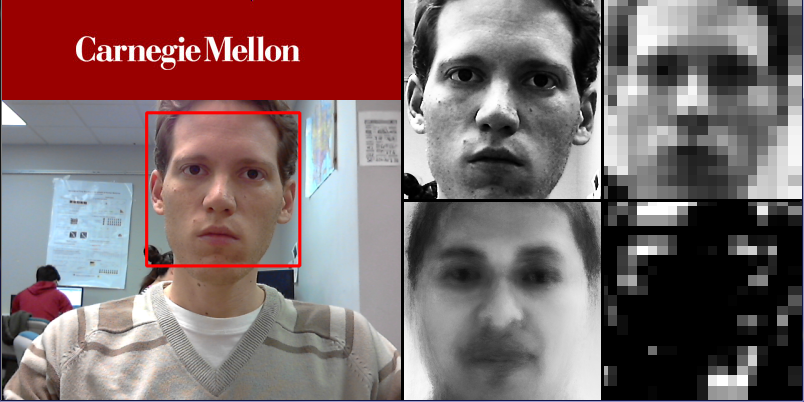

Visual Accessibility Through Computer Vision

Joint work with Karl Hellstern, Zijun Wei, Yaser Sheikh, and Takeo Kanade

We have built algorithms that improve the BrainPort vision device, which is manufactured by Wicab.

With this device, blind users can perceive the approximate size, shape, and location of objects in their surroundings. Visual information is gathered by a camera mounted on a pair of sunglasses. It is then translated into electric pulses that are delivered to the surface of the tongue.

We have designed a system that allows users of the BrainPort device to recognize faces.

The system detects faces in the image captured by the camera, compares them with a "prototypical" or "average" face, and produces a difference map that allows the user to literally feel what is unique about the face of the person in front of them.

A prototype of our system was depicted in an episode of the BBC show Frontline Medicine.
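The face comparison step described above can be sketched as follows. This is an illustration under stated assumptions, not the system's implementation: the face is assumed to be already detected, aligned, and the same size as the average face, images are grayscale lists of rows, and the output grid size is hypothetical rather than the resolution of the actual device.

```python
def difference_map(face, average_face):
    """Per-pixel absolute difference from the average face, rescaled to [0, 1]."""
    diff = [[abs(a - b) for a, b in zip(row_f, row_a)]
            for row_f, row_a in zip(face, average_face)]
    largest = max(max(row) for row in diff)
    if largest == 0:
        return diff  # identical to the average face: nothing to emphasize
    return [[v / largest for v in row] for row in diff]


def downsample(image, block):
    """Average non-overlapping block x block tiles to get a coarser grid."""
    rows, cols = len(image), len(image[0])
    return [[sum(image[r + i][c + j]
                 for i in range(block) for j in range(block)) / block ** 2
             for c in range(0, cols - block + 1, block)]
            for r in range(0, rows - block + 1, block)]


average_face = [[0.5] * 4 for _ in range(4)]
face = [row[:] for row in average_face]
for r in range(2):
    for c in range(2):
        face[r][c] = 0.9  # this face differs from the average in the top-left corner

tactile = downsample(difference_map(face, average_face), block=2)
print(tactile)  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the region where the face departs from the average produces a strong signal, so the coarse output emphasizes what is distinctive rather than what all faces share.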

Additionally, we have created an Android app that can detect various signs (such as restroom, EXIT, etc.) and guide the visually impaired user to the location of the sign using vibration signals.

Poster:

QoLT NSF Site Visit

Media:

Segment from the BBC's "Frontline Medicine"

More on the project at CMU's website

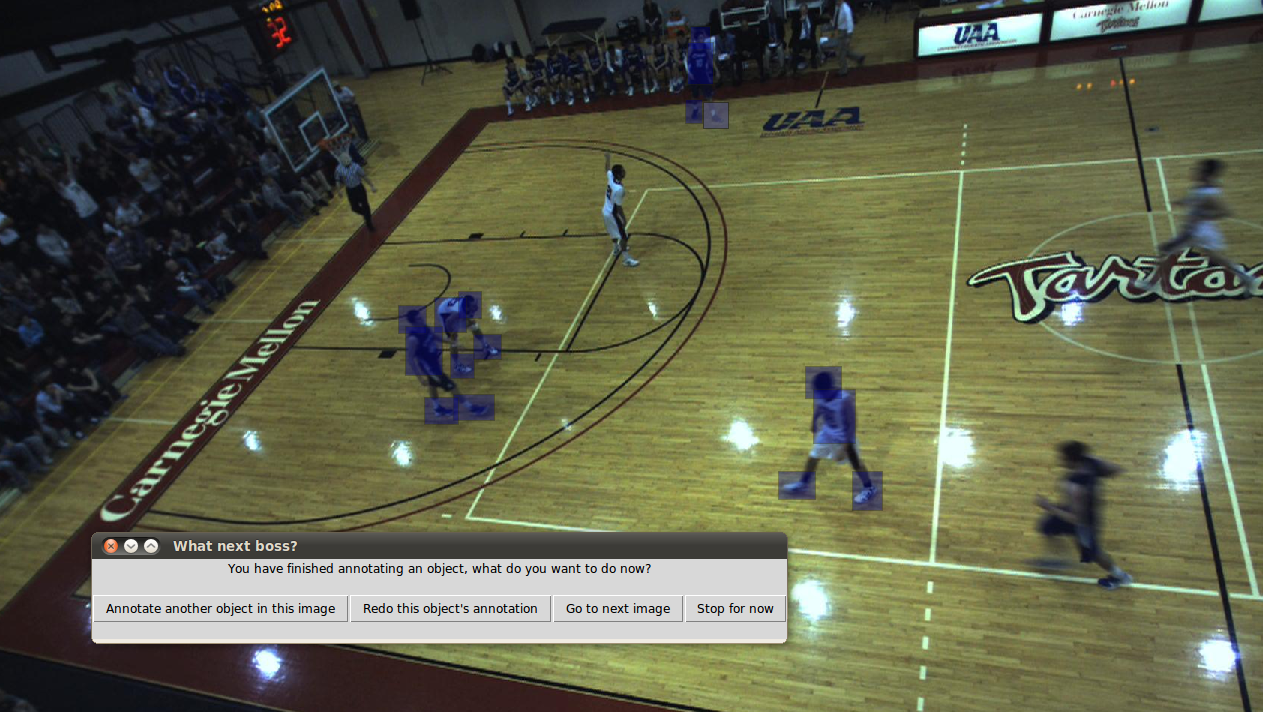

Persistent Particle-Filters For Background Subtraction

Joint work with Shmuel Peleg

Moving objects are usually detected by measuring the appearance change from a background model. The background model should adapt to slow changes such as illumination, but detect faster changes caused by moving objects. Particle filters do an excellent job of modeling the non-parametric distributions needed for a background model, but may adapt too quickly to foreground objects.

A persistent particle filter is proposed, following bacterial persistence. Bacterial persistence is linked to the random switch of bacteria between two states: a normal growing cell and a dormant but persistent cell. The dormant cells can survive stress such as antibiotics. When a dormant cell switches to a normal status after the stress is over, bacterial growth continues.

Similar to bacteria, particles will switch between dormant and active states, where dormant particles will not adapt to the changing environment. A further modification of particle filters allows discontinuous jumps into new parameters, enabling foreground objects to join the background when they stop moving. This can also quickly build multi-modal distributions.
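The dormant/active mechanism can be sketched for a single pixel as follows. This is a simplified illustration and not the algorithm from the thesis or the paper: the switching probabilities, the Gaussian likelihood, the jump rule, and all names (`step`, `support`, `p_sleep`, `p_wake`, `p_jump`) are assumptions chosen for the example.

```python
import math
import random


def step(particles, obs, rng, p_sleep=0.05, p_wake=0.05, p_jump=0.1,
         sigma=5.0, drift=1.0):
    """Advance one pixel's particle set by one frame.

    particles is a list of (value, dormant) pairs; obs is the observed intensity.
    """
    # 1. Each particle randomly switches between the active and dormant states.
    switched = []
    for value, dormant in particles:
        if dormant:
            dormant = rng.random() >= p_wake   # stays dormant unless it wakes
        else:
            dormant = rng.random() < p_sleep   # an active particle may fall asleep
        switched.append((value, dormant))

    # 2. Active particles are weighted by the observation and resampled.
    active = [v for v, d in switched if not d]
    resampled = []
    if active:
        weights = [math.exp(-0.5 * ((v - obs) / sigma) ** 2) + 1e-12 for v in active]
        resampled = rng.choices(active, weights=weights, k=len(active))

    # 3. Dormant particles keep their value; active ones diffuse or jump.
    out, it = [], iter(resampled)
    for value, dormant in switched:
        if dormant:
            out.append((value, True))
            continue
        value = next(it)
        if rng.random() < p_jump:
            value = obs                        # discontinuous jump to the new value
        else:
            value += rng.gauss(0.0, drift)     # small random drift
        out.append((value, False))
    return out


def support(particles, obs, radius=10.0):
    """Fraction of particles whose value lies within radius of obs."""
    return sum(abs(v - obs) <= radius for v, _ in particles) / len(particles)


rng = random.Random(0)
particles = [(100.0, False)] * 100
for _ in range(50):                  # static background at intensity 100
    particles = step(particles, 100.0, rng)
for _ in range(10):                  # an object of intensity 200 passes by
    particles = step(particles, 200.0, rng)

print(support(particles, 100.0), support(particles, 200.0))
```

In this sketch, the particles that were dormant while the object passed still hold the old background value, so the model keeps support near 100 even though the active particles have moved to 200.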

M.Sc. Thesis:

Persistent Particle-Filters For Background Subtraction

Paper:

Bacteria Filters: Persistent Particle-Filters For Background Subtraction, ICIP 2010

HANS - HUJI's Autonomous Navigation System

Joint work with Keren Haas, Dror Shalev, Nir Pochter, Zinovi Rabinovich, and Jeff Rosenschein

I worked on HANS alongside Keren Haas and Dror Shalev as a final project for the Computer Engineering program. Our advisors were Prof. Jeff Rosenschein, Nir Pochter, and Zinovi Rabinovich.

HANS won the Computer Engineering School's 2008 "Best Computer Engineering Project" award.

Building autonomous robots that can operate in various scenarios has long been a goal of research in Artificial Intelligence. Recent progress has been made on space exploration systems and systems for autonomous driving. In line with this work, we present HANS, an autonomous mobile robot that navigates the Givat Ram campus of the Hebrew University. We have constructed a wheel-based platform that holds various sensors. Our system's hardware includes a GPS, compass, and digital wheel encoders for localizing the robot within the campus area. Sonar is used for fast obstacle avoidance. The robot also employs a video camera for vision-based path detection. HANS' software uses a wide variety of probabilistic methods and machine learning techniques, ranging from particle filters for robot localization to Gaussian Mixture Models and Expectation Maximization for its vision system. Global path planning is performed on a GPS coordinate database donated by MAPA Ltd., and local path planning is implemented using the A* algorithm on a local grid map constructed by the robot's sensors.
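Local planning with A* on a grid map can be sketched as follows. This is a generic textbook version and not the HANS code: the 4-connected moves, unit step cost, Manhattan heuristic, and the 0/1 occupancy encoding are assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle).

    Returns a list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap,
                                   (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
print(len(path) - 1)  # 8 moves around the wall
```

On a robot, the grid would be rebuilt from sensor readings as obstacles appear, and the search rerun from the current cell.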

Documents:

HANS - HUJI's Autonomous Navigation System, Project Book

Poster

Blog:

This blog was used to record the development process

Video segmentation of unknown, static background using min-cut

Joint work with Shmuel Peleg

The algorithm has been developed as part of 'guided work' that took place during the spring semester of 2007 under the supervision of Prof. Shmuel Peleg.

In this report we describe an algorithm for foreground layer extraction based on EM learning and min-cut. The background is unknown but assumed to be static, and the foreground is therefore defined as the dynamic part of the frame. From a single video stream, our algorithm uses color cues as well as information from image contrast, that is, the color differences between adjacent pixels, to cut out the foreground layer. Experimental results show that the accuracy is good enough for most practical uses.
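The min-cut step can be sketched on a single row of pixels. This is an illustration under assumptions, not the report's implementation: the per-pixel costs are taken as given (in the report they would come from the learned color models), the contrast term is supplied directly as a list of link weights, and the max-flow solver is a plain Edmonds-Karp written for brevity. The function name `segment_row` and all numbers are hypothetical.

```python
from collections import defaultdict, deque


def segment_row(fg_cost, bg_cost, smooth):
    """Label a 1D row of pixels by minimum cut (Edmonds-Karp max-flow).

    fg_cost[i]: penalty for labeling pixel i foreground.
    bg_cost[i]: penalty for labeling pixel i background.
    smooth[i]:  penalty for giving pixels i and i + 1 different labels
                (assumed smaller where the contrast between them is high).
    Returns (labels, cut_cost), where label 1 means foreground.
    """
    n = len(fg_cost)
    source, sink = n, n + 1
    cap = defaultdict(lambda: defaultdict(int))
    for i in range(n):
        cap[source][i] += bg_cost[i]  # cut when pixel i ends up background
        cap[i][sink] += fg_cost[i]    # cut when pixel i ends up foreground
    for i, w in enumerate(smooth):
        cap[i][i + 1] += w
        cap[i + 1][i] += w

    cut_cost = 0
    while True:
        # Breadth-first search for an augmenting path in the residual graph.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            break  # no path left: parent now holds the source side of the cut
        # Find the bottleneck capacity along the path, then push that flow.
        flow, v = float("inf"), sink
        while parent[v] is not None:
            flow = min(flow, cap[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:
            u = parent[v]
            cap[u][v] -= flow
            cap[v][u] += flow
            v = u
        cut_cost += flow

    labels = [1 if i in parent else 0 for i in range(n)]
    return labels, cut_cost


# Hypothetical integer costs for six pixels; pixel 3 is ambiguous on its own.
bg_cost = [1, 2, 9, 4, 9, 1]
fg_cost = [9, 8, 1, 6, 1, 9]
smooth = [3, 1, 3, 3, 1]  # weak links where contrast is high (object boundary)
labels, cost = segment_row(fg_cost, bg_cost, smooth)
print(labels, cost)  # [0, 0, 1, 1, 1, 0] 14
```

Pixel 3 would be labeled background if judged alone, but its strong links to the foreground pixels on either side make it cheaper to keep it in the foreground, while the weak links at high-contrast boundaries let the cut pass there.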