Abstract

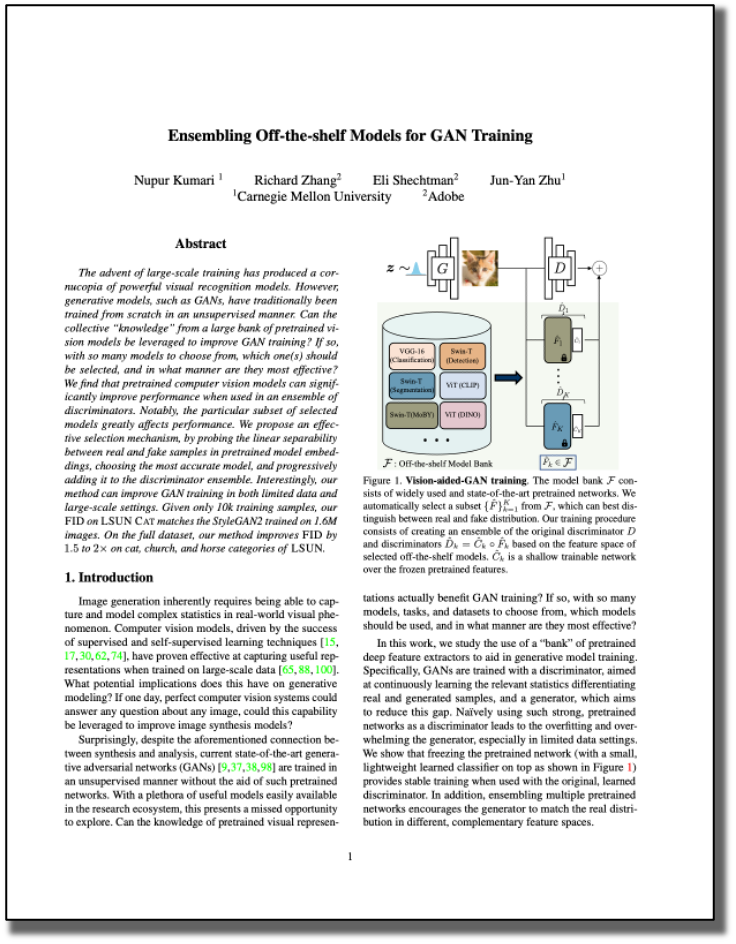

The advent of large-scale training has produced a cornucopia of powerful visual recognition models. However, generative models, such as GANs, have traditionally been trained from scratch in an unsupervised manner. Can the collective "knowledge" from a large bank of pretrained vision models be leveraged to improve GAN training? If so, with so many models to choose from, which one(s) should be selected, and in what manner are they most effective? We find that pretrained computer vision models can significantly improve performance when used in an ensemble of discriminators. Notably, the particular subset of selected models greatly affects performance. We propose an effective selection mechanism, by probing the linear separability between real and fake samples in pretrained model embeddings, choosing the most accurate model, and progressively adding it to the discriminator ensemble. Interestingly, our method can improve GAN training in both limited data and large-scale settings. Given only 10k training samples, our FID on LSUN Cat matches the StyleGAN2 trained on 1.6M images. On the full dataset, our method improves FID by 1.5x to 2x on cat, church, and horse categories of LSUN.

Paper

arxiv:2112.09130 , 2021.

Citation

Nupur Kumari, Richard Zhang, Eli Shechtman, Jun-Yan Zhu. "Ensembling Off-the-shelf Models for GAN Training", in CVPR 2022.

Bibtex

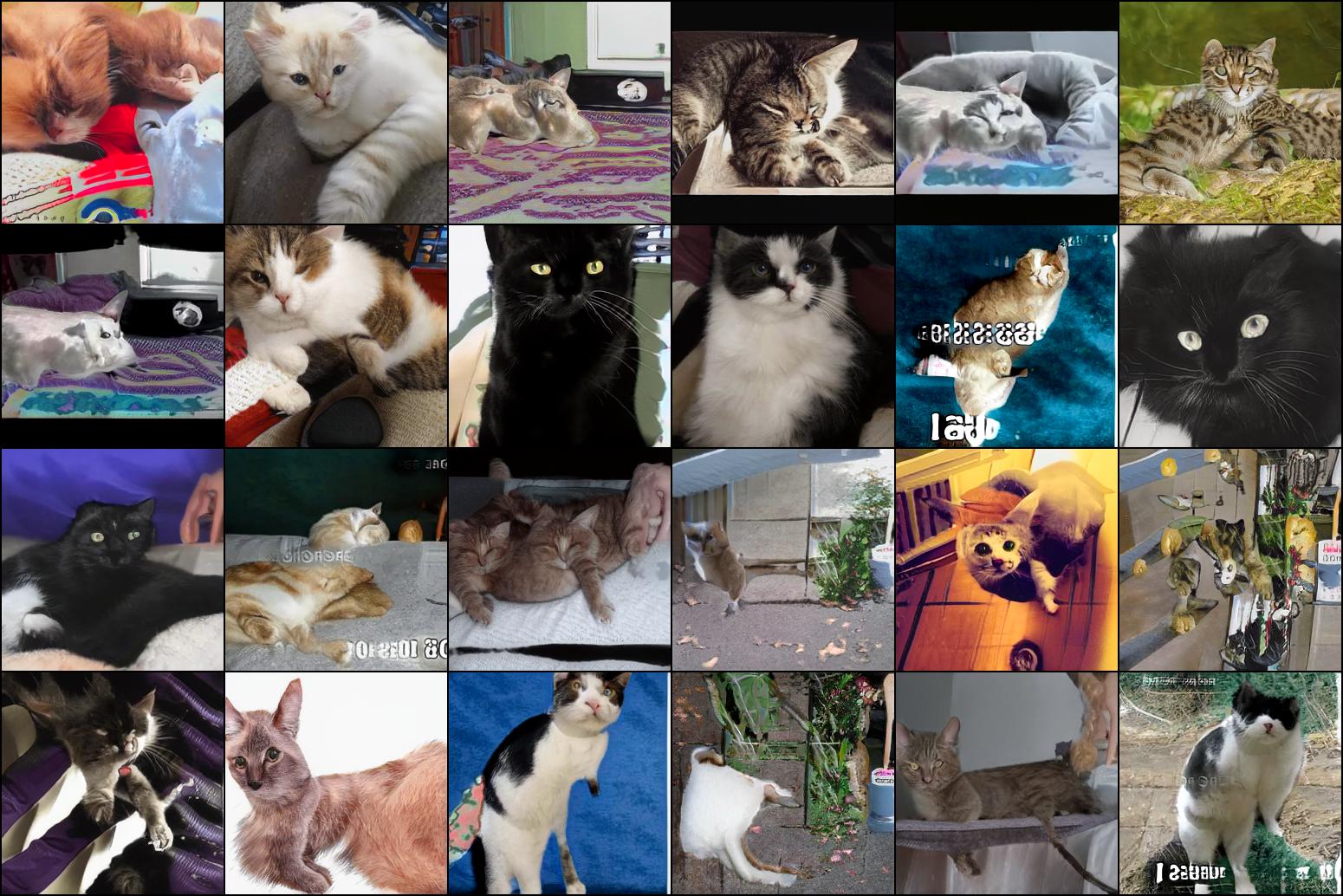

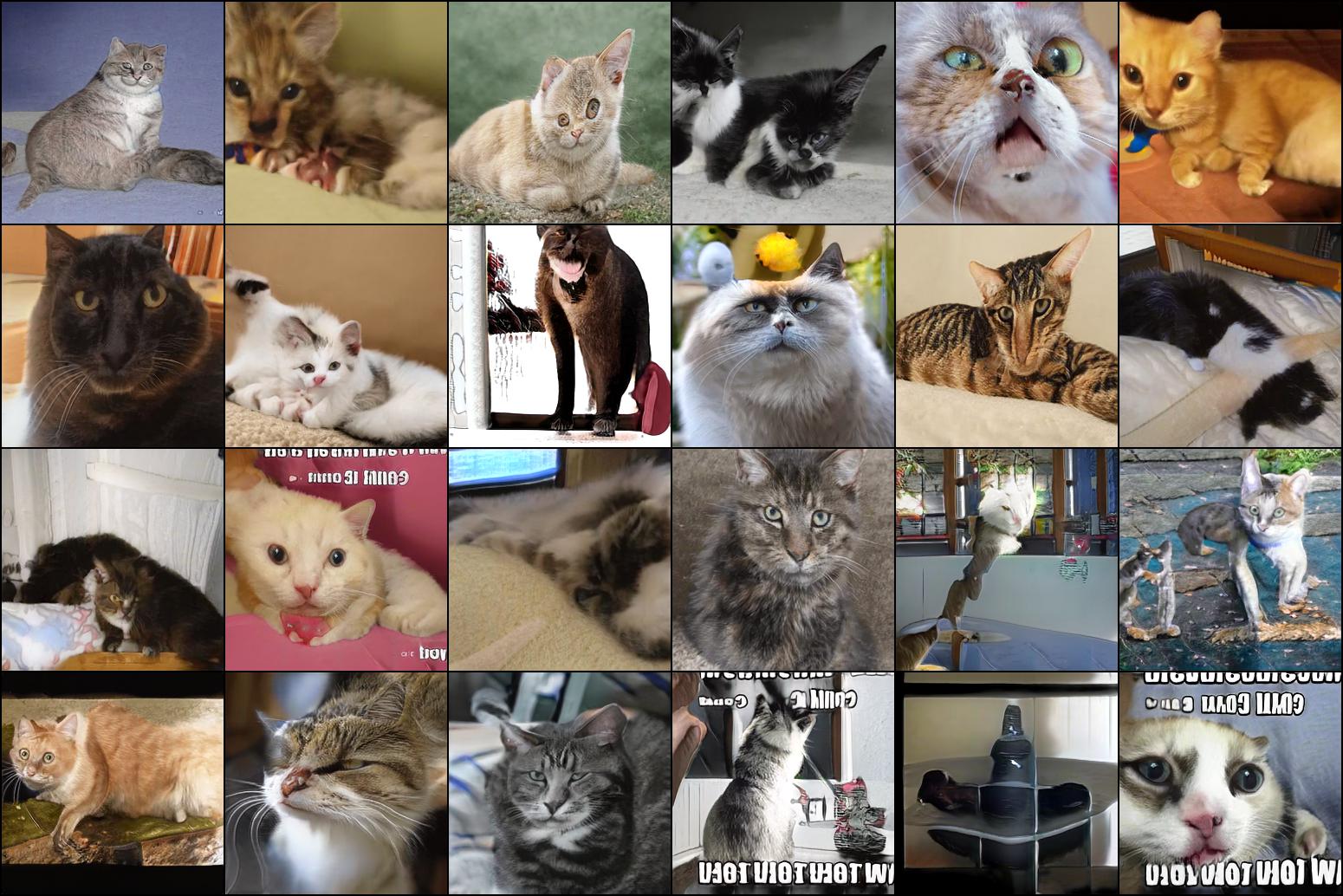

Our method outperforms recent GAN training methods by a large margin, especially in limited sample setting. For LSUN Cat, we achieve similar FID as StyleGAN2 trained on the full dataset using only 0.7% of the dataset. On the full dataset, our method improves FID by 1.5x to 2x on LSUN Cat and LSUN Church.

We show generated images by interpolation between latent codes for model trained by our method on AnimalFace Cat (160 images), Dog (389 images), and Bridge-of-Sighs (100 photos).

We finetune StyleGAN2-ADA with our method and show randomly generated images for the same latent code by StyleGAN2-ADA and Ours.

FFHQ

1k training samples

2k training samples:

LSUN Cat

LSUN Church

We randomly sample 5k images and sort them according to Mahalanobis distance using mean and variance of real samples calculated in inception feature space. Below visualization shows the top and bottom 30 images according to the distance.

StyleGAN2-ADA

Ours

- Diana Sungatullina, Egor Zakharov, Dmitry Ulyanov, and Victor Lempitsky. Image Manipulation with Perceptual Discriminators. In ECCV 2018.

- Tero Karras, Miika Aittala, Janne Hellsten, Samuli Laine, Jaakko Lehtinen, and Timo Aila. "Training generative adversarial networks with limited data". In NeurIPS 2020.

- Shengyu Zhao, Zhijian Liu, Ji Lin, Jun-Yan Zhu, and Song Han. "Differentiable Augmentation for Data-Efficient GAN Training." In NeurIPS 2020.

- Axel Sauer, Kashyap Chitta, Jens Muller, and Andreas Geiger. "Projected GANs Converge Faster." In NeurIPS 2021.

- Puneet Mangla, Nupur Kumari, Mayank Singh, Balaji Krishnamurthy, and Vineeth N. Balasubramanian. Data instance prior (DISP) in generative adversarial networks. In WACV 2022.

Acknowledgment

We thank Muyang Li, Sheng-Yu Wang, Chonghyuk (Andrew) Song for proofreading the draft. We are also grateful to Alexei A. Efros, Sheng-Yu Wang, Taesung Park, and William Peebles for helpful comments and discussion. The work is partly supported by Adobe Inc., Kwai Inc, Sony Corporation, and Naver Corporation. Also, thanks to Kangle Deng for the website template.