Samarjit Das

I am a postdoctoral research fellow at the Robotics Institute, Carnegie Mellon University, working with Prof. Jessica Hodgins and Prof. Fernando De la Torre. I am a member of the CMU Computer Vision Group and the CMU Graphics Lab. Before that, I completed my PhD in Electrical and Computer Engineering at Iowa State University, working with Prof. Namrata Vaswani. Here's a copy of my CV and a complete list of publications.

Research Interests

I am interested in statistical signal processing and machine learning for perception tasks involving visual data as well as other sensor modalities such as inertial measurement units (IMUs), motion capture, and physiological sensors. More broadly, my work addresses the challenges of sensing and intelligent decision making in real-world cyber-physical systems (CPS). My past and present research topics include:

Computer Vision: visual context for motion interpretation, motion activity modeling and tracking from visual inputs, abnormality detection, and robust visual tracking under dynamic scene parameters

Statistical Signal Processing: sequential Monte Carlo techniques and Bayesian filtering on large-dimensional state spaces, stochastic deformable shape models, and sparse tracking with sequential compressive sensing

Machine Learning: weakly supervised learning for discriminative pattern segmentation and classification in time-series data, and model switching and change detection in complex high-dimensional systems

Selected Projects (complete list of publications)

Deformable Shape Models for Motion Activities

Proposed novel stochastic shape deformation models for tracking human body activities in videos, with applications such as automatic landmark extraction and abnormality detection.

IEEE PAMI

Particle Filter with Mode Tracking for Tracking Across Illumination Changes

Proposed efficient particle filtering algorithms to handle large-dimensional state spaces and multimodal observation likelihoods, with applications to robust visual tracking under changing illumination (with Siemens Research).

IEEE TIP
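For readers less familiar with particle filtering, here is a minimal sketch of a standard bootstrap (sampling-importance-resampling) particle filter on a toy model. It is not the PF-MT algorithm itself; it only shows the sample, weight, and resample loop that efficient variants aim to keep tractable on large-dimensional state spaces. The 1-D random-walk model, the Gaussian noise levels, the prior, and the function name are illustrative assumptions.

```python
import numpy as np


def bootstrap_particle_filter(observations, n_particles=1000, process_std=1.0,
                              obs_std=1.0, seed=0):
    """Bootstrap (SIR) particle filter for a hypothetical 1-D random-walk model:
    x_t = x_{t-1} + process noise,  y_t = x_t + observation noise.
    Returns the weighted-mean estimate of x_t at each time step."""
    rng = np.random.default_rng(seed)
    particles = rng.normal(0.0, 1.0, n_particles)  # assumed prior N(0, 1)
    estimates = []
    for y in observations:
        # 1. Sample: propagate each particle through the state-transition prior.
        particles = particles + rng.normal(0.0, process_std, n_particles)
        # 2. Weight: Gaussian observation likelihood, computed in the log domain
        #    and shifted by its max so exp() cannot underflow to all zeros.
        log_w = -0.5 * ((y - particles) / obs_std) ** 2
        w = np.exp(log_w - log_w.max())
        w /= w.sum()
        estimates.append(np.sum(w * particles))
        # 3. Resample: multinomial resampling to combat weight degeneracy.
        idx = rng.choice(n_particles, size=n_particles, p=w)
        particles = particles[idx]
    return np.array(estimates)
```

With a constant observation sequence such as `[5.0] * 30`, the estimates move from the prior toward 5. In high-dimensional or multimodal settings this naive sampler needs far more particles, which is the problem the project targets.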

Wearable Sensing for Computational Emergency Medicine

Developing cyber-physical systems to improve emergency care in challenging settings (e.g., road-side, air-ambulance). We used motion capture, wearable IMUs, and camera-enabled medical instruments for visuo-motor analysis of paramedic performance.

In collaboration with UPMC and STAT Air-ambulance

SIH Medical Journal, IEEE ICASSP

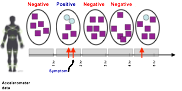

Multiple Instance Learning for Surprising Movement Pattern Detection in Uncontrolled Environments

The wearable IMU-based system relies on a weakly supervised approach because ground-truth labels are incomplete, a major bottleneck when adapting supervised learning to wearable monitoring during daily living.

IEEE EMBC
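Multiple instance learning copes with incomplete labels by labeling bags of instances instead of individual instances. For example, a recording might be labeled as containing a surprising movement without saying which window contains it. The sketch below shows only the standard MIL bag-labeling rule (a bag is positive if at least one instance is positive) with a hypothetical linear instance scorer; it is not the training procedure from the EMBC work, and the weights, bias, and threshold are assumptions.

```python
import numpy as np


def bag_scores(bags, w, b=0.0):
    """Score each bag under the standard MIL assumption: a bag is positive if
    at least one instance is positive, so the bag score is the max instance score.
    `w` and `b` define a hypothetical linear instance scorer; each bag is an
    (n_instances, n_features) array, e.g. feature vectors of IMU windows."""
    return np.array([np.max(bag @ w + b) for bag in bags])


def predict_bags(bags, w, b=0.0, threshold=0.0):
    """Label a bag positive (1) if its highest instance score exceeds the threshold."""
    return (bag_scores(bags, w, b) > threshold).astype(int)
```

Using the max over instances means a single strongly scored window is enough to flag the whole recording, which matches the weak, bag-level labels available in daily-living data.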

Visual Context for Motion Interpretation: Visuo-motor Analysis in Wearable CPS for Activity Monitoring

We use wearable cameras and vision-based functional object categorization to provide visual context for motion understanding. Our method uses a Segmentation-Classification support vector machine for weakly supervised segmentation and clustering of temporal events.

Ongoing work

Sparse Tracking with MCMC and Sequential Compressive Sensing: Robust Visual Tracking

This project combines sequential Monte Carlo sampling with compressive sensing under sparsity constraints. We demonstrate robust tracking of complex illumination patterns in real-life videos.

IEEE TIP (under review), Asilomar
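The compressive sensing side of this project rests on recovering a sparse vector from relatively few linear measurements. As a generic illustration (not the sequential algorithm from the paper), here is iterative shrinkage-thresholding (ISTA) for the l1-regularized least-squares problem. The measurement matrix, regularization weight, iteration count, and function names are illustrative assumptions.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink each entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista(A, y, lam=0.01, n_iter=3000):
    """Generic ISTA for  min_x 0.5 * ||y - A x||_2^2 + lam * ||x||_1.
    `lam` and `n_iter` are illustrative defaults, not tuned values."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)             # gradient of the least-squares term
        x = soft_threshold(x - step * grad, step * lam)
    return x
```

With a random Gaussian matrix of 40 rows and 100 columns and a 3-sparse signal, ISTA typically recovers the correct support from the 40 noiseless measurements. The sequential setting adds the idea of reusing information from previous frames, which this sketch does not model.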

Vision-based Video Content Analysis for Interactive Laryngoscopy

Video laryngoscopy can help paramedics performing endotracheal intubation (ETI), a critical emergency procedure. We are developing a system that integrates computer vision capabilities into video laryngoscopes to provide better real-time guidance.

NAEMSP

CPR Assessments Without Body Contact: Smartphone for Bystander Assistance in Medical Emergencies

We have developed a system that extracts low-level, vision-based motion features from raw smartphone video and uses them to classify the CPR chest compression rate in real time to assist the provider. The phone rests on the ground, and no straps are needed.

NAEMSP; patent filing in progress
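A common way to estimate the rate of a periodic motion is to locate the dominant frequency of its spectrum. The sketch below does this for a hypothetical 1-D per-frame motion feature sampled at the video frame rate, then bins the rate into feedback classes. It is a generic spectral approach, not the classifier from the NAEMSP work. The 40-200 per minute search band and the 100-120 compressions-per-minute target band are assumptions, with the target band borrowed from common resuscitation guidelines.

```python
import numpy as np


def compression_rate_cpm(motion, fps, min_cpm=40.0, max_cpm=200.0):
    """Estimate the dominant periodic rate (cycles per minute) of a hypothetical
    1-D per-frame motion signal from the peak of its magnitude spectrum,
    searching only within an assumed plausible CPR band."""
    x = np.asarray(motion, dtype=float)
    x = x - x.mean()                                   # remove DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs_cpm = np.fft.rfftfreq(len(x), d=1.0 / fps) * 60.0
    band = (freqs_cpm >= min_cpm) & (freqs_cpm <= max_cpm)
    return freqs_cpm[band][np.argmax(spectrum[band])]


def classify_rate(cpm, low=100.0, high=120.0):
    """Bin the rate into feedback classes; the 100-120/min band is an assumed target."""
    if cpm < low:
        return "too slow"
    if cpm > high:
        return "too fast"
    return "adequate"
```

The frequency resolution is `fps / len(motion)` Hz, so a 10-second window at 30 frames per second resolves the rate to about 6 compressions per minute. Longer windows trade responsiveness for precision.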

Information Hiding Inside Structured Shapes

We developed a low-level image processing and vision-based detector that extracts information hidden in structured shapes as subtle, imperceptible deformations. Computer graphics tools such as Adaptively Sampled Distance Fields (ADFs) were used to render the data-embedded shapes (at MERL).

IEEE ICASSP; patent filed

Motion Capture For Quantitative Measurement of Movement Anomalies

This project used a full-body optical motion capture system with 16 infrared cameras to quantitatively assess and predict movement abnormalities in people with implanted deep brain stimulators.

IEEE EMBC

Particle Filters on Large Dimensional State Spaces and Applications in Computer Vision

Advisor: Namrata Vaswani, Iowa State University

Dissertation