The Effects of Simple May-Alias Analysis in L3

David Abraham (dabraham)

Liz Crawford (ehc)

Sue Ann Hong (sahong)

Virginia Vassilevska (virgi)

Project Proposal

Final Report

Poster (ppt)

Project Proposal

- Introduction

- Approach

Our project will focus on intraprocedural may-alias analysis. We plan to first implement simple may-alias analysis as presented in Muchnick (p.297-314). However, a simple may-alias analysis is naive and still overly conservative. Hence we hope to also implement extensions of the simple algorithm such as the one given by Goyal [3]. We will compare the effectiveness of our implementations to using no alias analysis on optimizations such as dead code elimination, common subexpression elimination, and loop invariant hoisting. We plan to use the Java implementation of the L3 compiler. In addition to implementing may-alias analysis algorithms, we may implement further optimizations similar to those in tasks 1 and 2 as the test cases for how effectively alias analysis can improve those optimizations. We will adapt test programs from the original L3 test suite that contain memory optimizations and create our own suite to use to compare run-time performances. Our fall-back plan is to at least implement simple may-alias analysis and compare the performance to no alias analysis.

- Timeline

4/5 - 4/12 Study algorithms. Familiarize with Java compiler. Construct test programs. 4/12 - 4/19 Implement simple may-alias analysis, modify optimization code from tasks 1 & 2 4/19 - 4/26 Keep implementing and debug. 4/26 - 5/3 Add extensions to the simple may-alias analysis, run tests, write ups. - References

- [1] S. Muchnick, Advanced Compiler Design and Implementation. Morgan Kaufmann Publishers, 1997.

- [2] Ghiya, Lavery, and Sehr. On the importance of points-to analysis and other memory disambiguation methods for C programs. PLDI'01.

- [3] Goyal, D. An improved intra-procedural may-alias analysis algorithm. Tech. Rep. TR1999777, New York University. Feb.1999.

- [4] J. Wu. Survey of Alias Analysis [http://www.cs.princeton.edu/~jqwu/Memory/survey.html].

Aliasing occurs when a storage location can be accessed in two or more ways. Without a formal model of alias information, many compiler optimizations are overly conservative. For example, in Common Subexpression Elimination (CSE), any assignment to a memory location kills the availability of all other memory expressions. Alias information can also be used to improve the performance of Dead-Code Elimination (DE), and Instruction Scheduling (IS). In this project, we will investigate the improvements that can be gained by implementing an alias analysis.

Final Report

Abstract

In this project, we study the effects of simple may alias analysis in the L3 compiler. Alias analysis can potentially increase the effectiveness of many compiler optimization techniques. In this report, we examine how it affects the performance of Constant Propagation (ConstP) and Common Sub-Expression Elimination (CSE). We have implemented these optimizations to take advantage of alias information. We have also implemented Constant Folding, since this is required to asses the benefits of ConstP, and Copy Propagation (CopyP) to clean up the unnecessary copies produced by CSE.

1. Introduction

Aliasing occurs when a storage location can be accessed in two or more ways. Without a formal model of alias information, many compiler optimizations are overly conservative. For example, in Common Subexpression Elimination (CSE), any assignment to a memory location kills the availability of all other memory expressions. Alias analysis seeks to model the memory locations variables can be associated with.

Formally, we say that *p and *q are aliases if they point to the same storage location. However, tracking the locations a pointer refers to can be difficult. As such, we also define a weaker notion of aliasing. Pointers *p and *q may-alias each other if they may point to the same location.

Alias analysis is an ongoing area of research in Optimizing Compilers. There are a number of different types of approaches that have been considered. Here we outline some of the major differences between the approaches.

Flow Insensitive versus Flow Sensitive

A flow insensitive analysis is designed to be correct for all possible execution paths of the program. For instance, if at some lin *p can be aliased by *q on one entry path to the line, then we simply record that at the line *p and *q may-alias. A flow sensitive analysis is more detailed. It would record on which control paths the two pointers may-alias and on which they do not. This more detailed analysis could potentially be used by flow sensitive optimization techniques.

Intra-procedural versus Inter-procedural

In intra-procedural alias analysis, if a pointer is assigned to a function call, then we do not analyze the memory usage of the procedure. Rather, we simply say that the pointer may alias all other memory references. In an inter-procedural analysis (e.g., [3]), the function call is analyzed in terms of its parameters, the globals it accesses, and the other functions it calls. This leads to more precise may alias information.

Context Insensitive versus Context Sensitive

In a context sensitive alias analysis (e.g.,[2]), functions are analyzed at every call sight with the specific input parameters for that call. A context insensitive algorithm just analyses each function once. Context sensitive algorithms can result in more accurate alias information.

For more information on the different types of alias analysis algorithms we direct the reader to [4].

For this project, we have implemented simple may-alias analysis. Our alias analysis is flow insensitive, intra-procedural and context-insensitive. We outline this algorithm in Section 2. In Section 3 we describe each of the optimizations that we have implemented. In particular we discuss how these optimizations use the may-alias information. In Section 4 we describe the experiments we have conducted to understand the effects of our may-alias analysis. In Section 5 we discuss the results.

2. The May-Alias Algorithm

In L3, the function alloc(num, type) is the only provision for memory allocation. This function allocates an array of num * sizeof(type) words. We track each static call to alloc with a unique base label, as well as a record of the array's length. (Whenever an array length is determined dynamically, we conservatively record the length as infinity.)

For each statement in the IR, we record the set of memory locations to which each temp may point. A memory location is specified by an array base label (see above), together with an offset. So abstractly, we maintain a set of triples (p, b, o), where p is a temp, b is a base label and o is an offset. We can determine if two temps p and q may alias each other by testing if p and q are contained in triples with a common base and offset.

We construct an IN and OUT set of triples for each statement.

IN is just the union of the OUT sets of the predecessors of this statement.

OUT is the result of a transfer function applied to the IN set.

The transfer function has several cases, one for each type of statement. We give some of the main cases below: (Note that All(p) refers to all possible triples (over all bases and offsets) with p as a first component.)

- statement: p = q

OUT = IN - All(p) + {(p, b, o) | (q, b, o) in IN} - statement: p = q + k, where k is a constant

OUT = IN - All(p) + {(p, b, o) | (q, b, o + k) in IN, o + k <= length(b)} - statement: p = MEM(q)

OUT = IN + All(p) - statement: p = alloc(len, int) // static alloc label is b

OUT = IN - All(p) + {(p, b, 0)} - statement: p = f(...)

OUT = IN + All(p)

Remarks

- Representing the IN and OUT sets as a set of triples, as described above, is not feasible in practice. For example, if an array is long, or we cannot determine its length statically, then generating All(p) is at best inefficient. So instead, we represent these sets in a more intelligent manner. The interested reader is encouraged to view the source code commentary for details of this representation

- We could make our alias analysis less conservative by keeping track of type information from the l3 source in the IR. For example, whenever OUT = IN + All(p), p can only point into arrays of the same type as p. Rather than add all triples involving p, we could correctly add only those triples where the array and p have the same type. Although this would improve the alias analysis, we felt that this additional information would not be useful to other optimizations, since L3 is already strongly typed.

- If the L3 source code contains a wrapper function around the basic function alloc, and all memory allocations are through this wrapper, then our alias analysis will not perform well (see case 5 above). To improve this, we would need to implement an interprocedural analysis. This would also see improvements for other calls to functions as well, but was beyond the scope of our project.

- We only keep track of first level variables. So, for example, we do not maintain triples with first component MEM(p). This means we are overly conservative in case 3 above. There is potentially much scope for improvement here, but this was beyond the scope of our project.

- The may-alias algorithm presented here is based on the algorithm given in [1].

3. Optimizations Studied

Constant Folding and Constant Propagation

Constant folding is an optimization that deals with evaluating binary operations on constants during compile time. More generally, if x=BINOP(c1, c2) where c1 and c2 are constants, the compiler evaluates BINOP(c1, c2) to the appropriate constant c3 and replaces the statement with x=c3. Alias analysis has little impact on constant folding. It can, however, help constant propagation. Constant propagation deals with assignments of the form x=c where c is a constant. It finds statements which use x, for example:- function calls to which x is a parameter,

- assignments, the right hand side of which uses x, or

- MEM(x)=...

- all definitions of MEM(x) that reach S set MEM(x) to c, and

- no MEM(y) such that y may alias x gets modified along a path from a reaching definition of MEM(x) to S

s:MEM(x)=RHS:

- gen(s) = {s} if RHS is a constant, and is empty otherwise;

- kill(s) contains all assignments of the form MEM(y)=c where x may alias y

Common Sub-expression Elimination

Common sub-expression elimination can decrease the running time of a program by reducing the number of times an expression is evaluated. Instead of computing a recurring expression again and again, one may store the result at the first occurence of the expression into a temporary variable and replace the following instances of the expression with the temporary variable when it is safe to do so. To identify such safe instances, one may utilize a standard available expressions dataflow analysis, which gathers the information at each point in the program of what expressions are available there, i.e., have not changed since they were evaluated last. If an expression is both used and available at a point in the program, then one may use the stored value instead of evaluating the expression again.

Without alias analysis, we are forced to employ a conservative version of available expressions as described in [5], where when an assignment occurs to a memory location, i.e., of the form MEM[x] = ..., all expressions involving memory access are killed (i.e, no longer available after the assignment statement). However, given such an assignment to a memory location, alias analysis enables us to only kill those expressions involving memory accesses to locations that may alias x, thereby letting us identify a larger set of available expressions.

Note that we convert the intermediate representation to a three-address form to facilitate the task of common sub-expression elimination. Our three address form only allows functions calls with temporary variables or constants, a binary operation with temporary variables or constants, and a memory operation with a temporary variable to be on the right hand side of a MOVE operation.

Copy Propagation

A copy statement has the form:

x := y

Such statements arise from the code generation step. For

instance, suppose there is a statement in the source similar to the following:

a := k + c;

The code generation step will likely turn this into:

t0 := k + c;

a := t0;

Copy statements are also generated by optimizations such as Common Sub-Expression Elimination. As we will discuss in the results section, we found that Common Sub-Expression Elimination tended to make our compiler run slower. We conjectured that the generation of many copy expressions might be part of the problem. Also, copy expressions introduce many temporary variables, which makes it difficult for optimizations such as constant propagation and common subexpression elimination to identify memory expressions valid for optimization. More specifically, having many copy expressions makes it unlikely to see one memory expression, e.g. MEM[x], more than once, because it is likely there exists a copy expression y = x, after which all subsequent memory operations at address x will be performed in the form MEM[y]. As such, we have implemented standard copy propagation.

The aim of copy propagation, is given some copy statement:

x := y

we want to replace subsequent uses of the x by y and

delete the copy statement if it is safe to do so.

We can propagate a copy statement s: x:= y to some use of x if the following conditions hold.

- s is the only definition of x that reaches the use u.

- On every path from s going to u there are no assignments to y.

4. Experimental Setup

We use the L3 directory "big" as our test base. All the optimizations implemented were also tested on the "correct" directory and produced the desired output for each file.

In order to identify the sources of performance gains (and hits) we compare the following version of the compiler:

|

|

5. Results

Impact of Alias Analysis

The graph below, shows how much adding alias analysis improves our optimizations. On the x-axis are the "big" test cases, 1 through 10. On the y-axis is the percentage improvement of using alias analysis over the reference compiler with all optimizations turned on, minus the improvement over the reference compiler without alias information. Alias analysis increases performance by large percentages in many cases. In the few cases where it decreases performance, the difference is not significant.

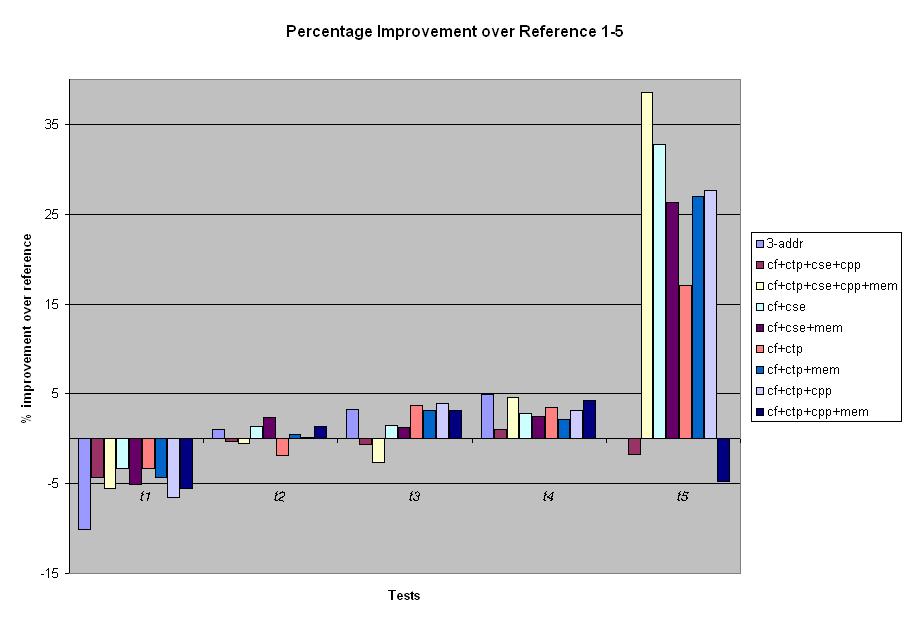

Results for Different Combinations of Optimizations

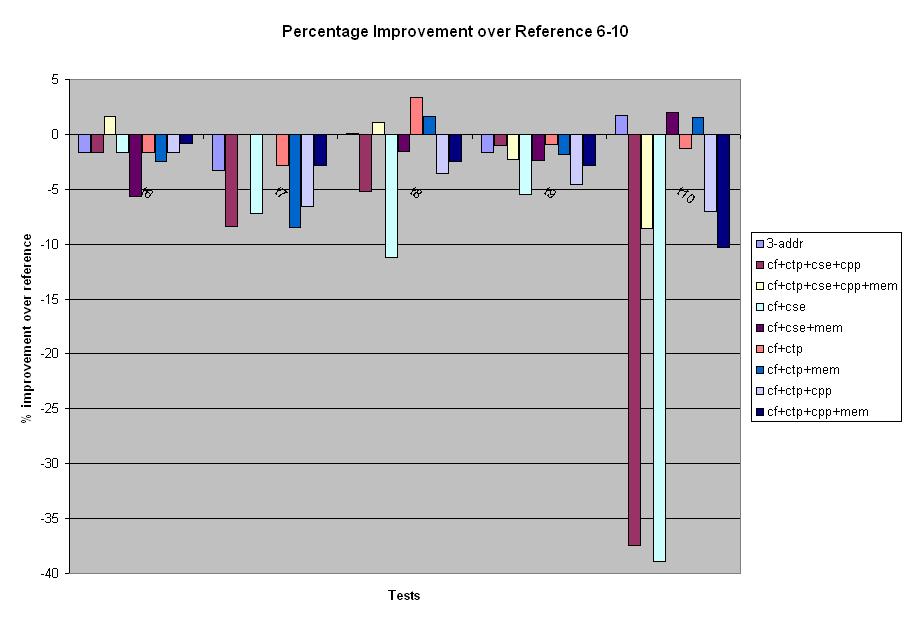

In this section, we look at the performance of different combinations of our optimizations on each individual test case. The graphs in this section show bars for each different combination of optimizations for each test case. The y-axis of each graph shows the percentage of improvement of the optimizations compared to the un-optimized reference compiler. Below we show the results for "big" test cases 1 through 5. On the test cases 1 through 4 there is no significant difference between the reference compiler and any of the versions of our optimized compiler. However on test 5, we see a large improvement from the optimizations. Without the alias analysis there is a drop in performance when all the optimizations are turned on. Once alias analysis is added we see a big improvement. Our optimizations, in particular CSE and alias analysis, lead to a large improvement on this test case due to the significant amount of pointer arithmetic and dereferencing in this test case. Below we show the results for the "big" test cases 6 through 10.

On cases 6,7 and 8, we see that all optimizations with alias analysis perform slightly better than the reference compiler, while without the alias analysis the optimizations performslightly worse.

On test 8, we see that Copy Propagation and alias analysis greatly improve CSE. However, using CSE on this test case overall decreased the performance and we see the best results when only Constant Folding and Constant Propagation are used. On case 10, CSE performs very poorly. Adding alias analysis improves its performance very significantly. The other optimizations also hurt performance but not significantly.

Below we show the results for the "big" test cases 6 through 10.

On cases 6,7 and 8, we see that all optimizations with alias analysis perform slightly better than the reference compiler, while without the alias analysis the optimizations performslightly worse.

On test 8, we see that Copy Propagation and alias analysis greatly improve CSE. However, using CSE on this test case overall decreased the performance and we see the best results when only Constant Folding and Constant Propagation are used. On case 10, CSE performs very poorly. Adding alias analysis improves its performance very significantly. The other optimizations also hurt performance but not significantly.

Results in Terms of Line Numbers

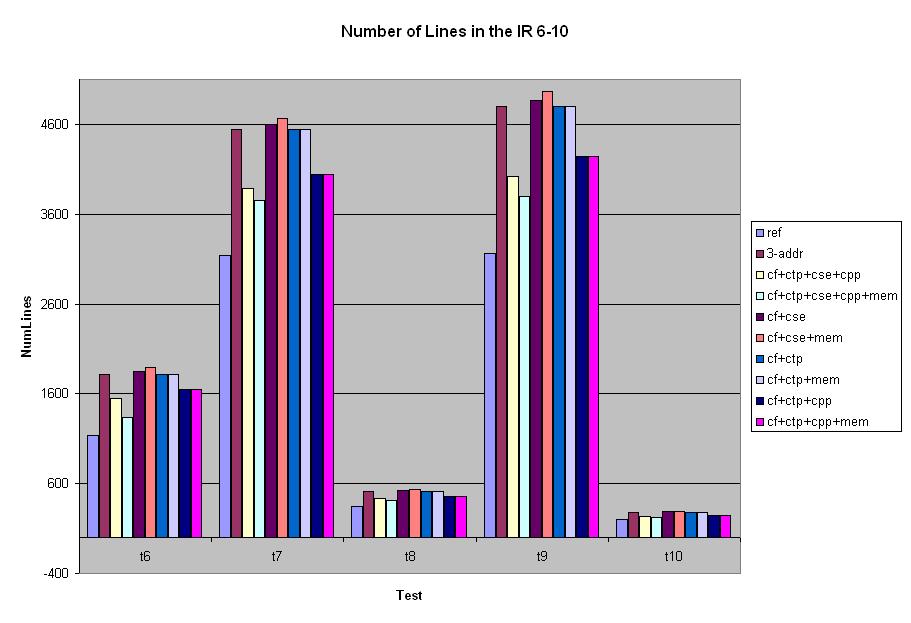

In this section, we look at how adding our optimizations decreased the number of lines in the IR code. All our optimizations use the 3-Address form, so we discuss the results in terms of whether the optimizations lead to less lines of code than un-optimized 3 Address. The graph below shows the number of lines in the IR code for different optimization settings for test cases 1 through 5 and 6 through 10. In each case, our code with all optimizations and alias analysis on or off decreased the number of lines compared to the un-optimized 3-Address code. The increased number of lines in some of the bars is due to CSE without copy propagation. When copy propagation is added the number of lines decreases.

Results in Terms of Number of Temporaries

The results we obtain when we compare the number of temporaries used in the IR in three address form with and without our optimizations are similar to the results for the number of lines. CSE increases the number of temporaries but when we use copy propagation this number drops. Alias analysis further reduces the number of temporaries.

6. Conclusions

Our experiments show that using alias analysis improves the effectiveness of most of our optimizations. In particular, when run together with all optimizations, it can often lead to an improvement over the reference compiler (using 3address form).We found that it is essential to use copy propagation in order to see an effect from using alias analysis. This is due to the fact that CSE and fat pointer operations create new temporaries. For example, suppose we knew that MEM(x)=4, but CSE (or the fat pointer operations code) could have copied y=x, and then all statement would be using MEM(y) instead of MEM(x). This happens in practically all test cases and hence without copy propagation using alias analysis does not benefit the optimizations at all.

Three address transformation and CSE increase both the number of temporaries used, and the number of lines of code. This often causes the register allocator to do worse and hence slows the code down. Alias analysis with copy propagation makes CSE more effective, and always decreases both the number of temporaries used and the number of lines. Unfortunately, the register allocator does not always perform better on a smaller number of temporaries since their interference graph may be complex. Therefore we do not always see the performance increase over the reference compiler we expect.

7. Extensions

We considered adding alias analysis to dead code elimination, and to copy propagation. We expect the effect of alias analysis in those two optimizations to be minor, mainly due to the conservativeness of our approach.8 References

[1] Andrew Appel, "Modern Compiler Implementation in ML", Cambridge University Press, 1999.[2] B.Cheng and W.Hwu, "Modular Interprocedural pointer analysis using access paths: design implementation and evaluation,", PLDI 2000, Vancouver, British Columbia, Canada.

[3] W.Landi and B.Ryder, "A safe approximate algorithm for inter-procedural pointer aliasing". In Proceeding of the ACM SIGPLAN'92 Conference on Programming language Design and Implementation, pp.235-248, June 1992.

[4] J. Wu. Survey of Alias Analysis [http://www.cs.princeton.edu/~jqwu/Memory/survey.html].

[5] S. Muchnick, Advanced Compiler Design and Implementation. Morgan Kaufmann Publishers, 1997.