Feras Saad فِرَاسْ سَعَد

I am an Assistant Professor in the Computer Science Department at Carnegie Mellon University, affiliated with the Principles of Programming and Artificial Intelligence groups.

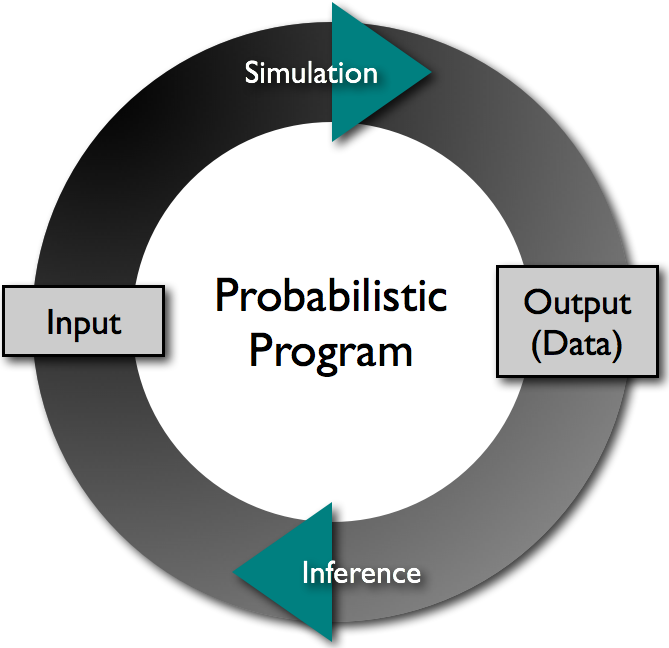

My research group develops programming techniques for probabilistic modeling and inference that are flexible, scalable, and sound, with formal guarantees of correctness and statistical validity. We explore these ideas through a blend of theoretical and applied research in probabilistic programming languages, statistics and data science, artificial intelligence, and the mathematical foundations of probabilistic computation.

Our projects are accompanied by open-source software libraries that help practitioners apply these techniques in their own applications. See the Research and Software sections for an overview.

Background

I received my PhD in Computer Science and my MEng and SB degrees in Electrical Engineering and Computer Science from MIT. My theses on probabilistic programming were recognized with the Sprowls PhD Thesis Award in AI+Decision Making and the Johnson MEng Thesis Award in Computer Science. Before joining CMU, I was a Visiting Research Scientist at Google.

Contact


Research

Our work combines ideas from probability and programming languages to build scalable systems for sound probabilistic modeling and inference. Current research themes include:

OOPSLA-24 PLDI-24 PLDI-21 POPL-19 PLDI-19 AISTATS-17 NIPS-16

OOPSLA-25 PLDI-24 AISTATS-22 AISTATS-19

Probabilistic Computing Systems Lab

Graduate Student, 2024–

Carnegie Mellon University

Interests: scientific computing, probabilistic programming

Graduate Student, 2024–

Carnegie Mellon University

Interests: probabilistic algorithms, information and computation

Alumni

Postdoctoral Associate, 2023–2024

→ Assistant Professor, POSTECH

PhD, Stanford University, 2023

Interests: continuous computation, program correctness

Visiting Graduate Student, 2024

Massachusetts Institute of Technology

Interests: differentiable programming, physical simulation

Publications

Efficient Rejection Sampling in the Entropy-Optimal Range

Draper and Saad

arXiv:2504.04267 [cs.DS], 2025

To Appear: IEEE Transactions on Information Theory

Efficient Online Random Sampling via Randomness Recycling

Draper and Saad

SODA, Proc. Annual ACM-SIAM Symposium on Discrete Algorithms, 2026

Random Variate Generation with Formal Guarantees

Saad and Lee

PLDI, Proc. ACM Program. Lang. 9, 2025

Floating-Point Neural Networks Are Provably Robust Universal Approximators

Hwang, Lee, Park, Park, Saad

CAV, Proc. 37th International Conf. on Computer Aided Verification, 2025

Integrating Resource Analyses via Resource Decomposition

Pham, Niu, Glover, Saad, Hoffmann

OOPSLA, Proc. ACM Program. Lang. 9, 2025

Scalable Spatiotemporal Prediction with Bayesian Neural Fields

Saad, Burnim, Carroll, Patton, Köster, Saurous, Hoffman

Nature Communications 15(7942), 2024

Editor's Highlight

Programmable MCMC with Soundly Composed Guide Programs

Pham, Wang, Saad, Hoffmann

OOPSLA, Proc. ACM Program. Lang. 8(OOPSLA2), 2024

Robust Resource Bounds with Static Analysis and Bayesian Inference

Pham, Saad, Hoffmann

PLDI, Proc. ACM Program. Lang. 8, 2024

GenSQL: A Probabilistic Programming System for Querying Generative Models of Database Tables

Huot, Ghavami, Lew, Schaechtle, Freer, Shelby, Rinard, Saad, Mansinghka

PLDI, Proc. ACM Program. Lang. 8, 2024

Learning Generative Population Models From Multiple Clinical Datasets via Probabilistic Programming

Loula, Collins, Schaechtle, Tenenbaum, Weller, Saad, O'Donnell, Mansinghka

AccMLBio, ICML Workshop on Efficient and Accessible Foundation Models for Biological Discovery, 2024

Sequential Monte Carlo Learning for Time Series Structure Discovery

Saad, Patton, Hoffman, Saurous, Mansinghka

ICML, Proc. 40th International Conf. on Machine Learning, 2023

Scalable Structure Learning, Inference, and Analysis with Probabilistic Programs

Saad

PhD Thesis, Massachusetts Institute of Technology, 2022

MIT George M. Sprowls PhD Thesis Award

Estimators of Entropy and Information via Inference in Probabilistic Models

Saad, Cusumano-Towner, Mansinghka

AISTATS, Proc. 25th International Conf. on Artificial Intelligence and Statistics, 2022

Bayesian AutoML for Databases via the InferenceQL Probabilistic Programming System

Schaechtle, Freer, Shelby, Saad, Mansinghka

AutoML, 1st International Conf. on Automated Machine Learning (Workshop), 2022

SPPL: Probabilistic Programming with Fast Exact Symbolic Inference

Saad, Rinard, Mansinghka

PLDI, Proc. 42nd International Conf. on Programming Language Design and Implementation, 2021

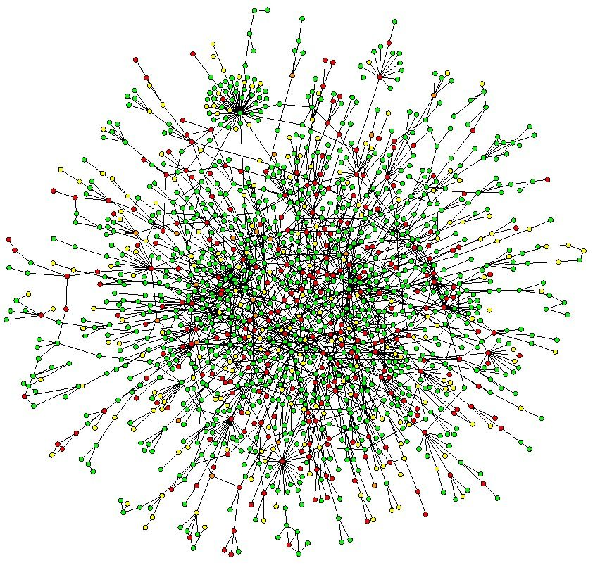

Hierarchical Infinite Relational Model

Saad, Mansinghka

UAI, Proc. 37th Conf. on Uncertainty in Artificial Intelligence, 2021

Oral Presentation

The Fast Loaded Dice Roller: A Near-Optimal Exact Sampler for Discrete Probability Distributions

Saad, Freer, Rinard, Mansinghka

AISTATS, Proc. 23rd International Conf. on Artificial Intelligence and Statistics, 2020

Optimal Approximate Sampling from Discrete Probability Distributions

Saad, Freer, Rinard, Mansinghka

POPL, Proc. ACM Program. Lang. 4, 2020

A Family of Exact Goodness-of-Fit Tests for High-dimensional Discrete Distributions

Saad, Freer, Ackerman, Mansinghka

AISTATS, Proc. 22nd International Conf. on Artificial Intelligence and Statistics, 2019

Bayesian Synthesis of Probabilistic Programs for Automatic Data Modeling

Saad, Cusumano-Towner, Schaechtle, Rinard, Mansinghka

POPL, Proc. ACM Program. Lang. 3, 2019

paper supplement link arXiv BibTeXGen: A General Purpose Probabilistic Programming System with Programmable Inference

Cusumano-Towner,Saad , Lew, Mansinghka

PLDI, Proc. 40th International Conf. on Programming Design and Implementation, 2019

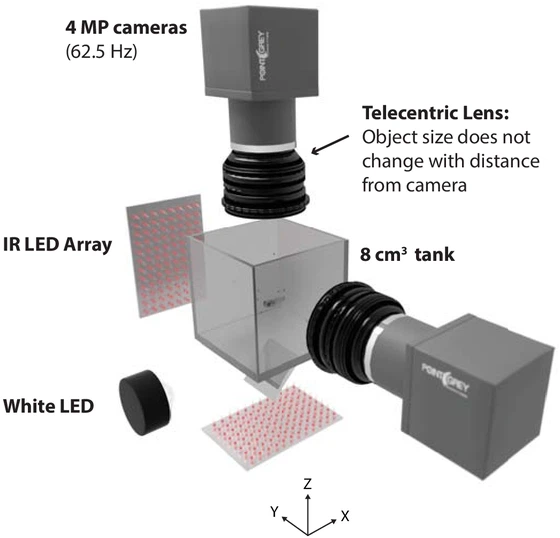

Elements of a Stochastic 3D Prediction Engine in Larval Zebrafish Prey Capture

Bolton, Haesemeyer, Jordi, Schaechtle, Saad, Mansinghka, Tenenbaum, Engert

eLife 8:e51975, 2019

Temporally-Reweighted Chinese Restaurant Process Mixtures for Clustering, Imputing, and Forecasting Multivariate Time Series

Saad, Mansinghka

AISTATS, Proc. 21st International Conf. on Artificial Intelligence and Statistics, 2018

Goodness-of-Fit Tests for High-dimensional Discrete Distributions with Application to Convergence Diagnostics in Approximate Bayesian Inference

Saad, Freer, Ackerman, Mansinghka

AABI, 1st Symposium on Advances in Approximate Bayesian Inference, 2018

Detecting Dependencies in Sparse, Multivariate Databases Using Probabilistic Programming and Non-parametric Bayes

Saad, Mansinghka

AISTATS, Proc. 20th International Conf. on Artificial Intelligence and Statistics, 2017

Probabilistic Search for Structured Data via Probabilistic Programming and Nonparametric Bayes

Saad, Casarsa, Mansinghka

PROBPROG, 1st International Conf. for Probabilistic Programming, 2018

Time Series Structure Discovery via Probabilistic Program Synthesis

Schaechtle*, Saad*, Radul, Mansinghka

PROBPROG, 1st International Conf. for Probabilistic Programming, 2018

A Probabilistic Programming Approach to Probabilistic Data Analysis

Saad, Mansinghka

NIPS, Proc. 30th Conf. on Neural Information Processing Systems, 2016

Probabilistic Data Analysis with Probabilistic Programming

Saad, Mansinghka

arXiv, Technical Report arXiv:1608.05347, 2016

Extended version of the NIPS 2016 paper.

Probabilistic Data Analysis with Probabilistic Programming

Saad

MEng Thesis, Massachusetts Institute of Technology, 2016

MIT Charles & Jennifer Johnson MEng Thesis Award, 1st Place


Teaching

| Term | Course | Title |
|---|---|---|
| Carnegie Mellon University | | |
| 2026 Spring | 15-819 | Probabilistic Programming Languages |
| 2025 Fall | 15-259/559 | Probability and Computing |
| 2025 Spring | 15-251 | Great Ideas in Theoretical Computer Science |
| 2024 Fall | 15-259/559 | Probability and Computing |
| 2024 Spring | 15-251 | Great Ideas in Theoretical Computer Science |
| Massachusetts Institute of Technology | | |
| 2015 Fall | 9.S915 | Introduction to Probabilistic Programming |

Software

GitHub: https://github.com/probsys

SPPL: Probabilistic programming language with fast exact symbolic inference

https://github.com/probsys/sppl

Gen.jl: General-purpose probabilistic programming with programmable inference

https://gen.dev

GenSQL: Probabilistic query language for generative models of database tables

https://github.com/OpenGen/GenSQL.query

BayesDB: Probabilistic programming for data analysis on SQLite

https://github.com/probcomp/bayeslite

CGPM: Library of composable probabilistic generative models

https://github.com/probcomp/cgpm

BayesNF: Bayesian neural fields for spatiotemporal data modeling

https://github.com/google/bayesnf

AutoGP.jl: Automated Bayesian model discovery for time series data

https://github.com/probsys/AutoGP.jl

HIRM: Bayesian nonparametric structure learning for relational systems

https://github.com/probsys/hierarchical-irm

TRCRPM: Bayesian method for clustering, imputing, and forecasting multivariate time series

https://github.com/probcomp/trcrpm

librvg: C library for synthesizing random variate generators with formal guarantees

https://github.com/probsys/librvg

RR: C library for recycling random bits in prominent sampling algorithms

https://github.com/probsys/randomness-recycling

ALDR: Faster exact sampler for discrete probability distributions

https://github.com/probsys/amplified-loaded-dice-roller

FLDR: Fast exact sampler for discrete probability distributions

https://github.com/probsys/fast-loaded-dice-roller

See also: code generator by Peter Occil.

OPTAS: Optimal limited-precision sampler for discrete distributions

https://github.com/probsys/optimal-approximate-sampling

Probabilistic Programming Systems

Statistical Modeling

Random Variate Generation

Sublime Text Users: Productivity plugins (9000+ users):

AddRemoveFolder;

RemoveLineBreaks;

ViewSetting.

Talks

Random Variate Generation with Formal Guarantees

PLDI 2025, Seoul, Republic of Korea

Keynote: Scalable Spatiotemporal Prediction with Bayesian Neural Fields

UAI 2024 Workshop on Tractable Probabilistic Modeling, Barcelona, Spain

Automated Gaussian Processes and Sequential Monte Carlo

Learning Bayesian Statistics Podcast, Episode #104, 2024

Keynote: Domain-Specific Probabilistic Programs for Time Series Modeling

Google Forecasting Summit 2023, Mountain View, CA

Programmable Systems for Probabilistic Modeling and Inference

SCS Faculty Lightning Talks 2023

Scalable Structure Learning and Inference via Probabilistic Programming

MIT Thesis Defense, 2022, Virtual

MIT Thesis Defense, 2022, Cambridge, MA

Scalable Structure Learning and Inference for Domain-Specific Probabilistic Programs

LAFI 2022, Philadelphia, PA

SPPL: Probabilistic Programming with Fast Exact Symbolic Inference

PROBPROG 2021, Virtual

SPLASH 2021, Chicago, IL

MIT PLSE Seminars 2021, Virtual

PLDI 2021 [Extended] [Lightning], Virtual

Fairer and Faster AI Using Probabilistic Programming Languages

MIT Horizon 2021, Virtual

Hierarchical Infinite Relational Model

UAI 2021 (Oral Presentation), Virtual

Fast Loaded Dice Roller

AISTATS 2020, Virtual

Optimal Approximate Sampling from Discrete Probability Distributions

POPL 2020, New Orleans, LA

Bayesian Synthesis of Probabilistic Programs for Automatic Data Modeling

CICS Seminars 2019, Notre Dame, IN

POPL 2019, Cascais, Portugal

PROBPROG 2018, Boston, MA

Press

MIT researchers introduce generative AI for databases. MIT News, Jul. 2024

Estimating the informativeness of data. MIT News, Apr. 2022

Exact symbolic AI for faster, better assessment of AI fairness. MIT News, Aug. 2021

How and why computers roll loaded dice. Quanta Magazine, Jul. 2020

How Rolling Loaded Dice Will Change the Future of AI. Intel Press Release, Jul. 2020

Algorithm quickly simulates a roll of loaded dice. MIT News, Jan. 2020

MIT Debuts Gen, a Julia-Based Language for Artificial Intelligence. InfoQ, Jul. 2019

New AI programming language goes beyond deep learning. MIT News, Jun. 2019

Democratizing data science. MIT News, Jan. 2019

MIT lets AI "synthesize" computer programs to aid data scientists. ZDNet, Jan. 2019