Depth-supervised NeRF: Fewer Views and Faster Training for Free

Kangle Deng1 Andrew Liu2 Jun-Yan Zhu1 Deva Ramanan1,3

1CMU 2Google 3Argo AI

In CVPR 2022

Paper | GitHub | YouTube

Abstract

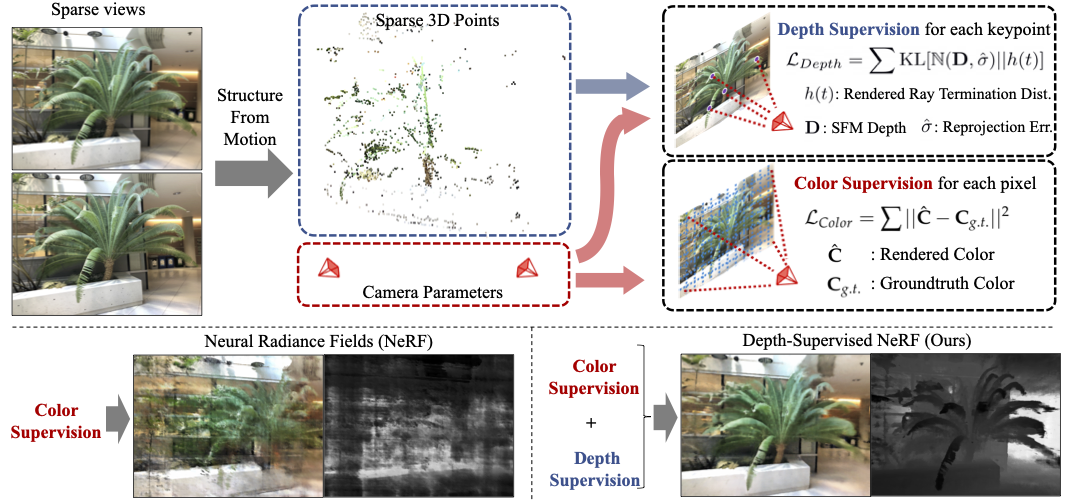

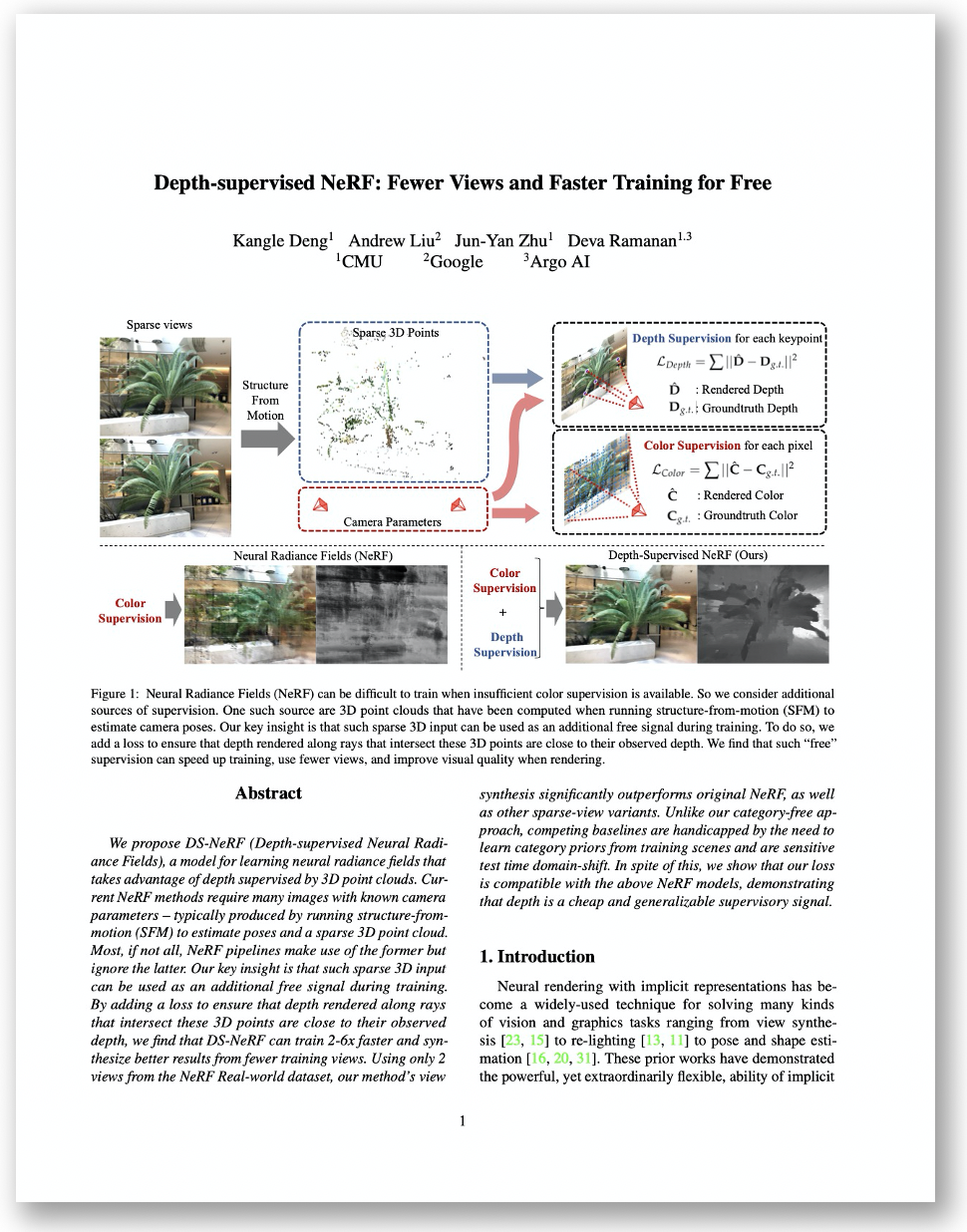

A commonly observed failure mode of Neural Radiance Field (NeRF) is fitting incorrect geometries when given an insufficient number of input views. One potential reason is that standard volumetric rendering does not enforce the constraint that most of a scene's geometry consist of empty space and opaque surfaces. We formalize the above assumption through DS-NeRF (Depth-supervised Neural Radiance Fields), a loss for learning radiance fields that takes advantage of readily-available depth supervision. We leverage the fact that current NeRF pipelines require images with known camera poses that are typically estimated by running structure-from-motion (SFM). Crucially, SFM also produces sparse 3D points that can be used as ``free" depth supervision during training: we add a loss to encourage the distribution of a ray's terminating depth matches a given 3D keypoint, incorporating depth uncertainty. DS-NeRF can render better images given fewer training views while training 2-3x faster. Further, we show that our loss is compatible with other recently proposed NeRF methods, demonstrating that depth is a cheap and easily digestible supervisory signal. And finally, we find that DS-NeRF can support other types of depth supervision such as scanned depth sensors and RGB-D reconstruction outputs.

Paper

arxiv:2107.02791 , 2021.

Citation

Kangle Deng, Andrew Liu, Jun-Yan Zhu, and Deva Ramanan. "Depth-supervised NeRF: Fewer Views and Faster Training for Free", in CVPR 2022.

Bibtex

Summary Video

Ray Termination Distribution Visualization

We visualize the ray termination distribution for some selected points. Hover over the red/blue points to see the ray termination distribution.Visual Comparisons

Trained on 2 views:

Ours

NeRF

Trained on 5 views:

Make use of RGB-D input

DS-NeRF is able to use different sources of depth information other than COLMAP, such as RGB-D input. We derive dense depth maps for each training view with RGB-D input from the Redwood dataset. With dense depth supervision, our DS-NeRF can render even higher quality images and depth maps than DS-NeRF with COLMAP sparse depth supervision.

NeRF

DS-NeRF with COLMAP

DS-NeRF with RGB-D

NeRF

DS-NeRF with COLMAP

DS-NeRF with RGB-D

Acknowledgment

We thank Takuya Narihira, Akio Hayakawa, Sheng-Yu Wang, and for helpful discussion. We are grateful for the support from Sony Corporation, Singapore DSTA, and the CMU Argo AI Center for Autonomous Vehicle Research.