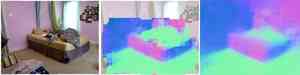

Data-Driven 3D Primitives for Single Image Understanding

(Prediction Code)

The Important Stuff

Downloads:

- Code (2.9MB .zip), version 1.02, updated 8/14/2014

- Data (926MB .tar.gz)

- Model for standard train/test (1.5GB zip)

Contact:

David Fouhey (please note that we cannot provide support)

This is a prediction-only version of the code for Data-Driven 3D Primitives.

It contains a model pre-trained on one of our four cross-validation splits of the NYU Dataset.

Important: Because the model was trained on NYU images, it must not be used for prediction on the NYU dataset.

Setup

- Download the code and the data, and unpack each into its own directory (referred to below as $code and $data, respectively).

- Point the code at the data: edit getResourcePath.m and set dataPath to $data/ (with the trailing slash).

- You may need to recompile the feature-computation code by running compile.m. The distribution includes mex files for Linux x86_64, but recompiling is advisable even on that platform.

Important parameters

The code is encapsulated in a single function, run3DP.m, which can run everything described in the paper and is controlled by an options structure, much like MATLAB's optimset. The important options are listed below; the remaining options are either internal parameters or concern data formats.

- options.sparseThresholds: a set of thresholds in [0,1] for the sparse algorithm (one result is returned per threshold).

- options.denseThresholds: a set of thresholds for the dense algorithm (one result is returned per threshold).

- options.makeRectifiedCopies: whether to run a Manhattan-world vanishing point detector (from Hedau, Hoiem and Forsyth) on the input image and snap the results to the vanishing points.
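As a minimal sketch, the three options above could be set as follows before calling run3DP.m. Only the field names come from this page; the threshold values are the seven used in the example results below, and treating makeRectifiedCopies as a logical flag is an assumption. The exact argument list of run3DP.m is not documented here, so the call itself is left out; check the function's header before passing the structure in.

```matlab
% Sketch of an options structure for run3DP.m (assumed usage).
% Only these three field names come from the documentation; any other
% field is left unset so that run3DP.m can apply its own defaults.
options = struct();

% Sparse algorithm: one result is returned per threshold, each in [0,1].
options.sparseThresholds = [0.35 0.45 0.55 0.65 0.75 0.85 0.95];

% Dense algorithm: one result is returned per threshold.
options.denseThresholds = [0.35 0.45 0.55 0.65 0.75 0.85 0.95];

% Also run the Manhattan-world vanishing point detector and snap the
% results to the vanishing points (logical flag is an assumption).
options.makeRectifiedCopies = true;

% Sanity-check the sparse thresholds before handing options to run3DP.m.
assert(all(options.sparseThresholds >= 0 & options.sparseThresholds <= 1));
fprintf('%d sparse and %d dense results expected per input image\n', ...
    numel(options.sparseThresholds), numel(options.denseThresholds));
```

Because one result is returned per threshold, fewer thresholds mean fewer outputs to inspect; a single value such as 0.55 is enough for a quick test run.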

[Figure: example results. Columns: thresholds 0.35, 0.45, 0.55, 0.65, 0.75, 0.85, 0.95. Rows: Sparse Results, Dense Results, Sparse Results (Rectified), Dense Results (Rectified).]

Acknowledgments

The data in this package comes from the NYU Depth v2 Dataset, collected by Nathan Silberman, Pushmeet Kohli, Derek Hoiem and Rob Fergus.

This code builds on the following codebases, parts of which are bundled with it:

- Unsupervised Discovery of Mid-Level Discriminative Patches, S. Singh, A. Gupta, and A.A. Efros.

- Discriminatively trained deformable part models, R.B. Girshick, P.F. Felzenszwalb, and D. McAllester.

- Recovering the Spatial Layout of Cluttered Rooms, V. Hedau, D. Hoiem, and D. Forsyth.

- Geometric Reasoning for Single Image Structure Recovery, D.C. Lee, M. Hebert, and T. Kanade.