|

This paper

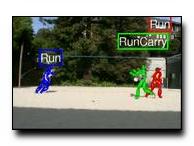

describes a system that can annotate a video sequence with:

a description of the appearance of each actor; when the actor is in

view; and a representation of the actor's activity while in view. The

system does not require a fixed background, and is automatic. The

system works by (1) tracking people in 2D and then, using an annotated

motion capture dataset, (2) synthesizing an annotated 3D motion

sequence matching the 2D tracks. The 3D motion capture data is manually

annotated off-line using a class structure that describes everyday

motions and allows motion annotations to be composed --- one may jump

while running, for example. Descriptions computed from video of real

motions show that the method is accurate.
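The idea of composable motion annotations can be illustrated with a small sketch. This is not the authors' implementation: it assumes, purely for illustration, that each frame carries a set of motion labels, that composing annotations means taking the union of those sets, and that the helper names `compose` and `to_intervals` are hypothetical. The labels "run" and "jump" come from the example in the abstract.

```python
from itertools import groupby


def compose(*labels):
    """Combine independent motion labels into one composite annotation.

    Assumption: an annotation is modelled as an unordered set of labels.
    """
    return frozenset(labels)


def to_intervals(per_frame):
    """Collapse per-frame annotations into (start, end, labels) runs.

    `end` is exclusive, so (3, 5, ...) covers frames 3 and 4.
    """
    intervals = []
    start = 0
    for labels, group in groupby(per_frame):
        length = len(list(group))
        intervals.append((start, start + length, labels))
        start += length
    return intervals


# Hypothetical track: the actor runs throughout and jumps in frames 3-4.
frames = (
    [compose("run")] * 3
    + [compose("run", "jump")] * 2
    + [compose("run")] * 2
)
intervals = to_intervals(frames)
```

Representing each annotation as a set makes composition order-independent, so "jump while running" and "run while jumping" map to the same annotation.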

Ramanan, D., Forsyth, D. A. "Automatic Annotation of Everyday Movements." Neural Info. Proc. Systems (NIPS), Vancouver, Canada, Dec 2003.

Paper

Journal version (draft)

Poster

|