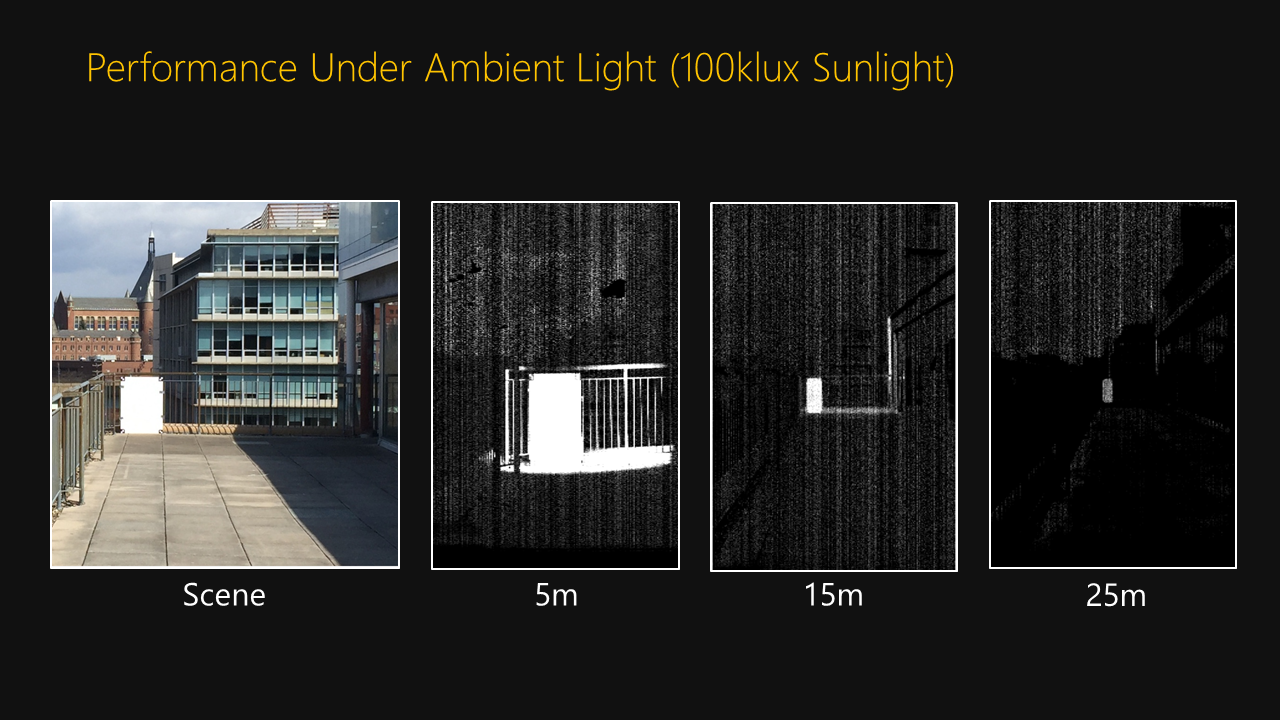

Outdoor Performance

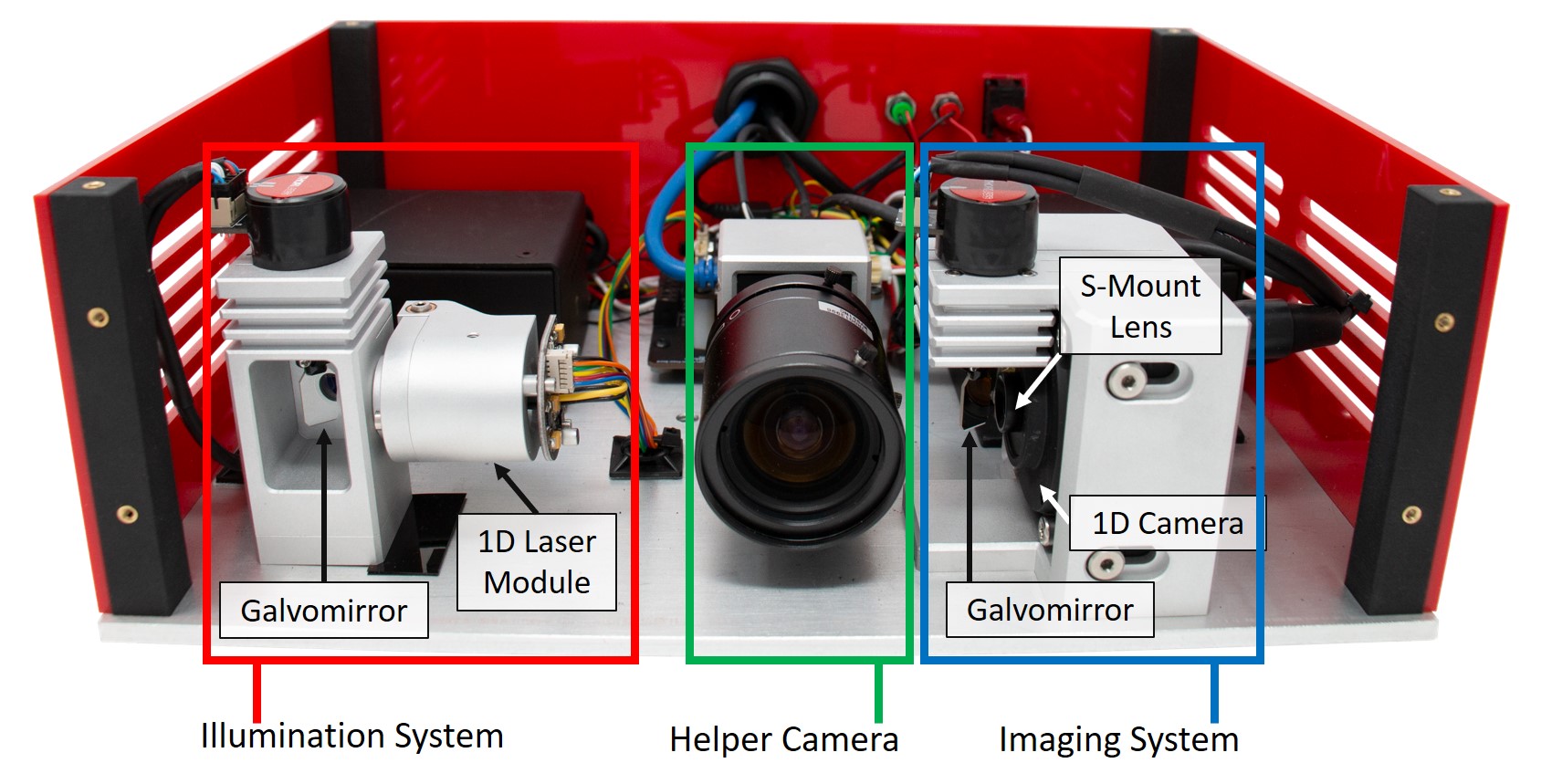

Programmable light curtains perform very well in strong ambient light. One reason is that both illumination and imaging are concentrated into lines. The device projects a line of light and images along a matching line instead of emitting a broad flash. As a result, it receives more light per unit area, which enables long-range imaging. This is similar to the approach we demonstrated earlier with the Episcan3D and EpiToF prototypes.
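The benefit of concentrating light into a line can be shown with a quick irradiance calculation. This is a minimal, idealized sketch. It assumes the same source power is spread uniformly over either a full rectangular area or a thin line of the same height, and it ignores optical losses. The helper names and the example dimensions are hypothetical and do not describe the actual device.

```python
def irradiance(power_w: float, width_m: float, height_m: float) -> float:
    """Mean irradiance (W/m^2) when power is spread uniformly over a rectangle."""
    return power_w / (width_m * height_m)


def concentration_gain(flash_width_m: float, line_width_m: float, height_m: float) -> float:
    """Ratio of line irradiance to flash irradiance for the same source power.

    Idealized assumption: uniform spreading, equal heights, no optical losses.
    """
    power_w = 1.0  # the power cancels out of the ratio
    return irradiance(power_w, line_width_m, height_m) / irradiance(power_w, flash_width_m, height_m)


# Hypothetical numbers: an 8 m x 4 m illuminated area vs. a 0.125 m wide line of the same height.
flash = irradiance(1.0, 8.0, 4.0)
line = irradiance(1.0, 0.125, 4.0)
print(flash, line, line / flash)  # 0.03125 2.0 64.0
```

Under these assumptions, the gain is simply the ratio of the full width to the line width. Narrower lines therefore put proportionally more light on each illuminated point.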

Another reason for the high performance is ambient light subtraction. To improve detection, the light curtain captures two images at each step. One is taken with the laser on, and the other with the laser off, so that it records only the ambient light. The ambient image is then subtracted from the laser-on image, which leaves only the contribution from the laser light. With this approach, the programmable light curtain device can see a whiteboard more than 25 meters away in bright sunlight.
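The on/off subtraction described above can be sketched with NumPy as follows. This is a minimal sketch, not the device's actual pipeline. It assumes both frames come from the same curtain step with identical exposure, so the ambient term cancels. The `detect` threshold rule and all example pixel values are hypothetical.

```python
import numpy as np


def subtract_ambient(laser_on: np.ndarray, laser_off: np.ndarray) -> np.ndarray:
    """Return the laser-only contribution from an on/off image pair.

    Assumes both frames were captured at the same curtain step with the
    same exposure, so ambient light cancels in the difference.
    """
    if laser_on.shape != laser_off.shape:
        raise ValueError("on/off frames must have the same shape")
    # Use a signed type so the subtraction cannot wrap around in uint8/uint16.
    diff = laser_on.astype(np.int32) - laser_off.astype(np.int32)
    # Noise can make the difference slightly negative; clamp it to zero.
    return np.clip(diff, 0, None)


def detect(laser_only: np.ndarray, threshold: int) -> np.ndarray:
    """Hypothetical detection rule: flag pixels whose laser return exceeds a threshold."""
    return laser_only > threshold


# Hypothetical 2x3 frames in bright ambient light (value 200 everywhere).
laser_off = np.full((2, 3), 200, dtype=np.uint8)
laser_off[0, 0] = 205           # a noisy ambient pixel
laser_on = np.full((2, 3), 200, dtype=np.uint8)
laser_on[1, 2] = 250            # laser return from an object on the curtain

result = subtract_ambient(laser_on, laser_off)
print(result.tolist())                 # [[0, 0, 0], [0, 0, 50]]
print(detect(result, 20).tolist())     # [[False, False, False], [False, False, True]]
```

The frames are cast to a signed integer type before subtracting because unsigned image types would wrap negative differences into large positive values. This would create false detections.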

Jian Wang

Joe Bartels

William "Red" Whittaker

Aswin Sankaranarayanan

Srinivasa Narasimhan