Our goal is to design a fast and reliable computational sensor which, given a task, provides useful information for coherent interaction with the environment. Low-latency visual tracking of salient targets in the field of view (FOV) is an important task in machine vision. Several issues must be addressed: 1) selecting a target from the background, 2) maintaining the tracking engagement (i.e., target locking), and 3) shifting tracking to a new target, either when the presently engaged feature leaves the FOV or when the user or a host computer decides to select and track another target in the FOV.

The problem of visual attention in biological systems concerns similar issues: 1) select a salient location in the image, 2) transfer local data from that location to the higher processing stage, and 3) proceed by selecting a new interesting location [11]. By selecting only relevant retinotopic information at intermediate processing levels, visual attention protects the limited communication and processing resources from information overload at higher levels. However, Allport suggested that the need for attention goes beyond protecting limited resources during complex object recognition: attention is needed to ensure behavioral coherence. Namely, selective processing is necessary in order to isolate the parameters (e.g., target location, velocity, size) for the appropriate action. For behavioral coherence, the following requirements for attention are suggested [1]: 1) low-latency requirement: the attentional system must operate in fast-changing environments; 2) locking requirement: the appropriate attentional engagement has to be maintained while an action is executed; 3) purposive shift requirement: the system must be able to override the attentional engagement when faced with environmental threats or opportunities. The purposive shift requirement conflicts with the locking requirement, but humans and other species adopt a combination of two partial solutions: 1) intentional shift: generate a shift based on a range of heuristics and experiences; and 2) opportunistic shift: elicit a shift in response to detecting a more “attractive” sensory cue.

The requirements for visual attention are analogous to the requirements for low-latency robust tracking. In fact, if the features that are salient for the tracking device are also salient for the attentional system, the distinction between tracking shifts and attention shifts fades. It is then permissible to speak of our implementation as an implementation of a primitive sensory attention system. The term sensory attention (as opposed to visual attention) emphasizes that data selection in our sensor is performed over the sensory signal, rather than over the retinotopic scene representation at the intermediate processing levels on which attention may operate in brains.

Our implementation of sensory attention meets both the low-latency requirement and the locking requirement while providing mechanisms for both intentional and opportunistic shifts. These features are only weakly addressed in other recently reported attention-related circuits. In one example, a one-dimensional array of cells electrically receives a saliency map and uses delayed transient inhibition at the selected location to model covert attentional scanning as suggested by Koch and Ullman. The circuit mimics one aspect of the object recognition process, in which attention roams across the conspicuous features of an object (e.g., discontinuities and contours). However, it is questionable whether this implementation would meet the low-latency requirement in fast-changing scenes: when several equally salient (but not equally relevant) targets are in the FOV, the attention may take a long time to reach the task-relevant target, if it reaches it at all. In another example, a reduced version of this circuit is used in a one-dimensional optical tracking sensor that computes a saliency map on-chip but has only a weak mechanism for meeting the locking requirement in complex scenes. Both designs inherently implement opportunistic shifts, but neither provides a mechanism for intentional shifts.

A winner-take-all (WTA) circuit is responsible for selecting a salient feature and reporting the location of the attention. This location is global information that is reported as the sensor output. It is also used internally to adapt the attention so that the locking requirement is met.

In practical applications, there are often several targets in the scene, and the target of interest is not necessarily the strongest or the most salient. We need to direct the sensor's attention toward that target. Once the target is selected, we need a mechanism that will lock onto and track it while it is of interest and/or while a perceptually guided goal is being executed. Recalling the analogy with visual attention, the selection mechanism corresponds to intentional attention shifts initiated by "telling" the observer where to "look," while the locking mechanism meets the locking requirement and maintains attentional engagement under motion.

Our implementation solves these issues by inhibiting a portion of the saliency map, thus restricting the activity of the WTA circuit to a programmable active region, a subset of the array. The active region is programmed by appropriate row and column addressing. There are two modes of operation: 1) select mode and 2) lock mode. In select mode, the active region is defined by the user through external addressing (see figure on the right) and can be of arbitrary size and location. The target selected is the absolute maximum within this region.
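
The effect of the select mode can be illustrated in software. The following is a minimal sketch, not a description of the circuit: it assumes the saliency map is a plain 2-D list of numbers and models row and column addressing as inclusive index ranges. The function name `select_mode_winner` and its `rows` and `cols` parameters are hypothetical and chosen only for this illustration.

```python
def select_mode_winner(saliency, rows, cols):
    """Winner-take-all restricted to a rectangular active region.

    rows and cols are inclusive (first, last) index pairs, a hypothetical
    stand-in for the sensor's row and column addressing. Cells outside
    the region are ignored, which plays the role of inhibition here.
    """
    (r0, r1), (c0, c1) = rows, cols
    best_value, best_loc = None, None
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            if best_value is None or saliency[r][c] > best_value:
                best_value, best_loc = saliency[r][c], (r, c)
    return best_loc


# Two targets: a weaker one at (1, 1) and a stronger one at (3, 3).
saliency = [
    [0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.6, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1, 0.1],
]

# With the whole array active, the global maximum wins.
whole_array = select_mode_winner(saliency, rows=(0, 4), cols=(0, 4))

# With the active region limited to the upper-left corner,
# the weaker target is selected instead.
upper_left = select_mode_winner(saliency, rows=(0, 2), cols=(0, 2))

print(whole_array, upper_left)
```

In this sketch, narrowing the active region is enough to steer the winner toward a target that is not the strongest in the scene.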

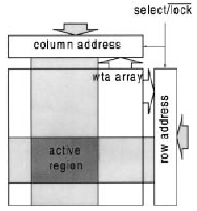

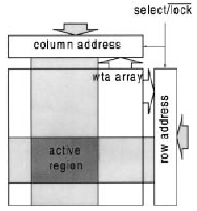

In lock mode, the sensor itself dynamically defines a small (e.g., 3x3 cells in our implementation) active region centered at the most recent location of the target (see figure on the left). The select mode directs the attention toward a feature that is useful for the task at hand. For example, a user may specify an initial active region, aiding the sensor in attending to the relevant local peak in the scene. The lock mode is then enabled to lock onto the selected feature. In lock mode, the 3x3 active region stays centered on the current attention target: if the target moves, one of the eight active neighbors in the WTA array receives the winning intensity peak, and the position of the 3x3 active region is updated automatically. The salient target is therefore not necessarily the absolute intensity maximum in the image. The ability of the sensor to define its own active region is an example of the top-down sensory adaptation presently missing in conventional machine vision systems.
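
The lock-mode behavior described above can be sketched as a simple loop over frames. This is an illustrative software model under stated assumptions, not the sensor's circuit: frames are 2-D lists, the target moves by at most one cell per frame, and the helper names (`lock_region`, `masked_winner`, `track`, `make_frame`) are hypothetical.

```python
def lock_region(center, n_rows, n_cols):
    """Cells of the 3x3 neighborhood around center, clipped at the border."""
    r, c = center
    return [(i, j)
            for i in range(max(r - 1, 0), min(r + 2, n_rows))
            for j in range(max(c - 1, 0), min(c + 2, n_cols))]


def masked_winner(saliency, region):
    """Location of the largest value among the cells listed in region."""
    return max(region, key=lambda loc: saliency[loc[0]][loc[1]])


def track(frames, start):
    """Follow a target through frames, starting from a selected location."""
    location = start
    path = []
    for saliency in frames:
        # The active region is re-centered on the most recent winner.
        region = lock_region(location, len(saliency), len(saliency[0]))
        location = masked_winner(saliency, region)
        path.append(location)
    return path


def make_frame(target, distractor, size=6):
    """Synthetic frame: a dim target plus a brighter, stationary distractor."""
    frame = [[0.0] * size for _ in range(size)]
    frame[target[0]][target[1]] = 0.5
    frame[distractor[0]][distractor[1]] = 1.0
    return frame


# The target moves one cell to the right per frame; the distractor stays put.
frames = [make_frame((1, 1 + t), (5, 0)) for t in range(4)]
path = track(frames, start=(1, 1))
print(path)
```

In this toy sequence the brighter distractor never enters the 3x3 window, so the tracked path follows the dimmer target even though it is not the global maximum of any frame.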

We implemented the Sensory Attention in the Tracking Sensor.
