Crowd Flow Segmentation & Stability Analysis

Related Publication: Saad Ali and Mubarak Shah, "A Lagrangian Particle Dynamics Approach for Crowd Flow Segmentation and Stability Analysis," IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2007.


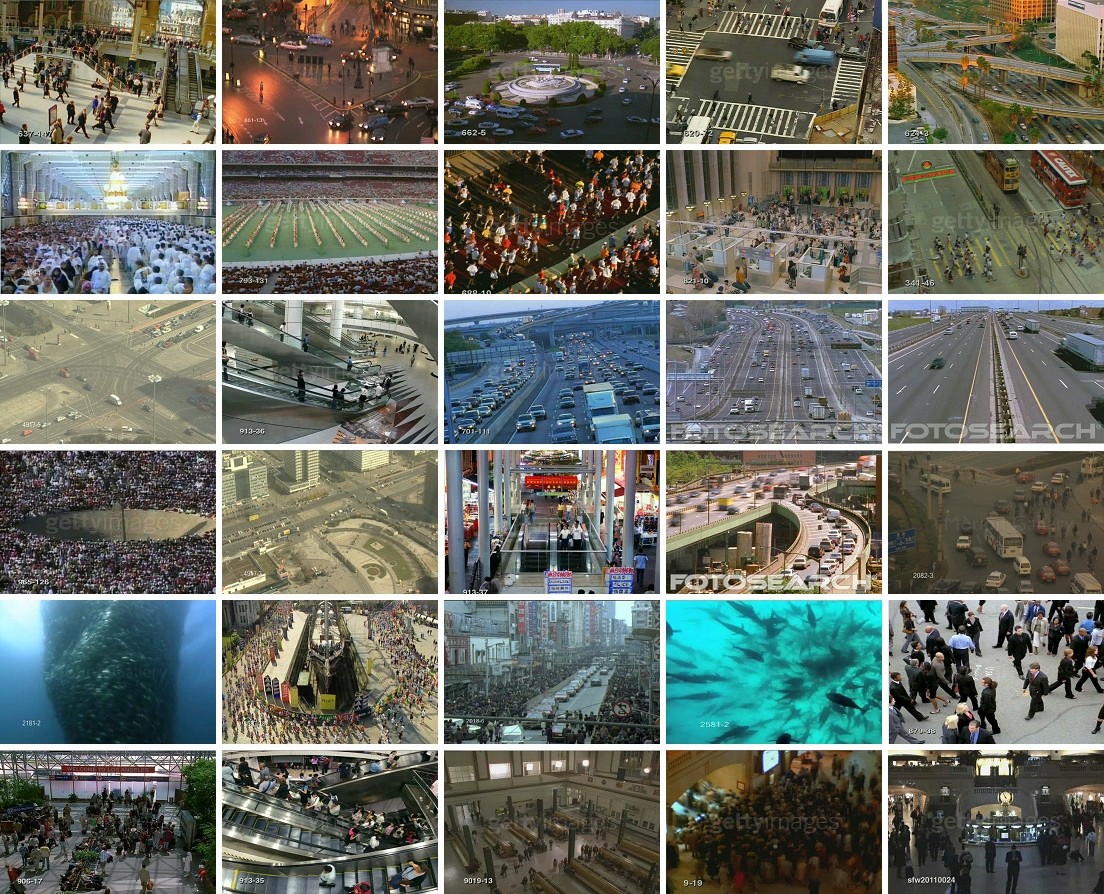

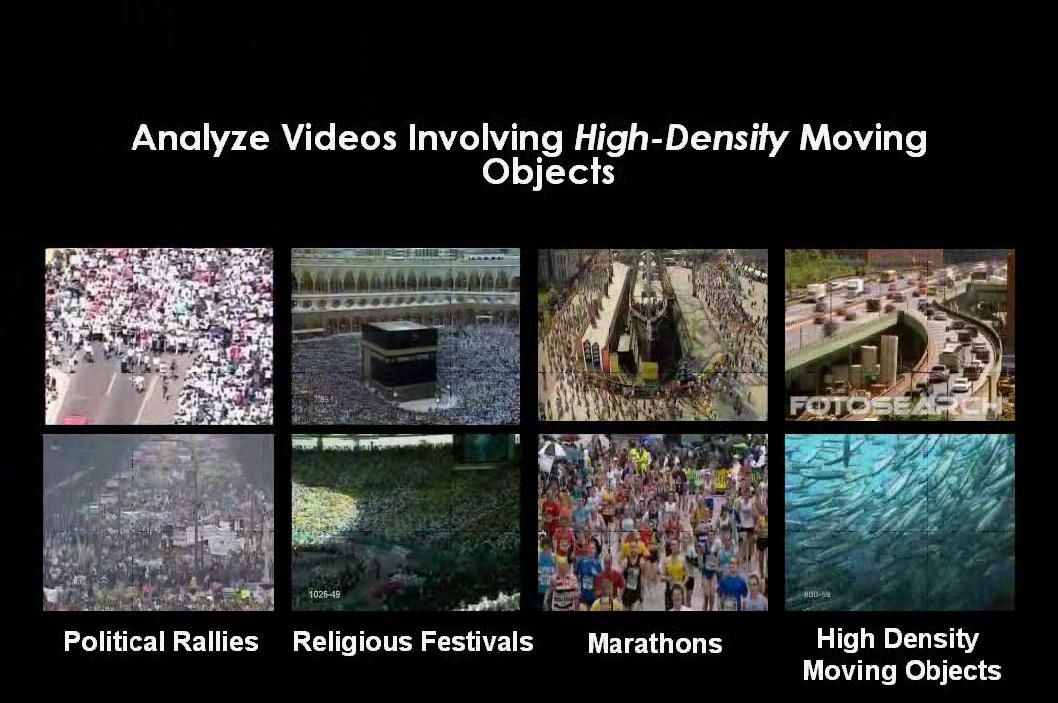

Introduction

Video surveillance in public places is proliferating at an unprecedented rate, from closed-circuit security systems that monitor individuals at airports, subways, concerts, and sporting events, to networks of cameras blanketing important locations within a city. Over the years, a number of intelligent surveillance systems have been developed to process the footage generated by these cameras effectively and efficiently. However, despite their sophistication, these systems have not yet attained the level of applicability and robustness required in real-world settings and uncontrolled conditions. This is largely due to algorithmic assumptions about the density of objects in a scene: the algorithms built into these systems assume that the observed scene will contain a low density of objects, an assumption that real-world environments often violate. Video feeds from places such as train stations, airports, city centers, malls, concerts, political rallies, and sporting events contain moderate to high crowd densities, and in such footage simply detecting, tracking, and inferring events automatically and reliably remains a major hurdle for these systems.

To overcome this shortcoming, we have developed a framework that models the crowded scene at a global level and bypasses low-level object localization and tracking altogether. This is achieved by treating the crowded scene as a fluid flow and employing Lagrangian Particle Dynamics to detect the dominant crowd flow segments. The dynamical behavior of each flow segment is then modelled to infer any abnormal activity taking place within the crowd.


Algorithmic Steps

Given a video of a crowded scene, the first step is to compute the optical flow between consecutive frames. The optical flow fields are stacked to form a 3D volume. Next, a grid of Lagrangian particles is overlaid on the flow field volume and advected using a numerical integration scheme. The evolution of the particles through the flow is tracked using Flow Maps, which relate the initial position of each particle to its final position. The third step computes the spatial gradients of the flow maps and uses them to quantify how far neighboring particles have diverged, by setting up a Cauchy-Green deformation tensor. The maximum eigenvalue of this tensor is used to construct the Finite Time Lyapunov Exponent (FTLE) field, which reveals the Lagrangian Coherent Structures (LCS) present in the underlying flow. The LCS, which appear as ridges in the FTLE field, divide the flow into regions of qualitatively different dynamics and can therefore be used to locate the boundaries of the crowd flow segments. This is done by segmenting the FTLE field, a scalar field, in a normalized cuts framework. Finally, any change in the learned dynamics of the underlying flow is regarded as an instability, which is detected by establishing correspondence between flow segments over time and singling out newly appearing segments. A brief description of each step and the associated results is provided in the following sections.

(a) The block diagram of the crowd flow segmentation and instability detection algorithm.
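To make the FTLE step concrete, here is a minimal NumPy sketch (not the code used in the paper) that turns a 2D flow map into an FTLE field. It assumes the flow map is given as two arrays `phi_x` and `phi_y` holding the final coordinates of particles launched one per pixel, plus an integration time `T`; the function name `ftle_field` and the `spacing` parameter are hypothetical. It follows the standard definition sigma = (1/|T|) ln sqrt(lambda_max), where lambda_max is the largest eigenvalue of the Cauchy-Green tensor built from the flow map Jacobian.

```python
import numpy as np


def ftle_field(phi_x, phi_y, T, spacing=1.0):
    """FTLE field from a 2D flow map (final particle positions) over integration time T."""
    # np.gradient returns the derivative along axis 0 (rows, y) first, then axis 1 (columns, x)
    dphix_dy, dphix_dx = np.gradient(phi_x, spacing)
    dphiy_dy, dphiy_dx = np.gradient(phi_y, spacing)
    # Entries of the Cauchy-Green tensor C = J^T J, where J is the flow map Jacobian
    c11 = dphix_dx ** 2 + dphiy_dx ** 2
    c12 = dphix_dx * dphix_dy + dphiy_dx * dphiy_dy
    c22 = dphix_dy ** 2 + dphiy_dy ** 2
    # Closed-form largest eigenvalue of the symmetric 2x2 matrix [[c11, c12], [c12, c22]]
    lam_max = 0.5 * (c11 + c22) + np.sqrt((0.5 * (c11 - c22)) ** 2 + c12 ** 2)
    # sigma = (1/|T|) * ln(sqrt(lambda_max)); the floor avoids log(0) for degenerate maps
    return np.log(np.sqrt(np.maximum(lam_max, 1e-12))) / abs(T)
```

An identity flow map (particles that never move) gives an FTLE of zero everywhere, while a flow map that pulls particles apart across a line, such as `phi_x = x + 5 * tanh(x - 10)`, produces a ridge of high FTLE along that line; ridges of this kind are what the segmentation step treats as flow-segment boundaries.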


i) Optical Flow Computation

Given a video sequence containing crowds, the first task is to estimate the flow field. For this purpose we employ a scheme based on block-based correlation in the Fourier domain. Here we show the results of the optical flow computation on two sequences from our data set.
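The page does not spell out the details of the block-based Fourier correlation, so the following is only a minimal sketch of one common variant, phase correlation, swapped in as an assumption. Each frame is cut into non-overlapping blocks, the normalized cross-power spectrum of each block pair is inverted with an inverse FFT, and the location of the correlation peak gives an integer-pixel displacement for that block. The function name `block_flow` and the 16-pixel default block size are hypothetical choices.

```python
import numpy as np


def block_flow(frame1, frame2, block=16):
    """Integer (u, v) displacement per non-overlapping block via FFT phase correlation."""
    rows, cols = frame1.shape[0] // block, frame1.shape[1] // block
    u = np.zeros((rows, cols))
    v = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            sl = (slice(i * block, (i + 1) * block), slice(j * block, (j + 1) * block))
            # Cross-power spectrum of the two blocks, normalized to unit magnitude
            cross = np.fft.fft2(frame2[sl]) * np.conj(np.fft.fft2(frame1[sl]))
            cross /= np.abs(cross) + 1e-12
            corr = np.real(np.fft.ifft2(cross))
            # The correlation peak sits at the shift that maps block 1 onto block 2
            dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
            # Indices past the half-way point wrap around to negative shifts
            if dy > block // 2:
                dy -= block
            if dx > block // 2:
                dx -= block
            u[i, j], v[i, j] = dx, dy
    return u, v
```

Because each block yields a single integer shift, the resulting field is coarse; practical implementations typically add windowing, overlapping blocks, and sub-pixel peak interpolation, none of which are shown here.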


Video of the optical flow for the Mecca sequence.

Video of the optical flow for the Pilgrims sequence.


ii) Flow Map Computation

The next step is to carry out particle advection under the influence of the stacked flow fields. To perform this step, a grid of particles is launched over the first flow field, and the particles are advected using a fourth-order Runge-Kutta-Fehlberg algorithm. For each of the following sequences, we show two videos: the first depicts the evolution of the x-coordinates of the particles, while the second depicts the evolution of their y-coordinates.
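The advection step can be sketched as follows. This is a minimal NumPy illustration rather than the authors' implementation, and it rests on several assumptions: the stacked flow fields are arrays `u`, `v` of shape (frames, height, width) in pixels per frame, with field `k` treated as the velocity at time `k`; one particle is launched per pixel; velocities are interpolated bilinearly in space and linearly in time, with sampling clamped at the image borders; and the classic fixed-step fourth-order Runge-Kutta scheme stands in for Runge-Kutta-Fehlberg, which additionally adapts the step size using an embedded fifth-order error estimate. The helper names `_bilinear`, `_velocity`, and `flow_map` are hypothetical.

```python
import numpy as np


def _bilinear(field, x, y):
    """Sample a 2D field (at least 2x2) at float positions (x = column, y = row), clamped."""
    h, w = field.shape
    x = np.clip(x, 0, w - 1)
    y = np.clip(y, 0, h - 1)
    # Upper-left corner of the enclosing cell, kept one cell away from the last row/column
    x0 = np.minimum(np.floor(x).astype(int), w - 2)
    y0 = np.minimum(np.floor(y).astype(int), h - 2)
    fx, fy = x - x0, y - y0
    return ((1 - fx) * (1 - fy) * field[y0, x0] + fx * (1 - fy) * field[y0, x0 + 1]
            + (1 - fx) * fy * field[y0 + 1, x0] + fx * fy * field[y0 + 1, x0 + 1])


def _velocity(u, v, x, y, t):
    """Velocity at positions (x, y) and time t: bilinear in space, linear in time."""
    n = u.shape[0]
    t = min(max(t, 0.0), n - 1.0)  # hold the first/last field outside the stack
    k0 = int(np.floor(t))
    k1 = min(k0 + 1, n - 1)
    a = t - k0
    vx = (1 - a) * _bilinear(u[k0], x, y) + a * _bilinear(u[k1], x, y)
    vy = (1 - a) * _bilinear(v[k0], x, y) + a * _bilinear(v[k1], x, y)
    return vx, vy


def flow_map(u, v, dt=1.0):
    """Advect one particle per pixel through the stacked fields with classic RK4."""
    n, h, w = u.shape
    y, x = np.mgrid[0:h, 0:w].astype(float)
    for step in range(int(round(n / dt))):
        t = step * dt
        k1x, k1y = _velocity(u, v, x, y, t)
        k2x, k2y = _velocity(u, v, x + 0.5 * dt * k1x, y + 0.5 * dt * k1y, t + 0.5 * dt)
        k3x, k3y = _velocity(u, v, x + 0.5 * dt * k2x, y + 0.5 * dt * k2y, t + 0.5 * dt)
        k4x, k4y = _velocity(u, v, x + dt * k3x, y + dt * k3y, t + dt)
        x = x + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
        y = y + dt / 6.0 * (k1y + 2 * k2y + 2 * k3y + k4y)
    return x, y  # flow maps: final x- and y-coordinates of each particle
```

The two returned arrays correspond to the X- and Y-particle flow maps shown in the videos; their spatial gradients are what enter the Cauchy-Green deformation tensor described in the algorithmic steps.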


X-Particle Flow Map / Y-Particle Flow Map

X-Particle Flow Map / Y-Particle Flow Map


Downloads


Video Presentation [220MB]


Related Links

The following list includes links to papers and websites that I found extremely useful while working on the project.
