Generalization Vs In camera WALT Optimization Comparision

Results showcasing generalization of our method trained on 12 cameras on a new camera compared to the results of retraining with the new camera data into the pipeline can be seen below.

Rendered Watch and Learn Time-Lapse(WALT) Dataset

We replicate the WALT Dataset using computer graphics rendering. We use this rendered dataset only to evaluate different segment of our algorithm. We use a parking lot 3D model and simulate object trajectories similar to the real-world parking lot. We render 1000 time-lapse images of the scene from multiple viewpoints. The cameras for rendering are placed on the dashboard of the vehicles or on infrastructure around the parking lot. Sample rendered images from the dataset are shown below.

More Details

For an in-depth description of WALT, please refer to our paper.

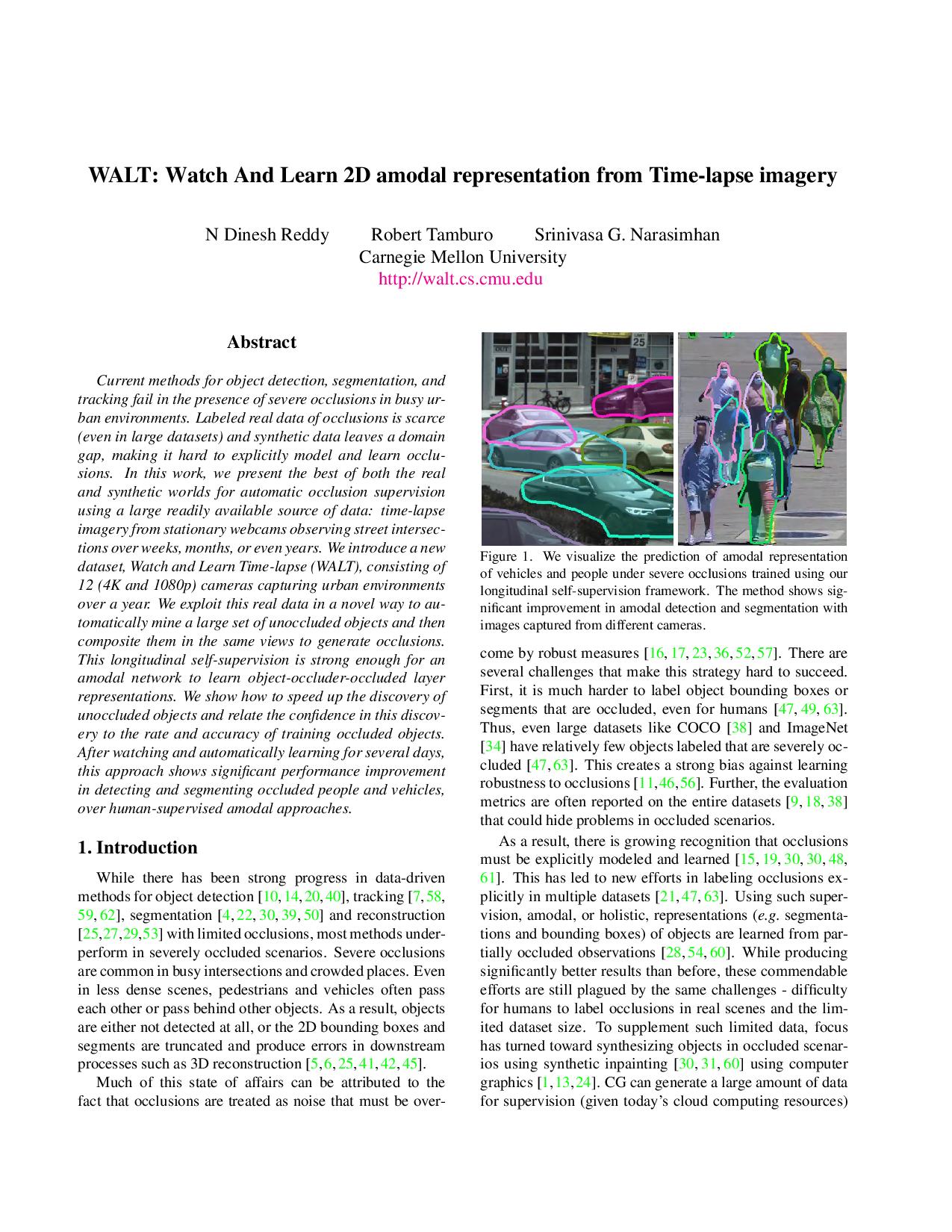

"WALT: Watch And Learn 2D Amodal Representation using Time-lapse Imagery",

N. Dinesh Reddy, Robert Tamburo, and Srinivasa NarasimhanIEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2022.

[pdf][supp][poster] [code] [bibtex]