Military Relevance

Calibration-free View Augmentation for Semi-Autonomous VSAMs

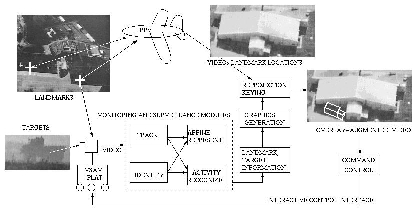

Mobile VSAM platforms are deployed in an area; perhaps an RPV platform circles above. Targets and their activities are sometimes visible, sometimes invisible to any given VSAM or the RPV. At a remote observation and command center, an augmented view is presented, featuring graphic overlays on the live video stream from a VSAM, RPV, or other observation post. In the augmented view, the targets, activities, or other features of interest appear visible "through walls", "through hills", or "through cover". The graphic overlays are correctly registered with the live video without open-loop platform calibration techniques or visible calibration objects.

The VSAM platforms are semi-autonomous, receiving intermittent commands from the center. The commander has a unified, interactive command and informational interface. He can annotate his computer-held representation of the surveillance area and interact with the graphical objects in his display. By interaction he can send high-level re-deployment or positioning commands to the VSAMs to allow reconfigurable surveillance, to ignore certain areas and focus on others, to form co-operative surveillance teams, etc. The VSAMs comply by using local computation and sensing. Such semi-autonomous ("deictic") control is more robust and practical than either full autonomy or full teleoperation.

The impact of the proposed core work is to increase the effectiveness of VSAM exploitation through the new visualization technique of view augmentation, which has produced dramatic improvements in operator performance in other domains (e.g., medicine). In an augmented view, the operator or field personnel see a graphic rendition of the target correctly registered with a live video stream or a canonical view, even if the target is obscured or hidden in the video.

The major impact and appeal of non-Euclidean (affine and projective) representations is that they render calibration unnecessary.
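As a rough illustration of why an affine representation avoids calibration, the sketch below reprojects a hidden target into the current video frame using only the tracked image positions of four non-coplanar basis points. It assumes an affine (weak-perspective) camera model, under which a point's image position is the same affine combination of the basis points' image positions as the point is of the basis points in space. The function name `reproject_affine` and the sample pixel values are hypothetical and are not taken from the proposal.

```python
def reproject_affine(basis_px, affine_coords):
    """Place an overlay point in the current image without camera calibration.

    basis_px      -- image positions [(u, v), ...] of four tracked, non-coplanar
                     basis points p0..p3 in the current frame (assumed input)
    affine_coords -- (x, y, z) coordinates of the target relative to that basis,
                     i.e. P = p0 + x*(p1 - p0) + y*(p2 - p0) + z*(p3 - p0)

    Under an affine camera the same combination holds for the projections,
    so no focal length, pose, or other calibration parameter is needed.
    """
    p0, p1, p2, p3 = basis_px
    x, y, z = affine_coords
    u = p0[0] + x * (p1[0] - p0[0]) + y * (p2[0] - p0[0]) + z * (p3[0] - p0[0])
    v = p0[1] + x * (p1[1] - p0[1]) + y * (p2[1] - p0[1]) + z * (p3[1] - p0[1])
    return (u, v)


# Hypothetical tracked basis points (pixels) in the current frame.
basis = [(100.0, 100.0), (200.0, 100.0), (100.0, 200.0), (150.0, 150.0)]

# A target whose affine coordinates were fixed earlier, while it was visible.
overlay_px = reproject_affine(basis, (0.5, 0.25, 2.0))
```

Because only the four basis points need to be tracked in each frame, the overlay stays registered as the platform moves, to the extent that the affine camera approximation holds.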

The impact of adaptive, context-based tracking techniques and Araujo's algorithm is that tracking becomes more accurate and more robust against dropout, prediction improves, and the techniques are more informative about target state.

The impact of new activity recognition algorithms is that important new types of activities are recognized (including impulsive activities like throwing an object, and transitional activities like starting or stopping a vehicle). Situational context constrains classifications and decreases type I and type II errors. Detecting and classifying activities allows natural distractions to be ignored and attention to be focused on processes of interest to the observer-commander.

The same graphics that augment the view provide a means for annotation of targets or regions of 3-D space and for interactivity; their impact is a unified observation and command interface for more effective deployment, positioning, cueing, and control of the semi-autonomous VSAMs.

This page is maintained by Mike Van Wie.

Last update: 11/11/96.