Research Projects in Omnidirectional Surveillance

Today, video surveillance and monitoring (VSAM) systems rely heavily

on conventional imaging systems as sources of visual information. A conventional imaging

system is severely limited in its field of view. It is only capable of acquiring visual

information through a relatively small solid angle subtended in front of the image

detector. To alleviate this problem, pan/tilt/zoom (PTZ) camera systems

are often employed. Though this enables a remote user to control the viewing direction of

the sensor, at any given instant, the field of view remains very limited. In short,

conventional VSAM systems have blind areas that are far larger than their visible areas.

Imagine that we had at our disposal an image sensor that could ``see'' in all

directions from its location (single viewpoint) in space, i.e. the entire ``sphere of

view''. We refer to such a sensor as being omnidirectional. It is easy to see that such a

device would have a profound impact on the nature of VSAM systems and their capabilities.

(a) An omnidirectional sensor would allow the VSAM system to be, at all times, aware of

its complete surrounding.

(b) Tracking a moving object would be feasible in software, without the need for any

moving parts (i.e. no panning, tilting, and rotating).

(c) Unlike physically tracking cameras, the omnidirectional sensor would have no

problem simultaneously detecting several objects (or intruders) in distinctly different

parts of its environment.

The goal of the omnidirectional VSAM research program at Columbia University

and Lehigh University is to create novel omnidirectional video sensors,

develop algorithms for omnidirectional visual processing, and use these sensors and

algorithms to build intelligent surveillance systems. Below, several research projects

within the omnidirectional VSAM program are briefly summarized. Most of these projects

are funded by DARPA's VSAM initiative. Some of the projects are funded in part by the

National Science

Foundation, the David and Lucile Packard Foundation, and an ONR MURI Grant.

Omnidirectional Video Cameras

There are several ways to enhance the field of view of an imaging system. Our approach

is to incorporate reflecting surfaces (mirrors) into conventional imaging systems that use

lenses. This is what we refer to as a catadioptric imaging system. It is easy to see that

the field of view of a catadioptric system can be varied by changing the shape of the

mirror it uses. However, the entire imaging system must have a single effective viewpoint

to permit the generation of pure perspective images from a sensed image. At Columbia

University, a new camera with a hemispherical field of view has been developed. Two such

cameras can be placed back-to-back, without violating the single viewpoint constraint, to

arrive at a truly omnidirectional

sensor. Columbia's camera uses an optimized optical design that includes a parabolic

mirror and a telecentric lens. It turns out that, in order to achieve high optical

performance (resolution, for example), the mirror and the imaging lens system must be

matched and the device must be carefully implemented. Several early prototypes of

Columbia's omnidirectional camera are shown below. Further information related to

omnidirectional image sensing can be found here.
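
The single-viewpoint geometry of a parabolic mirror viewed through a telecentric lens can be illustrated with a minimal sketch. It assumes the mirror is the paraboloid z = (h^2 - r^2) / (2h) with its focus at the origin, and that the telecentric lens behaves as an orthographic projection along the mirror axis. The parameter `h`, the function names, and the axis conventions are assumptions made for illustration; they are not taken from the actual camera design.

```python
import math


def mirror_point(direction, h=1.0):
    """Point where a ray leaving the focus (the single viewpoint) meets the mirror.

    Assumed mirror: z = (h^2 - r^2) / (2h), focus at the origin, axis along +z.
    In polar form the mirror is rho = h / (1 + cos(theta)), with theta measured
    from the +z axis.
    """
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / norm, y / norm, z / norm
    if 1.0 + z < 1e-12:
        raise ValueError("a ray along -z never meets the mirror")
    rho = h / (1.0 + z)  # cos(theta) equals the z component of the unit ray
    return (rho * x, rho * y, rho * z)


def parabolic_project(direction, h=1.0):
    """Image-plane coordinates of a viewing direction.

    Orthographic projection along the mirror axis simply drops the z coordinate
    of the mirror point, giving a radius of h * tan(theta / 2).
    """
    px, py, _ = mirror_point(direction, h)
    return (px, py)
```

Under these assumptions, directions perpendicular to the mirror axis land on a circle of radius `h` in the image, and the direction along the axis lands at the image center.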

References:

"Omnidirectional Video Camera," Shree K. Nayar, Proc. of DARPA

Image Understanding Workshop, New Orleans, May 1997.

"Catadioptric Omnidirectional Camera," Shree K. Nayar, Proc. of

IEEE Conference on Computer Vision and Pattern Recognition,

Puerto Rico, June 1997.

A Complete Class of Catadioptric Cameras

As mentioned above, a catadioptric sensor uses a combination of lenses and mirrors

placed in a carefully arranged configuration to capture a much wider field of view while

maintaining a single fixed viewpoint. A single viewpoint is desirable because it permits

the generation of pure perspective images from the sensed image(s). The parabolic

catadioptric system described in the previous project is an example of such a single

viewpoint system. In more recent work, we derived the complete class of single-lens

single-mirror catadioptric sensors, which have single viewpoints. Some of the solutions

turn out to be degenerate with no practical value, while other solutions lead to

realizable sensors. We have also derived an expression for the spatial resolution of a

catadioptric sensor in terms of the resolution of the image detector used to construct it.

Finally, we have conducted a preliminary analysis of the defocus blur caused by the use of

a curved mirror. Our results provide insights into the merits and drawbacks of previously

proposed catadioptric cameras as well as draw our attention to some unexplored designs.

References:

"Catadioptric Image Formation," Shree K. Nayar and Simon Baker,

Proc. of DARPA Image Understanding Workshop, New Orleans, May 1997.

"A Theory of Catadioptric Image Formation,"

Simon Baker and Shree K. Nayar, Proc. of IEEE International

Conference on Computer Vision, Bombay, January 1998.

"A Theory of Catadioptric Image Formation,"

Simon Baker and Shree K. Nayar, Technical Report,

Department of Computer Science, Columbia University,

November 1997.

Software Generation of Perspective and Panoramic Video

Interactive visualization systems, such as Apple's QuickTime VR, allow a user to

navigate around a visual environment. This is done by simulating a virtual camera whose

parameters are controlled by the user. A limitation of existing systems is that they are

restricted to static environments, i.e. a single wide-angle image of a scene. The static

image is typically obtained by stitching together several images of a static scene taken

by rotating a camera about its center of projection. Alternatively, a wide-angle capture

device is used to acquire the image. Our video-rate omnidirectional camera makes it

possible to acquire wide-angle images at video rate. This has motivated us to develop a

software system that can create perspective and panoramic video streams from an

omnidirectional one. This capability adds a new dimension to the concept of remote visual

exploration. Our software system, called omnivideo, can generate (at 30 Hz) a large number

of perspective and panoramic video streams from a single omnidirectional video input,

using no more than a PC. A remote user can control the viewing parameters (viewing

direction, magnification, and size) of each perspective and panoramic stream using an

interactive device such as a mouse or a joystick. The output of the omnivideo system (as

seen on a PC screen) is shown below. Further details related to omnivideo can be found here. Our current work is

geared towards the incorporation of a variety of image enhancement techniques into the

omnivideo system.
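
The idea of computing a perspective stream from an omnidirectional one can be sketched as a per-view lookup table that is built once and reused for every frame. This is not the omnivideo implementation: it is a minimal illustration that assumes a parabolic mirror whose image radius is `h_pixels * tan(theta / 2)`, a known image center, nearest-neighbor sampling, and hypothetical function names and angle conventions (pan about the mirror axis, tilt as elevation toward it).

```python
import numpy as np


def perspective_lookup(pan_deg, tilt_deg, fov_deg, out_size, h_pixels, center):
    """Return (map_x, map_y): omni-image coordinates for each output pixel."""
    width, height = out_size
    pan, tilt = np.radians(pan_deg), np.radians(tilt_deg)
    focal = 0.5 * width / np.tan(np.radians(fov_deg) / 2.0)

    # Virtual camera axes expressed in the mirror frame (+z is the mirror axis).
    forward = np.array([np.cos(tilt) * np.cos(pan),
                        np.cos(tilt) * np.sin(pan),
                        np.sin(tilt)])
    right = np.array([np.sin(pan), -np.cos(pan), 0.0])
    down = np.cross(forward, right)

    # One ray per output pixel, measured from the center of the virtual image.
    u, v = np.meshgrid(np.arange(width) - (width - 1) / 2.0,
                       np.arange(height) - (height - 1) / 2.0)
    rays = u[..., None] * right + v[..., None] * down + focal * forward
    rays /= np.linalg.norm(rays, axis=-1, keepdims=True)

    # Parabolic projection: rho = h / (1 + cos(theta)); guard the -z direction.
    rho = h_pixels / np.maximum(1.0 + rays[..., 2], 1e-9)
    return center[0] + rho * rays[..., 0], center[1] + rho * rays[..., 1]


def render_view(omni_frame, map_x, map_y):
    """Sample the omnidirectional frame with nearest-neighbor lookup."""
    xi = np.clip(np.rint(map_x).astype(int), 0, omni_frame.shape[1] - 1)
    yi = np.clip(np.rint(map_y).astype(int), 0, omni_frame.shape[0] - 1)
    return omni_frame[yi, xi]
```

Because the maps depend only on the viewing parameters, they need to be recomputed only when the user changes direction, magnification, or size; each incoming frame then costs a single indexed lookup per view.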

References:

"Generation of Perspective and Panoramic Video from Omnidirectional Video,"

Venkata N. Peri and Shree K. Nayar, Proc. of DARPA

Image Understanding Workshop, New Orleans, May 1997.

"Omnidirectional Video Processing,"

Venkata N. Peri and Shree K. Nayar,

Proc. of IROS U.S.-Japan Graduate Student Forum on Robotics, Osaka,

November 1996.

Remote Reality

The generation of a perspective view within a small window of a PC provides one type of

interface. Over the past six months we have made advances in both the speed with which one

can change the desired viewpoint and the speed of image generation. These advances made it

practical for us to increase the field of view generated and allowed us to integrate it with

a Head-Mounted-Display and head-tracker. The result is a system for visualization of

"live" action from a remote omnidirectional camera, i.e. a system for Remote

Reality.

This remote reality environment allows the user to naturally look around, within the

hemispherical field of view of the omnidirectional camera, and see objects/action at the remote

location.

If the omnidirectional video is recorded, say using a standard camcorder, the

visualization can be remote in both space and time. Currently the system provides 320x240

resolution color images at 30 frames per second (fps), with position updates from the

head-tracker at between 15 and 30 fps. While the resolution is limited compared to today's

high end graphics simulators, we believe this has much to offer for training and very

significant advantages for very short turnaround "VR model acquisition" for

in situ training and mission rehearsal. The system is quite inexpensive. The

HMD/display system costs under $3000 for us to build from Common-Off-The-Shelf (COTS)

components, with the omnidirectional recording system costing about the same. The inset image

shows the HMD component of the system and an omnidirectional camera on a vehicle mounting

bracket.

For general training, the system has the advantage of providing very realistic, albeit

lower resolution, motion/action while not limiting the user's viewing direction. The

omnidirectional camera can be mounted on a vehicle or carried in a field pack to allow

remote users to better experience the field conditions. Further, its low cost, combined with

the ease with which one can acquire a new training "environment", would mean

that it could be used at local facilities and maintained/customized with very little

training. It can also be used for recording/review of training exercises.

For pre-mission rehearsal, the system provides a unique capability. If an

omnivideo were acquired by a vehicle driving through an area of interest and/or by a

very-low altitude UAV fly-through, then an in-field remote-reality system could provide in

situ rehearsal where users could review the site, in any direction. As the system could

either use live video transmissions from the remote camera, or if more practical, a

recorded tape, the "turn-around time" from acquiring the data to mission

rehearsal would be minimal. With a monocular display and the next generation of

wearable computers, the system could be extended to allow a group of mobile agents to

independently view remote sites, e.g. with a robotic vehicle carrying the camera into

forward locations.

The evaluation of this work has two components. The first is to ensure that the

design constraints (30 fps video and 15 fps head tracking) are met. The second is

tied to image quality, which will be evaluated in other aspects of this project. Over the next

few months we will continue to enhance the software, to port it to Windows-NT (it is

currently Linux-based), and to increase the range of HMDs and head-trackers that are

supported. We are also investigating, at a lower priority, auditory processing and

software to aid in better and easier augmentation of the remote environments

with information such as textual labels or active icons.

Omnidirectional Monitoring Over the Internet

The Internet can serve as a powerful platform for video monitoring. When the scenes of

interest are truly remote, it is hard to access the outputs of the cameras located in the

scene. In such cases, a client-server architecture that facilitates interactive video is

attractive. Today, there are quite a few video cameras that are hooked up to the Internet.

Images produced by such a camera can be accessed by a remote user with the push of a

button. In some cases, the orientation of the camera can also be controlled remotely.

Unfortunately, clients must be scheduled (say, on a first-come, first-served basis); if two

users wish to interact with the camera at the same time, one must be served first while

the other waits. This greatly limits the extent of interaction possible. Our

omnidirectional cameras and the accompanying software provide a powerful means for remote

visual monitoring. Since the camera captures an omnidirectional field of view, each remote

viewer can request his/her own view, which is efficiently computed from the same

omnidirectional image. Scores of users can therefore be serviced simultaneously, without

any conflicts. In addition, we have used

state-of-the-art video compression and transmission techniques to ensure that users can

view and manipulate their video streams close to real-time (a good link provides about 5

frames a second). A live demonstration of the first omnidirectional web-camera can be seen

here.

Catadioptric Stereo

Catadioptric stereo, as the name suggests, uses mirrors to capture multiple views of a

scene, simultaneously. Based on our results on catadioptric image formation, we have

studied a class of stereo systems that use multiple mirrors, a single lens, and a single

camera. When compared to conventional stereo systems that use two cameras, this approach

has a number of significant advantages such as wide field of view, identical camera

parameters and ease of calibration. While a variety of mirror shapes can be used to obtain

the multiple stereo views, it is convenient from a computational perspective to ensure

that each view is captured from a single viewpoint. We have analyzed four such stereo

systems. They use a single camera pointed towards planar, ellipsoidal, hyperboloidal, and

paraboloidal mirrors, respectively. In each case, we have derived epipolar constraints. In

addition, we have studied exactly what can be seen by each system and formalized the

notion of field of view. In particular, we have implemented two catadioptric stereo

systems and conducted experiments to obtain 3-D structure. Below you see sketches of the

four different catadioptric stereo systems. Also shown is an image captured by a parabolic

stereo system and a depth map computed from it.

References:

"Sphereo: Recovering Depth Using a Single Camera and Two Specular Spheres,"

Shree K. Nayar, Proc. of SPIE: Optics, Illumination, and Image Sensing

for Machine Vision II, Cambridge, November 1988.

"Stereo Using Mirrors," Sameer Nene and Shree K. Nayar,

Proc. of IEEE International Conference on Computer Vision,

Bombay, January 1998.

"Stereo Using Mirrors," Sameer Nene and Shree K. Nayar,

Technical Report,

Department of Computer Science, Columbia University,

November 1997.

A Handy Stereo Camera

We have implemented a compact portable version of our catadioptric stereo design that

uses planar mirrors. The complete stereo system is shown below. It uses two planar mirrors

that are hinged together. The angles of the two mirrors can be adjusted independently to

vary the field of view of the stereo system.

A single Hi-8 video camera records reflections from the two planar mirrors. The camera

has a built in zoom lens that permits further control of the stereo field of view. The

baseline of the stereo system is determined by the angles of the mirrors with respect to the

optical axis of the camera and the distance of the camera from the mirrors.
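
The dependence of the baseline on mirror angle and camera distance follows from the fact that each planar mirror creates a virtual camera at the reflection of the real camera center. A minimal sketch, assuming each mirror is given as a plane `n . x = d` in the camera's coordinate frame (the function names and this parameterization are illustrative assumptions):

```python
import numpy as np


def reflect_point(point, normal, offset):
    """Reflect a 3-D point across the plane {x : normal . x = offset}."""
    p = np.asarray(point, dtype=float)
    n = np.asarray(normal, dtype=float)
    length = np.linalg.norm(n)
    if length == 0.0:
        raise ValueError("plane normal must be non-zero")
    n, d = n / length, offset / length  # rescale so that n is a unit vector
    return p - 2.0 * (n @ p - d) * n


def stereo_baseline(camera_center, mirror_a, mirror_b):
    """Distance between the two virtual camera centers.

    Each mirror is a (normal, offset) pair describing its plane.
    """
    virtual_a = reflect_point(camera_center, *mirror_a)
    virtual_b = reflect_point(camera_center, *mirror_b)
    return float(np.linalg.norm(virtual_a - virtual_b))
```

Tilting either mirror or moving the camera away from the mirrors changes the two reflected centers, and with them the baseline.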

An image of an object produced by the stereo system is shown below. Since both views of

the scene are captured using the same camera, the image centers, the focal lengths, aspect

ratios, and gamma functions

for both views are identical. This makes stereo calibration much simpler than in a

two-camera stereo system, even when the positions and orientations of the mirrors are

unknown. Furthermore, both views

are captured at the same instant and hence there are no synchronization issues to be

dealt with. The calibration simply involves computing the rotation and translation between

the two virtual camera coordinate

frames corresponding to the two mirrors. These unknowns are easily computed by

selecting a small number of corresponding point pairs in the two views and then computing the

fundamental matrix. The results of such a calibration are shown below. The computed

fundamental matrix is used to plot epipolar lines in the right view for the marked points

in the left view. Also shown are the computed depth map, its texture-mapped rendering, and a novel view

of the depth map.
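
Estimating a fundamental matrix from a handful of correspondences and using it to draw epipolar lines can be sketched with the standard normalized eight-point algorithm. The text does not say which estimator was actually used, so this choice, the function names, and the requirement of at least eight point pairs are assumptions made for illustration.

```python
import numpy as np


def _normalize(points):
    """Translate and scale points so their mean distance from the origin is sqrt(2)."""
    centroid = points.mean(axis=0)
    scale = np.sqrt(2.0) / np.mean(np.linalg.norm(points - centroid, axis=1))
    transform = np.array([[scale, 0.0, -scale * centroid[0]],
                          [0.0, scale, -scale * centroid[1]],
                          [0.0, 0.0, 1.0]])
    homogeneous = np.column_stack([points, np.ones(len(points))])
    return (transform @ homogeneous.T).T, transform


def fundamental_matrix(left, right):
    """Estimate F such that x_right^T F x_left = 0 for corresponding points."""
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    if len(left) < 8 or len(left) != len(right):
        raise ValueError("need at least 8 matched point pairs")
    x1, t1 = _normalize(left)
    x2, t2 = _normalize(right)

    # Each correspondence contributes one linear equation in the 9 entries of F.
    a = np.column_stack([x2[:, 0] * x1[:, 0], x2[:, 0] * x1[:, 1], x2[:, 0],
                         x2[:, 1] * x1[:, 0], x2[:, 1] * x1[:, 1], x2[:, 1],
                         x1[:, 0], x1[:, 1], np.ones(len(x1))])
    _, _, vt = np.linalg.svd(a)
    f = vt[-1].reshape(3, 3)

    # Enforce rank 2 so that all epipolar lines meet at a single epipole.
    u, s, vt = np.linalg.svd(f)
    s[2] = 0.0
    f = u @ np.diag(s) @ vt

    f = t2.T @ f @ t1  # undo the normalization
    return f / np.linalg.norm(f)


def epipolar_line(f, left_point):
    """Coefficients (a, b, c) of the line a*x + b*y + c = 0 in the right view."""
    a, b, c = f @ np.array([left_point[0], left_point[1], 1.0])
    return a, b, c
```

For each marked point in the left view, `epipolar_line` gives the line in the right view on which its match must lie, which is what restricts the stereo search to one dimension.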

Combined Omnidirectional and Pan/Tilt/Zoom System

This project is geared towards the use of an omnidirectional imaging system to guide

(or control) one or more conventional pan/tilt/zoom (PTZ) imaging systems. Such a combined

system has several advantages. While the omnidirectional camera provides relatively

low-resolution images compared to a narrow field-of-view camera, it is able to provide a

complete view of activities in an area. The omnidirectional video can therefore be used to

drive conventional PTZ systems (popularly known as "domes" in the security

industry). The control can either be manual or automatic. In the manual mode, interesting

regions of activity are selected by clicking on the omnidirectional video display. A

pre-computed map that relates coordinates in

the omnidirectional and PTZ systems is used to orient the PTZ system to provide a

high-quality video stream of the region of interest. In the automatic mode, a motion

detection algorithm is used to detect moving objects

in the omnidirectional video. Since there can be several moving objects, a scheduling

algorithm is used to determine the sequence in which the PTZ system watches the moving

objects as well as determine the time it devotes to each object. Below, the combined

omnidirectional and PTZ system is shown. The omnidirectional camera hangs beneath the PTZ

system. Our current work is geared towards developing sophisticated control laws for the

trajectories of the PTZ system, the use of approximate site models to determine the

mapping between the omnidirectional and PTZ system, the design of novel scheduling

algorithms that would emulate different surveillance behaviors and the scaling of the

current system to one that includes multiple omnidirectional and PTZ systems.
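
The two control ingredients described above, a coordinate map from the omnidirectional image to PTZ angles and a schedule over several moving objects, can be sketched as follows. This is a simplified illustration rather than the system's method: it replaces the pre-computed map with a closed-form one that assumes a parabolic omnidirectional camera whose viewpoint and axis coincide with the PTZ unit's, and the proportional dwell-time policy and all function names are hypothetical.

```python
import math


def omni_pixel_to_pan_tilt(px, py, center, h_pixels):
    """Map a clicked omni-image pixel to (pan, tilt) in degrees.

    Assumes image radius r = h_pixels * tan(theta / 2), where theta is the
    angle from the mirror axis, and a PTZ unit co-located with the viewpoint.
    Tilt is returned as elevation above the plane perpendicular to the axis.
    """
    dx, dy = px - center[0], py - center[1]
    theta = 2.0 * math.atan(math.hypot(dx, dy) / h_pixels)
    pan = math.degrees(math.atan2(dy, dx))
    tilt = 90.0 - math.degrees(theta)
    return pan, tilt


def schedule_targets(targets, cycle_seconds):
    """Order targets and split one viewing cycle among them.

    targets: list of (target_id, area_in_pixels). Larger regions are visited
    first and receive proportionally more time (an illustrative policy only).
    """
    total_area = sum(area for _, area in targets)
    if total_area <= 0:
        return []
    ordered = sorted(targets, key=lambda item: item[1], reverse=True)
    return [(target_id, cycle_seconds * area / total_area)
            for target_id, area in ordered]
```

In a real installation the two sensors are offset from each other, which is why a calibrated lookup map or an approximate site model is needed in place of the closed-form mapping.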

Omnidirectional Egomotion Analysis

An application that clearly benefits from a wide field of view is the computation of

ego-motion. Traditional cameras suffer from the problem that the direction of translation

may lie outside of the field of view of the

camera, making the computation of camera motion sensitive to noise. We have developed a

method for the recovery of ego-motion using omnidirectional cameras. Noting the

relationship between spherical projection and wide-angle imaging devices, we propose

mapping the local image velocity vectors to a sphere, using the Jacobian of the

transformation between the projection model of the camera and spherical projection. Once

the velocity vectors are mapped to a sphere, we have shown how existing ego-motion

algorithms can be applied to recover the motion parameters (rotation and translation). We

are currently exploring real-time variants of our optical flow and egomotion algorithms.

These algorithms would have direct applications such as navigation, dead reckoning,

stabilization, time to collision estimation, obstacle avoidance, and 3D structure

estimation.
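
Mapping image velocities to the sphere through a Jacobian can be illustrated for one specific projection model. The sketch below assumes a parabolic catadioptric camera with image coordinates `(u, v)` already centered and divided by the mirror parameter, so that the map from image to unit sphere is the inverse stereographic projection; the function names are hypothetical and other camera models would need their own Jacobian.

```python
import numpy as np


def pixel_to_sphere(u, v):
    """Unit-sphere point seen at normalized image coordinates (u, v)."""
    d = 1.0 + u * u + v * v
    return np.array([2.0 * u / d, 2.0 * v / d, (1.0 - u * u - v * v) / d])


def sphere_jacobian(u, v):
    """3x2 Jacobian of pixel_to_sphere with respect to (u, v)."""
    d = 1.0 + u * u + v * v
    return np.array([[2.0 * (d - 2.0 * u * u), -4.0 * u * v],
                     [-4.0 * u * v, 2.0 * (d - 2.0 * v * v)],
                     [-4.0 * u, -4.0 * v]]) / d ** 2


def flow_to_sphere(u, v, du, dv):
    """Map an image velocity (du, dv) at (u, v) to a velocity on the sphere."""
    return sphere_jacobian(u, v) @ np.array([du, dv])
```

The mapped velocity is tangent to the sphere at the corresponding point, which is the form of input that ego-motion algorithms formulated for spherical projection expect.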

References:

"Egomotion and Omnidirectional Sensors,"

Joshua Gluckman and Shree K. Nayar,

Proc. of IEEE International Conference on Computer Vision,

Bombay, January 1998.

Smooth Object Tracking without Moving Parts

This project is geared towards developing algorithms for smooth tracking of individual

objects moving within the omnidirectional field of view. The key idea is to use our

omnivideo software system to create a perspective video stream that is focused on a moving

object; i.e. the viewing direction and magnification are updated so as to keep the tracked

object in the center of the computed perspective stream. This algorithm uses a combination

of differencing and background subtraction, followed by sequential labeling, to find large

moving regions. Simple temporal analysis is done to keep the labels of moving objects

consistent over frames. A Kalman filter is then employed to generate smooth trajectories

for the viewing parameters. These parameters are used in real-time to update the

perspective video stream. The system therefore behaves very much like a pan/tilt/zoom

system but without any moving parts. Below, a perspective video stream is shown that is

focused on a fast moving object. We are currently exploring ways to use our computed

trajectories to smoothly control a pan/tilt/zoom system.
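
The smoothing step can be illustrated with a small constant-velocity Kalman filter applied to a single viewing parameter, such as the pan angle of the virtual camera. The state model, noise values, and class name below are assumptions chosen for illustration, and angle wraparound is ignored; the source does not specify the filter's actual design.

```python
import numpy as np


class ConstantVelocityKalman:
    """Kalman filter with state [value, rate] and a direct measurement of value."""

    def __init__(self, initial_value, dt=1.0 / 30.0, process_var=1.0, meas_var=4.0):
        self.x = np.array([float(initial_value), 0.0])
        self.P = np.eye(2) * 100.0  # large initial uncertainty
        self.F = np.array([[1.0, dt], [0.0, 1.0]])
        self.H = np.array([[1.0, 0.0]])
        # Process noise for an unknown, randomly varying acceleration.
        self.Q = process_var * np.array([[dt ** 4 / 4.0, dt ** 3 / 2.0],
                                         [dt ** 3 / 2.0, dt ** 2]])
        self.R = np.array([[meas_var]])

    def update(self, measurement):
        """Advance one frame, fold in the measurement, return the smoothed value."""
        # Predict the state one frame ahead.
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        # Correct the prediction with the new measurement.
        innovation = measurement - (self.H @ self.x)[0]
        s = self.H @ self.P @ self.H.T + self.R
        gain = (self.P @ self.H.T) / s[0, 0]
        self.x = self.x + gain[:, 0] * innovation
        self.P = (np.eye(2) - gain @ self.H) @ self.P
        return float(self.x[0])
```

One filter instance per viewing parameter (for example pan, tilt, and magnification) would be fed the raw per-frame object position, and the returned values would drive the perspective stream.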

Frame-Rate Multi-Body OmniDirectional Tracking

Fast tracking is an important component of automated video surveillance systems. The

non-traditional geometry of the omnidirectional sensor means that many existing tracking

algorithms, which presume motion is well approximated by translation in the image, cannot

be used. Thus a significant component of the first 6 months of our project has been the

design and implementation of a low-level tracking system for omnidirectional images.

The omnidirectional tracker is designed to handle multiple independent moving bodies

undergoing non-rigid motion. The algorithm is based on background subtraction followed by

connected component labeling, with subtle differences from previous work to better handle

the variations of outdoor scenes, tracking very small objects and fast moving objects. The

tracker can localize moving objects in the omnidirectional image and generate perspective

views centered on each object. The system runs at 30fps on a Pentium II class processor

when tracking and generating a few perspective images.
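
Background subtraction followed by connected component labeling can be sketched in a few lines. This is a generic textbook version, not the tracker described here: it assumes grayscale frames, a fixed threshold, 8-connectivity, and a running-average background, and the function names and parameter values are hypothetical. The refinements for outdoor scenes and for very small or fast objects are not modeled.

```python
from collections import deque

import numpy as np


def moving_regions(frame, background, threshold=25, min_area=4):
    """Return bounding boxes (x_min, y_min, x_max, y_max) of moving regions."""
    diff = np.abs(frame.astype(np.int32) - background.astype(np.int32))
    mask = diff > threshold
    labels = np.zeros(mask.shape, dtype=np.int32)
    rows, cols = mask.shape
    boxes = []
    next_label = 0
    for r0, c0 in zip(*np.nonzero(mask)):
        if labels[r0, c0]:
            continue  # pixel already belongs to a labeled component
        next_label += 1
        labels[r0, c0] = next_label
        queue = deque([(r0, c0)])
        pixels = []
        while queue:  # breadth-first flood fill over 8-connected neighbors
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < rows and 0 <= cc < cols
                            and mask[rr, cc] and not labels[rr, cc]):
                        labels[rr, cc] = next_label
                        queue.append((rr, cc))
        if len(pixels) >= min_area:  # discard isolated noise pixels
            rs, cs = zip(*pixels)
            boxes.append((int(min(cs)), int(min(rs)), int(max(cs)), int(max(rs))))
    return boxes


def update_background(background, frame, alpha=0.05):
    """Blend the new frame into the background model (running average)."""
    return (1.0 - alpha) * background + alpha * frame
```

Each returned box can then be converted to a viewing direction to generate a perspective view centered on that object.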

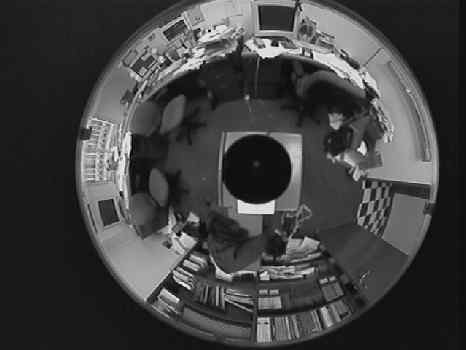

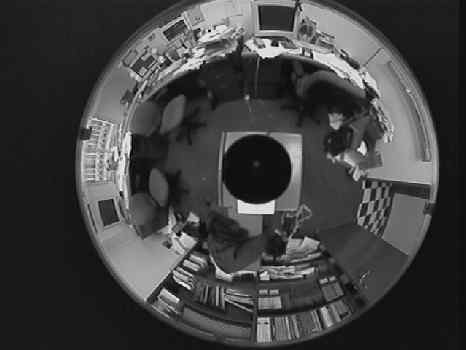

The example here shows an omnidirectional image on Lehigh's campus with

"boxes" superimposed on all the tracked regions. The four windows at the bottom

show the four most significant targets. The targets are (left to right): a car and three

different people walking. Rounded boxes and arrows were added to highlight the

relationships. (Note: web-encoding reduces image quality :-)

Image showing omnidirectional tracker and generated views.

The current software will provide a base for the more significant research issues we

will address over the next few months. The ongoing research includes:

- How to better adapt to changing outdoor conditions and how to evaluate such algorithms.

- How to selectively ignore "unimportant" motions such as swaying trees.

- How to increase the length of time over which an object's identity is maintained, especially when

it is first "seen".

- How to provide an aid in focus of attention among a large number of moving targets,

including how to smoothly control the virtual "camera view" and when to switch

views between targets.

OmniMovie Compression

An omnidirectional image sequence provides some unique advantages and also unique

challenges for compression. For surveillance applications, the omnidirectional nature

removes the need for a continuously panning/tilting camera. Thus the omnidirectional image

stream provides wide-field coverage while maintaining a mostly static image sequence. For

the quasi-static regions traditional MPEG compression of the difference from the reference

image works reasonably well. However when there is motion, either of objects

or of the camera, MPEG compression starts to break down. The fundamental problem is

that MPEG is strongly biased toward motions that are well approximated as pure

translational motion within the image. Unfortunately, object/camera translations in the

world are not well approximated by translations in the omnidirectional image, thereby

limiting the image quality and compression rate of MPEG compressed omnidirectional scenes,

especially for a moving omnicamera.

We are currently exploring different schemes to provide lower-bandwidth and higher

quality compression. The approach currently under investigation is a generalization of

MPEG to allow different spatial "biases". Rather than considering a 3x3 regular

grid of potential "motions", we consider 9 more generally specified regions to

which a block could move; in an omnidirectional image sequence these regions include a

significant rotation component. The basic compression algorithm is then very MPEG-like:

For each 8x8 block, compute the difference between that block and each of the 9

"predicted locations" from the previous frame and then encode the

"direction" of motion and DCT compress the difference image. The predicted

locations are spatially varying but temporally constant making them easy to encode. Since

for the surveillance applications we will already be doing object tracking, and probably

transmitting some representation of object motion, we will be exploring using this

tracking data to further bias the "motion". The quality and performance of this

algorithm are currently being evaluated, and the evaluation is expected to be finished in the next few

months.
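
The per-block step of such a scheme can be sketched as follows. This is a rough illustration under several assumptions that do not come from the text: candidate regions are modeled as nine whole-block offsets obtained by rotating the block center about the image center and shifting it radially, the match cost is the sum of absolute differences, and the residual is transformed with an orthonormal DCT-II. All names and parameter values are hypothetical.

```python
import numpy as np

BLOCK = 8


def dct_matrix(n=BLOCK):
    """Orthonormal DCT-II basis as an n x n matrix."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)
    return m


def rotational_candidates(top, left, center, dtheta=0.05, dr=2.0):
    """Nine (dy, dx) offsets for this block: 3 rotations x 3 radial shifts.

    center is (row, column). The offsets depend only on block position, so
    they vary across the image but stay the same from frame to frame.
    """
    by = top + BLOCK / 2 - center[0]
    bx = left + BLOCK / 2 - center[1]
    radius, angle = np.hypot(by, bx), np.arctan2(by, bx)
    offsets = []
    for da in (-dtheta, 0.0, dtheta):
        for ds in (-dr, 0.0, dr):
            ny = (radius + ds) * np.sin(angle + da)
            nx = (radius + ds) * np.cos(angle + da)
            offsets.append((int(round(ny - by)), int(round(nx - bx))))
    return offsets


def encode_block(current, previous, top, left, candidates):
    """Pick the best predicted location and DCT-transform the residual.

    Returns (candidate_index, coefficients).
    """
    block = current[top:top + BLOCK, left:left + BLOCK].astype(float)
    best = None
    for index, (dy, dx) in enumerate(candidates):
        r, c = top + dy, left + dx
        if r < 0 or c < 0 or r + BLOCK > previous.shape[0] or c + BLOCK > previous.shape[1]:
            continue  # candidate falls outside the previous frame
        residual = block - previous[r:r + BLOCK, c:c + BLOCK]
        cost = np.abs(residual).sum()
        if best is None or cost < best[0]:
            best = (cost, index, residual)
    if best is None:
        raise ValueError("no candidate lies inside the previous frame")
    _, index, residual = best
    d = dct_matrix()
    return index, d @ residual @ d.T
```

Only the candidate index and the quantized coefficients would need to be transmitted per block, since the decoder can regenerate the same candidate offsets from the block position alone.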

SuperResolution OmniImages

With a 360 degree by 180 degree field of view, all packed within a standard video

image, resolution is often an issue. To help mitigate this, we are developing algorithms

that combine tracking, high-quality image warping, and robust estimation to produce

"super-resolution" images. We have already demonstrated our techniques for more

traditional sensors; see our DARPA Image

Understanding Workshop paper for a summary of our two different approaches, as well as

our detailed web documents and papers:

- Ming-Chao Chiang and

Terrance E. Boult, "Local Blur Estimation and

Super-Resolution," CVPR '97.

- Ming-Chao Chiang and

Terrance E. Boult, "Efficient Image Warping and Super-Resolution,"

Proceedings of the Third IEEE Workshop on Applications of Computer Vision, pages

56-61, Dec 1996.

- Ming-Chao Chiang and

Terrance E. Boult, "The Integrating Resampler and Efficient Image

Warping," Proceedings of the ARPA Image Understanding Workshop, pages

843-849, Feb 1996.

We are currently concluding a quantitative evaluation of the image-based technique

using recognition rates as the primary metric. Over the next six months we will be

adapting the technique to the omnidirectional sensor, where the warping and blurring

models need to be more complex. Outdoor lighting issues should be addressed with our

edge-based technique, though it will be limited by the quality of matching with varying

shadows and non-rigid objects.

Live Images in the IUE and Distributed IUE

Over the past few months we have been working on extending the IUE to better support

VSAM applications. As VSAM applications tend to be near real time, we needed to provide a

means of interaction with a smaller/faster subset of the IUE. We have prototyped a new set

of classes for image filtering which will make it possible to develop standalone image

processing oriented programs with no IUE linkage but which can be recompiled/linked to

allow full IUE interactions. These classes are highly templated, including the input and

output image types, and they allow "non-IUE" image representations to be used.

This should allow more rapid integration of this IUE subset into existing systems, with

the benefit that the resulting classes will then be usable within the IUE. Over the next

few months we will extend our prototype to include a frame grabber interface with

processing via IUE "callbacks", and then extend the image classes to provide for

bounded ring buffer type storage. We will also be porting our tracking code to the IUE to

ensure the extensions will provide support.

A second aspect of our IUE related work has been addressing distributed communication

and processing using the IUE. We have built upon MPI,

a low-level message passing interface, to allow communication of IUE objects between a

collection of processes, with the IUE Data Exchange providing for serialization.

Over the next six months we will be developing a "string-oriented" remote access

mechanism that will permit remote function execution, and over the next year we will

explore the development of a CORBA wrapper for the IUE.