ShapeMap 3-D: Efficient shape mapping through dense touch and vision

ICRA 2022

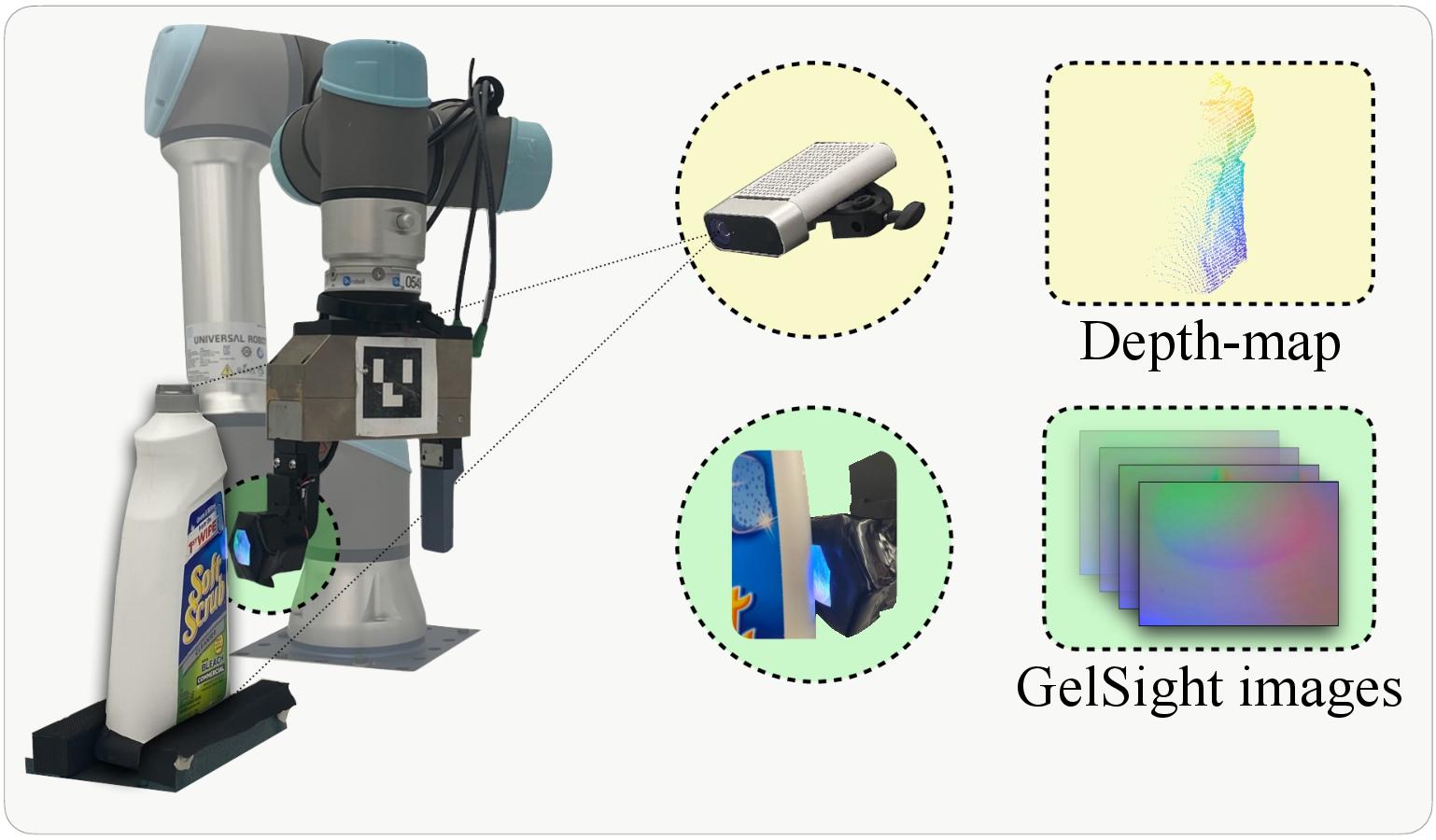

Knowledge of 3-D object shape is important for robot manipulation, but may not be readily available in unstructured environments. We propose a framework that incrementally reconstructs tabletop 3-D objects from a sequence of tactile images and a noisy depth-map. Our contributions include: (i) recovering local shape from GelSight images, learned via tactile simulation, and (ii) incremental shape mapping through inference on our Gaussian process spatial graph (GP-SG). We demonstrate visuo-tactile mapping on both our simulated and real-world datasets.

We train a GelSight-to-depth model with Taxim, a tactile simulator that mimics intensity distributions from the real sensor. Simulation allows us to scale supervised learning to a wider range of objects and ground-truth data.

A Gaussian process (GP) is a nonparametric method for learning a continuous function from data, and is well-suited to modeling spatial phenomena. We represent the scene as a spatial factor graph, comprising nodes we optimize for and factors that constrain them. Our optimization goal is to recover the posterior, which represents the signed distance function (SDF) and its underlying uncertainty. Implementing the full GP in the graph is costly, as each measurement constrains all query nodes. Motivated by prior work in spatial partitioning, we decompose the GP into local unary factors as a sparse approximation.
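To make the cost argument concrete, the following is a minimal NumPy sketch, not the GP-SG factor graph itself. It contrasts a full GP posterior, where every measurement influences every query point, with a simplified stand-in for the local approximation, where each query point only uses measurements within a radius. The squared-exponential kernel, the constants, the positive prior mean, and the function names are all assumptions made for illustration.

```python
import numpy as np

# All constants below are illustrative assumptions, not values from the paper.
LENGTH_SCALE = 0.5  # kernel length scale
PRIOR_VAR = 1.0     # prior variance of the SDF
PRIOR_MEAN = 1.0    # prior SDF mean (positive, i.e. "outside" by default)
NOISE_STD = 0.01    # measurement noise standard deviation


def rbf_kernel(a, b):
    """Squared-exponential kernel between point sets a (n, d) and b (m, d)."""
    sq_dist = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return PRIOR_VAR * np.exp(-0.5 * sq_dist / LENGTH_SCALE**2)


def gp_posterior(x_meas, y_meas, x_query):
    """Full GP: every measurement constrains every query point."""
    k_mm = rbf_kernel(x_meas, x_meas) + NOISE_STD**2 * np.eye(len(x_meas))
    k_qm = rbf_kernel(x_query, x_meas)
    mean = PRIOR_MEAN + k_qm @ np.linalg.solve(k_mm, y_meas - PRIOR_MEAN)
    var = PRIOR_VAR - np.sum(k_qm * np.linalg.solve(k_mm, k_qm.T).T, axis=1)
    return mean, np.maximum(var, 0.0)


def local_gp_posterior(x_meas, y_meas, x_query, radius=1.0):
    """Local stand-in: each query only sees measurements within `radius`."""
    mean = np.full(len(x_query), PRIOR_MEAN)
    var = np.full(len(x_query), PRIOR_VAR)
    for i, q in enumerate(x_query):
        near = np.linalg.norm(x_meas - q, axis=1) <= radius
        if near.any():
            m, v = gp_posterior(x_meas[near], y_meas[near], q[None, :])
            mean[i], var[i] = m[0], v[0]
    return mean, var


# Surface measurements (SDF = 0) on a unit circle, as a 2-D toy example.
theta = np.linspace(0.0, 2.0 * np.pi, 40, endpoint=False)
surface = np.stack([np.cos(theta), np.sin(theta)], axis=1)
sdf = np.zeros(len(surface))

# One query on the surface, one far away from every measurement.
queries = np.array([[1.0, 0.0], [10.0, 10.0]])
full_mean, full_var = gp_posterior(surface, sdf, queries)
local_mean, local_var = local_gp_posterior(surface, sdf, queries)
```

In this toy setup the query on the surface gets a posterior mean near zero with low variance, while the distant query keeps the prior mean and variance. The local version solves a small linear system per query instead of one system over all measurements, which is the intuition behind trading exactness for sparsity.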

A 2-D illustration of our GP spatial graph (GP-SG), an efficient local approximation to a full GP. Each surface measurement produces a unary factor at every query node within its local radius. Each factor represents a local Gaussian potential for the GP implicit surface. The optimization yields the posterior SDF mean and uncertainty. The zero-level set of the SDF gives us the implicit surface.

We generate 60 GelSight-object interactions, uniformly spread across each object in our YCBSight-Sim dataset. We render a depth-map from the perspective of an overlooking camera using Pyrender. Finally, zero-mean Gaussian noise is added to the tactile point-clouds, sensor poses, and depth-map.
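The noise-injection step can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the noise magnitudes, the 4x4 homogeneous pose representation, the right-multiplied rotation perturbation, and the function names are all hypothetical, since the text only says that the noise is zero-mean Gaussian.

```python
import numpy as np


def rotvec_to_matrix(rotvec):
    """Rodrigues' formula: axis-angle vector (3,) to rotation matrix (3, 3)."""
    angle = np.linalg.norm(rotvec)
    if angle < 1e-12:
        return np.eye(3)
    x, y, z = rotvec / angle
    skew = np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])
    return np.eye(3) + np.sin(angle) * skew + (1.0 - np.cos(angle)) * (skew @ skew)


def add_point_noise(points, sigma, rng):
    """Add zero-mean Gaussian noise to an (n, 3) point-cloud or a depth-map."""
    return points + rng.normal(0.0, sigma, size=points.shape)


def add_pose_noise(pose, sigma_trans, sigma_rot, rng):
    """Perturb a 4x4 pose with Gaussian translation and rotation-vector noise."""
    noisy = pose.copy()
    noisy[:3, :3] = pose[:3, :3] @ rotvec_to_matrix(rng.normal(0.0, sigma_rot, size=3))
    noisy[:3, 3] = pose[:3, 3] + rng.normal(0.0, sigma_trans, size=3)
    return noisy


rng = np.random.default_rng(0)
cloud = np.zeros((1000, 3))  # placeholder tactile point-cloud
noisy_cloud = add_point_noise(cloud, sigma=1e-3, rng=rng)  # assumed 1 mm noise
noisy_pose = add_pose_noise(np.eye(4), sigma_trans=1e-3, sigma_rot=0.01, rng=rng)
```

Sampling the rotation noise as a small axis-angle vector keeps the perturbed pose a valid rigid transform, which would not hold if noise were added directly to the rotation matrix entries.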

We use a UR5e 6-DoF robot arm, mounting the GelSight sensor on a WSG50 parallel gripper. The objects are secured by a mechanical bench vise at a known pose, to ensure they remain static. After capturing the depth-map, we approach each object from a discretized set of angles and heights. We detect contact events by thresholding the tactile images. We collect 40 tactile images of the object's lateral surface, along with the gripper poses via robot kinematics.
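One plausible way to threshold tactile images for contact detection is sketched below. It is an assumption rather than the procedure used here: it compares each image against a no-contact background frame and reports contact when enough pixels change. The function name and both threshold values are hypothetical.

```python
import numpy as np


def detect_contact(tactile_image, background_image,
                   pixel_threshold=15.0, area_fraction=0.01):
    """Return True if enough pixels differ from the no-contact background.

    Both thresholds are illustrative assumptions and would need tuning
    for a real sensor.
    """
    diff = np.abs(tactile_image.astype(np.float32)
                  - background_image.astype(np.float32))
    if diff.ndim == 3:
        diff = diff.max(axis=-1)  # strongest change across color channels
    changed = diff > pixel_threshold
    return bool(changed.mean() > area_fraction)


# Synthetic example: a flat background and a frame with a pressed patch.
background = np.full((64, 64, 3), 100, dtype=np.uint8)
pressed = background.copy()
pressed[20:40, 20:40] += 50  # simulated intensity change at the contact patch

no_contact = detect_contact(background, background)
in_contact = detect_contact(pressed, background)
```

Requiring a minimum changed area, rather than a single bright pixel, makes the check less sensitive to isolated sensor noise.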

The mapping results for the YCBSight-Real dataset are similar to those in simulation.

@inproceedings{Suresh22icra,
  author = {S. Suresh and Z. Si and J. Mangelson and W. Yuan and M. Kaess},
  title = {{ShapeMap 3-D}: Efficient shape mapping through dense touch and vision},
  booktitle = {Proc. IEEE Intl. Conf. on Robotics and Automation, ICRA},
  address = {Philadelphia, PA, USA},
  month = may,
  year = {2022}
}