Shawn Arseneau


Affiliation Address: Robotics Institute, Carnegie Mellon University, 5000 Forbes Avenue, Pittsburgh, PA, USA, 15213

Research Interests

My research topics of choice are image analysis and segmentation, with a focus on real-world applications. In particular, I’m interested in occlusion detection and classification to allow for person-tracking from video sequences. This research is also pertinent to applications where gradient-based contours form key features in the image. For example, my most recent work represents key image features that aid in fingerprint analysis and defect detection. The theories and algorithms also apply to higher-dimensional data, such as 4D medical images (x, y, z, t), and to defining decision boundaries in data mining. I am also interested in artificial intelligence, augmented reality and cutting-edge human-computer interfaces.

I am currently working on several projects including:

- Accelerating Computer Vision Algorithms using the Graphics Processing Unit (GPU) for

* Feature Detection/Selection/Correspondence/Tracking

* Motion Estimation

* Object Recognition

* Feature Description

- Self Localization of Unmanned Aerial Vehicles

Projects - Archive

(Ph.D. thesis)

A junction is defined as the point where two or more contours meet, such as at the point of intersection between overlapping lines. Asymmetric junctions arise from the merging of an odd number of contour end-points. In computer vision, where contours are created from gradient information, junctions play a prominent role. For example, they can be used as salient features for object classification algorithms or to improve edge detection.

Current junction analysis methods include convolution, which applies a mask over a sub-region of the image, and diffusion, which propagates gradient information from point to point based on a set of rules.

A novel method is proposed, which results in an improved approximation of the underlying contours. The method combines the ability to represent asymmetric junctions, as do a number of convolution methods, with the robustness of local support obtained from diffusion schemes. This is achieved by a two-stage process that first transforms the gradient information into a voting field, followed by an iterative update of junction estimates. Several design choices were evaluated with respect to their reinforcement of asymmetry. The proposed approach proved superior to existing techniques in representing asymmetric junctions over a wide range of scenarios.

An Asymmetrical Diffusion Framework for Junction Analysis

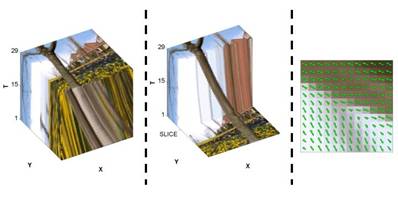

The diffusion framework exhibits many promising properties for the purposes of junction analysis. In its most common form, images are diffused either isotropically or anisotropically with respect to gradient information. This information is then collected into an orientational distribution function (ODF) and the resulting features are modeled as 'X', 'Y' or 'T'-shaped junctions. Specific to the spatio-temporal domain, and in particular a 2D spatio-temporal slice, points of kinetic-based occlusion are identified by T-junctions while points of kinetic transparency form X-junctions. The challenge is that most forms of diffusion are symmetric in their representation and are unable to properly distinguish between these two junction types. This work proposes to diffuse information asymmetrically and investigates the differences between weighting the iterative diffusion isotropically and weighting it with an ODF-shaped region-of-influence function.
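The ambiguity between T- and X-junctions under a symmetric representation can be illustrated with a toy orientation histogram. This is only a sketch under stated assumptions, not the diffusion scheme from the paper: the function names `odf` and `occupied_bins`, the 45-degree bin width, and the idealized branch angles are all hypothetical choices made for illustration.

```python
def odf(branch_angles_deg, bins=8, symmetric=False):
    """Toy orientational distribution: one vote per junction branch.

    With symmetric=True each branch also votes for the opposite direction,
    mimicking a representation that cannot tell theta from theta + 180.
    """
    hist = [0] * bins
    for angle in branch_angles_deg:
        votes = [angle, angle + 180] if symmetric else [angle]
        for vote in votes:
            # Integer arithmetic keeps bin edges exact for whole-degree angles.
            hist[(vote % 360) * bins // 360] += 1
    return hist


def occupied_bins(hist):
    """Indices of the histogram bins that received at least one vote."""
    return [i for i, count in enumerate(hist) if count > 0]


# Idealized branch directions, in degrees, measured from the junction point.
T_JUNCTION = [0, 90, 180]        # a straight contour plus one stem
X_JUNCTION = [0, 90, 180, 270]   # two crossing contours
```

With the asymmetric histogram, the T-junction occupies three bins and the X-junction four, so the two can be told apart by their directions alone. With `symmetric=True`, both occupy the same four bins and only the vote counts differ.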

An Improved Representation of Junctions through Asymmetric Tensor Diffusion

S. Arseneau and J. Cooperstock

International Symposium on Visual Computing, November 2006.

(accepted, to appear)

An Asymmetrical Diffusion Framework for Junction Analysis

S. Arseneau and J. Cooperstock

British Machine Vision Conference, (BMVC 2006), Vol.2, pp.689-698, 2006.

Asymmetrical Tensor Diffusion


Junction structures are rich with information. For example, junctions play a key role in motion segmentation through occlusion detection. They also aid in pattern recognition for fingerprint analysis and in enhancing images by preserving edges while suppressing noise, to name but a few applications.

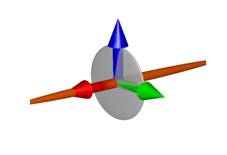

This work proposes a two-stage process that transforms the local structure tensor data into an asymmetric directional field, followed by the application of a diffusion framework that allows for multiple estimates to express the local junction information in a more representative, asymmetrical (non pi-periodic) format.

Asymmetrical Tensor Diffusion

S. Arseneau

Centre for Intelligent Machines Symposium, Montreal, Canada, May 2006.

Structure Tensors: Tutorial and Demonstration


A structure tensor is a matrix representation of partial derivative information. In image processing and computer vision, it is typically used to represent gradient or "edge" information. Through its coherence measure, it also describes local patterns more powerfully than the directional derivative. Its other advantages are detailed in the structure tensor section of this tutorial. The tutorial is designed as either an introduction or a refresher on structure tensors for those in the image processing field; however, it should also be useful to those from a more general math background who want to learn more about this matrix representation. To provide more detail than the typical tutorial, I have included the MATLAB source code both inline with the text and in a comprehensive zip file at the end of this document.
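As a rough illustration of the idea (this is not the tutorial's MATLAB code), the sketch below builds a 2D structure tensor from a window of gradient samples and derives a coherence measure from its eigenvalues. The function names and the particular coherence definition, ((l1 - l2) / (l1 + l2))^2, are assumptions; it is one commonly used form, and the tutorial may define coherence differently.

```python
import math


def structure_tensor(gradients):
    """Sum the outer products g * g^T over a window of (gx, gy) samples.

    Returns the three distinct entries (jxx, jxy, jyy) of the symmetric 2x2 tensor.
    """
    jxx = sum(gx * gx for gx, gy in gradients)
    jxy = sum(gx * gy for gx, gy in gradients)
    jyy = sum(gy * gy for gx, gy in gradients)
    return jxx, jxy, jyy


def eigenvalues(jxx, jxy, jyy):
    """Closed-form eigenvalues of a symmetric 2x2 matrix, largest first."""
    half_trace = 0.5 * (jxx + jyy)
    root = math.sqrt((0.5 * (jxx - jyy)) ** 2 + jxy ** 2)
    return half_trace + root, half_trace - root


def coherence(jxx, jxy, jyy):
    """1.0 for a single dominant orientation, 0.0 for no preferred orientation."""
    l1, l2 = eigenvalues(jxx, jxy, jyy)
    if l1 + l2 == 0:
        return 0.0  # flat window: no gradient energy at all
    return ((l1 - l2) / (l1 + l2)) ** 2


def dominant_orientation(jxx, jxy, jyy):
    """Angle (radians) of the dominant gradient direction, defined modulo pi."""
    return 0.5 * math.atan2(2 * jxy, jxx - jyy)
```

A window containing only the gradient (1, 0) gives a coherence of 1, while a window with gradients spread equally over both axes gives 0. Because each sample enters as an outer product, a gradient and its negation contribute identically, which is why the orientation is only defined modulo pi.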

Online contribution to:

Robust Estimation of Local Orientation Analysis

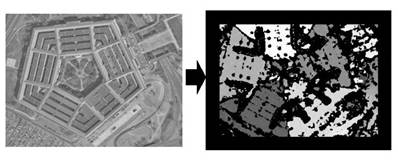


Typically, when performing local orientation analysis, the single-orientation constraint is applied. It is well known that this restriction is often violated in the presence of such phenomena as occlusion or complex textures in the spatial domain, as well as in the form of transparency or motion boundaries in the spatiotemporal domain, where multiple local motions appear within the window of interest.

From trivial edge-detection techniques to the use of Gabor filters, the status quo in orientation analysis involves some form of convolution over the entire window of interest. Although Gabor filters have gained in popularity, most likely due to their computational efficiency and their suitability as a model of the human visual system, they are not without flaws. Most prominent among these are the inherent smoothing of high-gradient regions of the input data, caused by the embedded Gaussian window, and the dependency upon the single-orientation constraint. This paper proposes a viable alternative to Gabor-type approaches that allows for the presence of multiple orientations and offers increased robustness to noise while preserving such crucial scene elements as lines and edges.
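For reference, the Gaussian window embedded in a Gabor filter is visible directly in the standard formula for its real part: a Gaussian envelope multiplied by a cosine carrier tuned to one orientation. The sketch below samples that formula on a small grid. The function name and parameter names are illustrative assumptions, and it does not reproduce any filter bank used in the paper.

```python
import math


def gabor_kernel(size, sigma, theta, wavelength, gamma=1.0, psi=0.0):
    """Real part of a 2D Gabor filter sampled on a size x size grid (size odd).

    sigma: width of the Gaussian envelope; theta: filter orientation (radians);
    wavelength: period of the cosine carrier; gamma: envelope aspect ratio;
    psi: phase offset of the carrier.
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the carrier varies along direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel
```

The `envelope` term is the source of the smoothing noted above: every response is a Gaussian-weighted average over the window. The single `theta` argument reflects the single-orientation assumption, since each kernel responds to one orientation only.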

Scale-Invariant results of CGM

Robust Estimation for Orientation Analysis

S. Arseneau and J. Cooperstock

Toronto-Montreal Vision Workshop, (TMVW), June 2004.

Real-Time Person Tracker


This project dealt with tracking a single individual (professor) in a classroom environment. It was a very challenging problem as the initial criteria were that the professor would not interact in any way with the tracker and that no restrictions were placed on the classroom itself. This implied that the tracker had to correctly initialize the professor as the target: not a student or some other anomaly such as a piece of equipment with the same colors as the clothing of the professor. Since there were no restrictions on the classroom, there was no a priori information known about the lighting conditions. To add even more challenge, the tracker made use of a pan-tilt camera, which implied a great deal of camera jitter.

The final application was based on a combination of motion and color tracking of the professor, with only minor heuristics added into the architecture. The end product became a part of the ‘Classroom 2000’ project, which recorded the professor onto a web-cast over the entire semester. The complete camera-tracking algorithm was filed as a provisional patent by the Office of Technology Transfer at McGill in 2000. The original prototype was implemented and installed in the McConnell Engineering Building, while a full version was later installed in the Department of Business at McGill University.
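The general idea of combining motion and color cues can be sketched as follows. This is a deliberately simplified illustration, not the patented tracking algorithm: the function names, the thresholds, and the use of plain frame differencing with an RGB distance test are all assumptions made for the example. Frames are small 2D lists of (r, g, b) tuples.

```python
def motion_mask(prev, curr, threshold=60):
    """True where the summed per-channel change between two frames exceeds threshold."""
    return [[sum(abs(a - b) for a, b in zip(p, c)) > threshold
             for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev, curr)]


def color_mask(frame, target, tolerance=60):
    """True where a pixel is within tolerance (summed channel distance) of target."""
    return [[sum(abs(a - b) for a, b in zip(pixel, target)) <= tolerance
             for pixel in row]
            for row in frame]


def track(prev, curr, target):
    """Centroid (row, col) of pixels that both moved and match the target color.

    Returns None when no pixel satisfies both cues.
    """
    moving = motion_mask(prev, curr)
    matching = color_mask(curr, target)
    hits = [(r, c)
            for r, row in enumerate(curr)
            for c in range(len(row))
            if moving[r][c] and matching[r][c]]
    if not hits:
        return None
    return (sum(r for r, _ in hits) / len(hits),
            sum(c for _, c in hits) / len(hits))
```

Requiring both cues is what lets a static object with the same colors as the target be ignored: it passes the color test but fails the motion test.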

Combined Sony-Skin Tracker and Arseneau Person Tracker Movie (Test #1)

Combined Sony-Skin Tracker and Arseneau Person Tracker Movie (Test #2)

Professor Tracking Results Movie

Automated Feature Registration for Robust Tracking Methods

S. Arseneau and J. Cooperstock

International Conference on Pattern Recognition, (ICPR), Vol.2, pp.1078-1081, 2002.

Presenter Tracking in a Classroom Environment

S. Arseneau and J. Cooperstock

IEEE Industrial Electronics Conference, (IECON), Vol.1, pp.145-148, November 1999.

Real-Time Image Segmentation for Action Recognition

S. Arseneau and J. Cooperstock

IEEE Pacific Rim Conference, (PACRIM), pp.86-89, August 1999.

Gesture Recognition for a Shared Reality Environment


A gesture recognition algorithm was designed, developed and implemented that made use of three cameras capturing the top, front and side views. The goal was to allow a user to enter a shared reality environment where the position and orientation of the index finger would dictate the position of the 3D cursor. The challenge was that it had to run in real-time and apply no restrictions to the user. This implied that the user would not have to restrict the color of their clothes (which is normally a requirement of blue-screen or chroma-keying technology), nor would the user be restricted as to their relative placement in the environment.

Gesture Recognition for QUAKE Movie

Telepresence with No Strings Attached: An Architecture for a Shared Reality Environment

C. Cote, S. Arseneau and J. Cooperstock

International Symposium on Mixed Reality, March 2001.

Automated Camera Tracking in a Real-World Environment

S. Arseneau and J. Cooperstock

Graphical Interfaces, (GI 2000), Montreal, Canada, May 2000.

International RoboCup Competition


Participated in a team software competition at the International Joint Conference on Artificial Intelligence (IJCAI) in Stockholm, Sweden. The objective was to program three Sony Aibo dogs to cooperate and score a goal in a soccer match. I contributed to the path-planning behavior as well as the vision system.

Robotic Mimicking Control System

As a part of my engineering thesis work at the Royal Military College and in conjunction with the Canadian Space Agency, this work aimed to tele-operate a robotic arm using a novel vision-based approach.

Minimal Spanning Tree


Unsupervised learning tutorial written for the partial fulfillment of the requirements for course Pattern Recognition [308-644B] by Shawn Arseneau and Rene van Wijhe.
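As a small illustration of how a minimal spanning tree can serve unsupervised learning, the sketch below builds the tree with Prim's algorithm and then removes the longest edges to split the points into clusters. It is a generic textbook approach under assumed names (`minimum_spanning_tree`, `mst_clusters`) and is not taken from the tutorial itself.

```python
import math


def minimum_spanning_tree(points):
    """Prim's algorithm on the complete Euclidean graph over the points.

    Returns a list of (length, u, v) edges; O(n^2), adequate for small inputs.
    """
    n = len(points)
    in_tree = [False] * n
    best = [math.inf] * n   # cheapest known connection of each point to the tree
    parent = [-1] * n
    best[0] = 0.0
    edges = []
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((best[u], parent[u], u))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best[v]:
                    best[v], parent[v] = d, u
    return edges


def mst_clusters(points, k=2):
    """Split points into k clusters by dropping the k - 1 longest tree edges."""
    edges = sorted(minimum_spanning_tree(points))
    kept = edges[:len(points) - k]
    label = list(range(len(points)))   # union-find forest over point indices

    def find(i):
        while label[i] != i:
            label[i] = label[label[i]]  # path halving
            i = label[i]
        return i

    for _, u, v in kept:
        label[find(u)] = find(v)
    groups = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())
```

For example, `mst_clusters([(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)], k=2)` separates the three points near the origin from the two points near (10, 10), because the single long edge bridging the two groups is the one removed.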

[Unsupervised learning tutorial]

Inter-Layer Learning towards Emergent Cooperative Behavior

As applications for artificially intelligent agents increase in complexity we can no longer rely on clever heuristics and hand-tuned behaviors to develop their programming. Even the interaction between various components cannot be reduced to simple rules, as the complexities of realistic dynamic environments become unwieldy to characterize manually. To cope with these challenges, we propose an architecture for inter-layer learning where each layer is constructed with a higher level of complexity and control. Using RoboCup soccer as a testbed, we demonstrate the potential of this architecture for the development of effective, cooperative, multi-agent systems. At the lowest layer, individual basic skills are developed and refined in isolation through supervised and reinforcement learning techniques. The next layer uses machine learning to decide, at any point in time, which among a subset of the first layer tasks should be executed. This process is repeated for successive layers, thus providing higher levels of abstraction as new layers are added. The inter-layer learning architecture provides an explicit learning model for deciding individual and cooperative tactics in a dynamic environment and appears to be promising in real-time competition.
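The layering described above can be pictured with a toy second-layer selector that learns which first-layer skill to run in each state. Everything here is a hypothetical stand-in: the skill names, the states, the `toy_reward` table, and the running-average value estimate are illustrative assumptions, not the learning methods or the RoboCup setup used in the paper.

```python
class SkillSelector:
    """Second-layer learner: picks one first-layer skill for the current state."""

    def __init__(self, skills):
        self.skills = list(skills)
        self.totals = {}  # (state, skill) -> [reward_sum, trial_count]

    def update(self, state, skill, reward):
        """Record the reward observed after running a skill in a state."""
        entry = self.totals.setdefault((state, skill), [0.0, 0])
        entry[0] += reward
        entry[1] += 1

    def value(self, state, skill):
        """Average reward seen so far; 0.0 for untried (state, skill) pairs."""
        total, count = self.totals.get((state, skill), (0.0, 0))
        return total / count if count else 0.0

    def choose(self, state):
        """Greedy choice of the skill with the highest average reward."""
        return max(self.skills, key=lambda skill: self.value(state, skill))


def toy_reward(state, skill):
    """Hypothetical reward table standing in for a soccer simulator."""
    table = {("near_goal", "shoot"): 1.0, ("near_goal", "pass"): 0.3,
             ("far_from_goal", "pass"): 0.8, ("far_from_goal", "shoot"): 0.1}
    return table.get((state, skill), 0.0)


def train(selector, states, reward_fn, rounds=3):
    """Try every skill in every state a fixed number of times (no exploration policy)."""
    for _ in range(rounds):
        for state in states:
            for skill in selector.skills:
                selector.update(state, skill, reward_fn(state, skill))
```

A third layer could be built the same way by treating several trained selectors as its "skills", which is the sense in which each added layer raises the level of abstraction.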

Inter-Layer Learning Towards Emergent Cooperative Behavior

S. Arseneau, W. Sun, C. Zhao and J. Cooperstock

American Association for Artificial Intelligence, (AAAI), pp.3-8, July 2000.