Chaotic Invariants for Human Action Recognition

Related Publication: Saad Ali, Arslan Basharat and Mubarak Shah, Chaotic Invariants for Human Action Recognition, IEEE International Conference on Computer Vision (ICCV), Rio de Janeiro, Brazil, October 14-20, 2007.


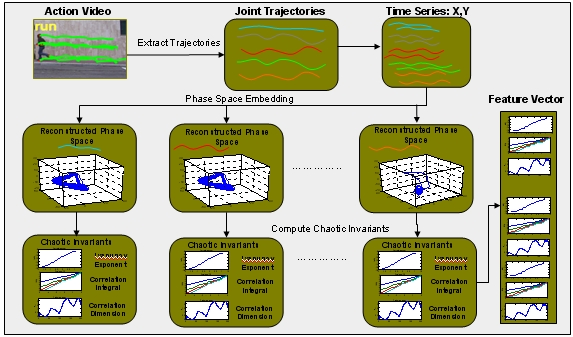

Introduction

The aim of this project is to derive a representation of the dynamical system that generates human actions directly from experimental data. To this end, we propose a computational framework that uses concepts from the theory of chaotic systems to model and analyze the nonlinear dynamics of human actions. The trajectories of human body joints serve as the input representation of an action.

Our contributions include: 1) an investigation of the appropriateness of the theory of chaotic systems for human action modelling and recognition; 2) a new set of features to characterize the nonlinear dynamics of human actions; and 3) experimental validation of the feasibility and potential merits of carrying out action recognition using methods from the theory of chaotic systems.


Algorithmic Steps

This section describes the algorithmic steps of the proposed action recognition framework:

i) Given a video of an exemplar action, obtain trajectories of reference body joints, and split each trajectory into univariate time series by considering each data dimension separately.

ii) Obtain the chaotic structure of each time series by embedding it in a phase space of appropriate dimension, using the mutual information and false nearest neighbors algorithms.

iii) Apply a determinism test to verify the existence of deterministic structure in the reconstructed phase space.

iv) Represent the dynamical and metric structure of the reconstructed phase space in terms of phase space invariants.

v) Generate a global feature vector for the exemplar action by pooling the invariants from all time series, and use it in a classification algorithm.

Each step is described in more detail in the following subsections.

(a) Block diagram of the proposed action recognition algorithm.


i) Trajectory Computation

Trajectories of six body joints (two hands, two feet, head, and belly) are used to represent an action. The trajectories are normalized with respect to the belly point, resulting in five trajectories per action. For the motion capture data set, each point of a trajectory is represented by a three-dimensional coordinate (x, y, z). For the videos, we used a semi-supervised joint detection and tracking approach to generate these trajectories. First, we extracted the body skeletons and their endpoints by applying morphological operations to the foreground silhouettes of the actor. An initial set of trajectories was then generated by joining the extracted joint locations using spatial and motion similarity constraints. Broken trajectories and wrong associations were corrected manually.
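As a minimal sketch of the normalization step, assume that "normalized with respect to the belly point" means subtracting the belly coordinates from each of the other joints, frame by frame. The page does not spell out the operation, so this reading is an assumption. The joint names, the dictionary layout, and the function `normalize_to_belly` are hypothetical and chosen only for illustration.

```python
import numpy as np

def normalize_to_belly(joints, belly_key="belly"):
    """Express every joint trajectory relative to the belly trajectory.

    joints: dict mapping a joint name to an array of shape (T, D),
            where T is the number of frames and D the coordinate dimension.
    Returns a new dict without the belly entry (assumed interpretation).
    """
    belly = np.asarray(joints[belly_key], dtype=float)
    return {
        name: np.asarray(traj, dtype=float) - belly
        for name, traj in joints.items()
        if name != belly_key
    }

# Synthetic stand-in for tracked joints: six joints, 50 frames, 3-D coordinates.
names = ["left_hand", "right_hand", "left_foot", "right_foot", "head", "belly"]
rng = np.random.default_rng(0)
joints = {name: rng.normal(size=(50, 3)) for name in names}

relative = normalize_to_belly(joints)
print(sorted(relative))  # the five remaining joint names
```

Subtracting the belly point removes the global translation of the actor, which is one plausible reason six tracked joints yield five trajectories.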


Trajectories for the ballet action from the motion capture data set.

Trajectories for the walk action from the video data set.


Next, each dimension of the trajectory is treated as a separate univariate time series. The next figure shows these univariate time series for the walk action from the motion capture data set.
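The splitting of trajectories into univariate time series can be sketched as follows. The dictionary layout and the function name `to_univariate_series` are assumptions made for illustration, and the data are synthetic stand-ins for the five belly-relative trajectories.

```python
import numpy as np

def to_univariate_series(trajectories):
    """Split each (T, D) trajectory into D separate 1-D time series.

    Returns a dict keyed by (joint_name, dimension_index).
    """
    series = {}
    for name, traj in trajectories.items():
        traj = np.asarray(traj, dtype=float)
        for d in range(traj.shape[1]):
            series[(name, d)] = traj[:, d]
    return series

# Synthetic stand-in: five relative trajectories with 3-D coordinates.
rng = np.random.default_rng(1)
relative = {
    name: rng.normal(size=(100, 3))
    for name in ["left_hand", "right_hand", "left_foot", "right_foot", "head"]
}

series = to_univariate_series(relative)
print(len(series))  # 15 for five 3-D trajectories
```

Each of these series is then embedded and analyzed independently in the steps that follow.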


ii) Phase Space Embedding

Embedding is a mapping from a one-dimensional space to an m-dimensional space. It is an important part of the study of chaotic systems, as it allows one to study systems for which the state space variables and the governing differential equations are unknown. The underlying idea of embedding is that all the variables of a dynamical system influence one another. Thus, every subsequent point of the given one-dimensional time series results from an intricate combination of the influences of all the true state variables of the system. This observation allows us to introduce a series of substitute variables to obtain the whole m-dimensional phase space, where the substitute variables carry the same information as the original variables of the system. This is pictorially described in the following figure:

The optimal values of the time delay tau and the embedding dimension m are computed using the mutual information and false nearest neighbor algorithms, respectively. The details of these algorithms are available in our ICCV 2007 publication.
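To make the embedding concrete, the sketch below builds delay vectors of the standard form [x(t), x(t + tau), ..., x(t + (m - 1) tau)] and picks tau as the first local minimum of a histogram-based mutual information estimate. This is an illustrative sketch under stated assumptions, not the implementation used in the project. The function names, the bin count, and the maximum lag are hypothetical choices, and the false nearest neighbor search for m is not implemented here, so m is fixed by hand.

```python
import numpy as np

def delay_embed(x, m, tau):
    """Return the (N, m) matrix of delay vectors of the 1-D series x."""
    x = np.asarray(x, dtype=float)
    n = len(x) - (m - 1) * tau
    if n <= 0:
        raise ValueError("time series too short for this m and tau")
    return np.column_stack([x[i * tau : i * tau + n] for i in range(m)])

def mutual_information(x, lag, bins=16):
    """Histogram estimate of the mutual information between x(t) and x(t + lag)."""
    x = np.asarray(x, dtype=float)
    joint, _, _ = np.histogram2d(x[:-lag], x[lag:], bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of x(t)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of x(t + lag)
    nz = pxy > 0                          # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

def first_minimum_lag(x, max_lag=50, bins=16):
    """Return the lag at the first local minimum of the mutual information."""
    mi = [mutual_information(x, lag, bins) for lag in range(1, max_lag + 1)]
    for i in range(1, len(mi) - 1):
        if mi[i] < mi[i - 1] and mi[i] <= mi[i + 1]:
            return i + 1                  # list index i corresponds to lag i + 1
    return int(np.argmin(mi)) + 1         # fallback: global minimum

# Synthetic series standing in for one coordinate of a joint trajectory.
x = np.sin(0.1 * np.arange(1000))
tau = first_minimum_lag(x)
embedded = delay_embed(x, m=3, tau=tau)   # m = 3 chosen by hand for this sketch
print(tau, embedded.shape)
```

Each row of `embedded` is one point of the reconstructed phase space, so plotting the three columns against each other gives a three-dimensional view of the reconstructed trajectory.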


Three-dimensional visualization of reconstructed phase spaces of different trajectories.


Downloads

Related Links

The following list includes links to books, websites, and Matlab toolboxes that we benefited from during the course of the project.
