NeuLab Presentations at EMNLP 2018

NeuLab members have ten main conference paper presentations, three workshop papers, and one invited talk at EMNLP 2018! Come check them out if you’re in Brussels for the conference.

Main Conference Papers

MTNT: A Testbed for Machine Translation of Noisy Text

- Authors: Paul Michel, Graham Neubig.

- Time: Friday November 2, 11:00–12:30. Grand Hall.

Noisy or non-standard input text can cause disastrous mistranslations in most modern Machine Translation (MT) systems, and there has been growing research interest in creating noise-robust MT systems. However, as of yet there are no publicly available parallel corpora with naturally occurring noisy inputs and translations, and thus previous work has resorted to evaluating on synthetically created datasets. In this paper, we propose a benchmark dataset for Machine Translation of Noisy Text (MTNT), consisting of noisy comments on Reddit (www.reddit.com) and professionally sourced translations. We commissioned translations of English comments into French and Japanese, as well as French and Japanese comments into English, on the order of 7k-37k sentences per language pair. We qualitatively and quantitatively examine the types of noise included in this dataset, then demonstrate that existing MT models fail badly on a number of noise-related phenomena, even after performing adaptation on a small training set of in-domain data. This indicates that this dataset can provide an attractive testbed for methods tailored to handling noisy text in MT. Project Website

Neural Cross-lingual Named Entity Recognition with Minimal Resources

- Authors: Jiateng Xie, Zhilin Yang, Graham Neubig, Noah A. Smith, Jaime Carbonell.

- Time: Friday November 2, 11:00–12:30. Grand Hall.

For languages with no annotated resources, unsupervised transfer of natural language processing models such as named-entity recognition (NER) from resource-rich languages would be an appealing capability. However, differences in words and word order across languages make it a challenging problem. To improve mapping of lexical items across languages, we propose a method that finds translations based on bilingual word embeddings. To improve robustness to word order differences, we propose to use self-attention, which allows for a degree of flexibility with respect to word order. We demonstrate that these methods achieve state-of-the-art or competitive NER performance on commonly tested languages under a cross-lingual setting, with much lower resource requirements than past approaches. We also evaluate the challenges of applying these methods to Uyghur, a low-resource language. Code is available here.
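To make the embedding-based translation idea concrete, here is a minimal sketch of word-by-word translation via nearest-neighbor search. It assumes the source and target embeddings already live in a shared space, and the toy vectors, function names, and the choice to keep out-of-vocabulary words unchanged are illustrative assumptions rather than the paper's implementation.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def translate_word(word, src_emb, tgt_emb):
    """Return the target word whose embedding is closest to the source word's."""
    query = src_emb[word]
    return max(tgt_emb, key=lambda t: cosine(query, tgt_emb[t]))

def translate_ner_data(tagged_tokens, src_emb, tgt_emb):
    """Translate tokens one by one and keep their NER tags.

    Words without a source embedding are copied unchanged (an assumption
    made for this sketch).
    """
    return [
        (translate_word(w, src_emb, tgt_emb) if w in src_emb else w, tag)
        for w, tag in tagged_tokens
    ]

# Toy bilingual embeddings, assumed to be aligned in one space already.
src_emb = {"city": [1.0, 0.1], "lake": [0.1, 1.0]}
tgt_emb = {"ciudad": [0.9, 0.2], "lago": [0.2, 0.9]}

print(translate_ner_data([("city", "B-LOC"), ("Paris", "B-LOC")], src_emb, tgt_emb))
```

Because the tags are carried over token by token, the translated data can be used to train a tagger for the target language without any target-side annotation.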

Contextual Parameter Generation for Universal Neural Machine Translation

- Authors: Emmanouil Antonios Platanios, Mrinmaya Sachan, Graham Neubig, Tom Mitchell.

- Time: Friday November 2, 11:00–12:30. Grand Hall.

We propose a simple modification to existing neural machine translation (NMT) models that enables using a single universal model to translate between multiple languages while allowing for language specific parameterization, and that can also be used for domain adaptation. Our approach requires no changes to the model architecture of a standard NMT system, but instead introduces a new component, the contextual parameter generator (CPG), that generates the parameters of the system (e.g., weights in a neural network). This parameter generator accepts source and target language embeddings as input, and generates the parameters for the encoder and the decoder, respectively. The rest of the model remains unchanged and is shared across all languages. We show how this simple modification enables the system to use monolingual data for training and also perform zero-shot translation. We further show it is able to surpass state-of-the-art performance for both the IWSLT-15 and IWSLT-17 datasets and that the learned language embeddings are able to uncover interesting relationships between languages. Code is available here.
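As a rough illustration of the parameter generator, the sketch below maps a language embedding to a flat parameter vector with a single linear map. The linear form, the toy sizes, and all names (`generate_parameters`, `W_enc`, `W_dec`, `parameters_for_pair`) are assumptions made for this example and are not taken from the released code.

```python
import random

def generate_parameters(lang_embedding, generator_matrix):
    """Linear generator (assumed form): theta = W @ l, returned as a flat list."""
    return [
        sum(w * x for w, x in zip(row, lang_embedding))
        for row in generator_matrix
    ]

rng = random.Random(0)
EMBED_DIM, NUM_PARAMS = 4, 6  # toy sizes; real models have far more parameters

# One learned embedding per language (random here, for illustration only).
lang_embeddings = {
    lang: [rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)]
    for lang in ("en", "fr", "ja")
}

# Separate generators for the encoder and decoder, shared across all languages.
W_enc = [[rng.gauss(0.0, 0.1) for _ in range(EMBED_DIM)] for _ in range(NUM_PARAMS)]
W_dec = [[rng.gauss(0.0, 0.1) for _ in range(EMBED_DIM)] for _ in range(NUM_PARAMS)]

def parameters_for_pair(src_lang, tgt_lang):
    """Encoder parameters depend on the source language, decoder on the target."""
    enc = generate_parameters(lang_embeddings[src_lang], W_enc)
    dec = generate_parameters(lang_embeddings[tgt_lang], W_dec)
    return enc, dec

enc_params, dec_params = parameters_for_pair("en", "fr")
print(len(enc_params), len(dec_params))
```

Since the encoder parameters depend only on the source language and the decoder parameters only on the target language, any source/target combination can be assembled, including pairs never seen together in training.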

SwitchOut: an Efficient Data Augmentation Algorithm for Neural Machine Translation

- Authors: Xinyi Wang, Hieu Pham, Zihang Dai, Graham Neubig.

- Time: Friday November 2, 15:00–15:12. Gold Hall.

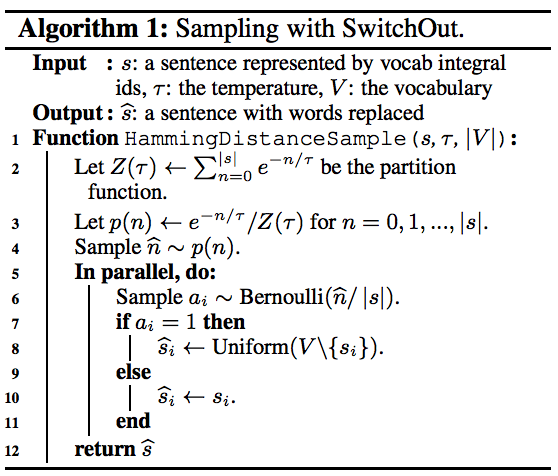

In this work, we examine methods for data augmentation for text-based tasks such as neural machine translation (NMT). We formulate the design of a data augmentation policy with desirable properties as an optimization problem, and derive a generic analytic solution. This solution not only subsumes some existing augmentation schemes, but also leads to an extremely simple data augmentation strategy for NMT: randomly replacing words in both the source sentence and the target sentence with other random words from their corresponding vocabularies. We name this method SwitchOut. Experiments on three translation datasets of different scales show that SwitchOut yields consistent improvements of about 0.5 BLEU, achieving better or comparable performances to strong alternatives such as word dropout (Sennrich et al., 2016a). Code to implement this method is included in the appendix.
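The core replacement step is simple enough to sketch in a few lines. The version below is a simplification: it replaces each token independently with a fixed probability, whereas the paper derives the sampling distribution analytically. The function name, the `replace_prob` parameter, and the toy vocabularies are assumptions for illustration.

```python
import random

def switchout_simplified(tokens, vocab, replace_prob, rng):
    """Replace each token, independently, with a uniformly sampled vocabulary word.

    Simplified sketch: a fixed per-token probability stands in for the
    sampling scheme derived in the paper.
    """
    out = []
    for tok in tokens:
        if rng.random() < replace_prob:
            out.append(rng.choice(vocab))
        else:
            out.append(tok)
    return out

rng = random.Random(0)
src_vocab = ["the", "cat", "dog", "sat", "ran", "mat"]
tgt_vocab = ["le", "chat", "chien", "assis", "couru", "tapis"]
src = ["the", "cat", "sat"]
tgt = ["le", "chat", "assis"]

# Augment both sides of the sentence pair, each from its own vocabulary.
aug_src = switchout_simplified(src, src_vocab, 0.2, rng)
aug_tgt = switchout_simplified(tgt, tgt_vocab, 0.2, rng)
print(aug_src, aug_tgt)
```

Note that the replacements on the source and target sides are sampled independently, so the augmented pair is intentionally noisy rather than a consistent translation.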

Retrieval-Based Neural Code Generation

- Authors: Shirley Anugrah Hayati, Raphael Olivier, Pravalika Avvaru, Pengcheng Yin, Anthony Tomasic, Graham Neubig.

- Time: Friday November 2, 15:12–15:24. Silver Hall/Panoramic Hall.

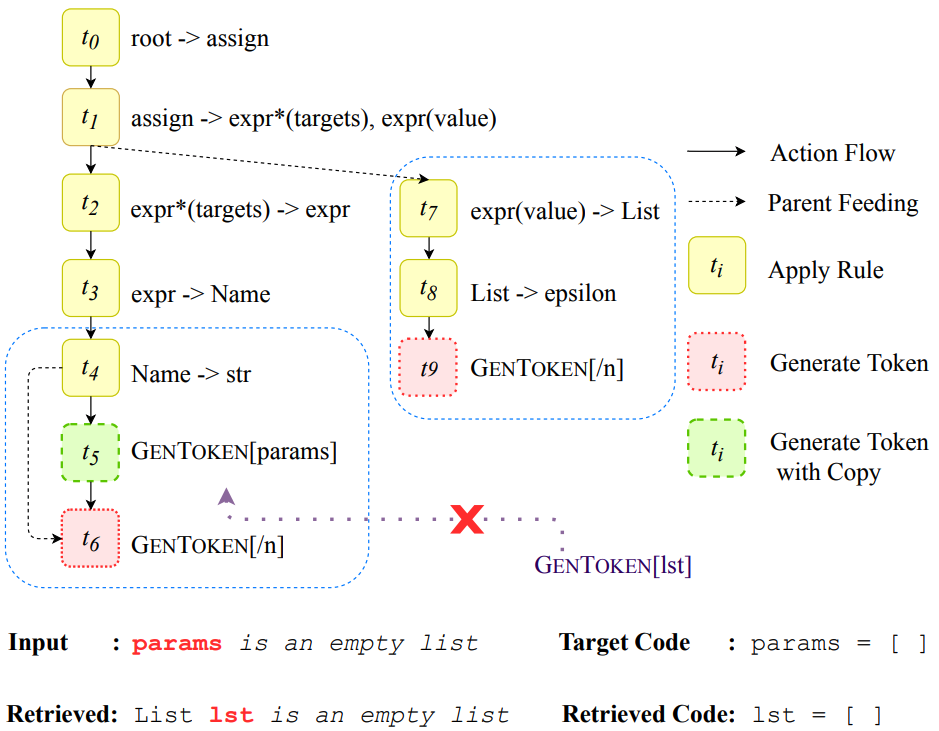

In models to generate program source code from natural language, representing this code in a tree structure has been a common approach. However, existing methods often fail to generate complex code correctly due to a lack of ability to memorize large and complex structures. We introduce ReCode, a method based on subtree retrieval that makes it possible to explicitly reference existing code examples within a neural code generation model. First, we retrieve sentences that are similar to input sentences using a dynamic-programming-based sentence similarity scoring method. Next, we extract n-grams of action sequences that build the associated abstract syntax tree. Finally, we increase the probability of actions that cause the retrieved n-gram action subtree to be in the predicted code. We show that our approach improves the performance on two code generation tasks by up to +2.6 BLEU. Code is available here.
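The last two steps (extracting action n-grams and boosting matching actions) can be sketched as follows. This toy version works on flat action sequences and adds a constant bonus to the log-probability of any action that would complete a retrieved n-gram. The action names, the `bonus` value, and both function names are hypothetical, and the sentence-retrieval step is omitted.

```python
import math

def action_ngrams(actions, n):
    """All contiguous n-grams of an action sequence."""
    return [tuple(actions[i:i + n]) for i in range(len(actions) - n + 1)]

def boost_log_probs(log_probs, history, retrieved_ngrams, bonus):
    """Add `bonus` to each candidate action that would complete a retrieved n-gram.

    `history` is the list of actions decoded so far. The returned scores are
    no longer normalized; they are meant for ranking candidates only.
    """
    boosted = dict(log_probs)
    for ngram in set(retrieved_ngrams):
        prefix, last = ngram[:-1], ngram[-1]
        recent = tuple(history[len(history) - len(prefix):])
        if last in boosted and recent == prefix:
            boosted[last] += bonus
    return boosted

# Hypothetical action sequence from a retrieved, similar training example.
retrieved_actions = ["Call", "Name", "Str", "Reduce"]
ngrams = action_ngrams(retrieved_actions, 3)

history = ["Call", "Name"]  # actions predicted so far
log_probs = {"Str": math.log(0.3), "Num": math.log(0.7)}
boosted = boost_log_probs(log_probs, history, ngrams, bonus=1.0)
print(max(boosted, key=boosted.get))
```

In this toy case the retrieved example tips the decision toward `Str`, which the unboosted model would have ranked second.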

Rapid Adaptation of Neural Machine Translation to New Languages

- Authors: Graham Neubig, Junjie Hu.

- Time: Friday November 2, 15:36–15:48. Gold Hall.

This paper examines the problem of adapting neural machine translation systems to new, low-resourced languages (LRLs) as effectively and rapidly as possible. We propose methods based on starting with massively multilingual “seed models”, which can be trained ahead-of-time, and then continuing training on data related to the LRL. We contrast a number of strategies, leading to a novel, simple, yet effective method of “similar-language regularization”, where we jointly train on both an LRL of interest and a similar high-resourced language to prevent over-fitting to small LRL data. Experiments demonstrate that massively multilingual models, even without any explicit adaptation, are surprisingly effective, achieving BLEU scores of up to 15.5 with no data from the LRL, and that the proposed similar-language regularization method improves over other adaptation methods by 1.7 BLEU points on average over 4 LRL settings. Code is available here.
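One way to picture similar-language regularization is as a change to how fine-tuning batches are built: instead of drawing only from the small LRL corpus, examples are drawn from the LRL data together with data from a related high-resourced language. The sketch below samples uniformly from the concatenated pool, which is just one possible mixing scheme assumed here; the function name and toy data are illustrative.

```python
import random

def mixed_batches(lrl_pairs, hrl_pairs, batch_size, num_batches, rng):
    """Yield batches sampled from the concatenation of LRL and related-HRL data.

    Each item is tagged with its origin so the mix can be inspected.
    Uniform sampling over the pooled data is an assumption of this sketch.
    """
    pool = [("lrl", p) for p in lrl_pairs] + [("hrl", p) for p in hrl_pairs]
    for _ in range(num_batches):
        yield [rng.choice(pool) for _ in range(batch_size)]

# Placeholder sentence pairs: a small LRL corpus and a larger related-HRL corpus.
lrl_pairs = [("lrl sentence 1", "english 1"), ("lrl sentence 2", "english 2")]
hrl_pairs = [(f"hrl sentence {i}", f"english {i}") for i in range(20)]

for batch in mixed_batches(lrl_pairs, hrl_pairs, 4, 2, random.Random(0)):
    print([origin for origin, _ in batch])
```

With uniform sampling the larger related-language corpus dominates each batch, which is what keeps the model from over-fitting to the handful of LRL sentences.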

TranX: A Transition-based Neural Abstract Syntax Parser for Semantic Parsing and Code Generation (Demo Track)

- Authors: Pengcheng Yin, Graham Neubig.

- Time: Friday November 2, 16:30–18:00. Grand Hall.

We present TranX, a transition-based neural semantic parser that maps natural language (NL) utterances into formal meaning representations (MRs). TranX uses a transition system based on the abstract syntax description language for the target MR, which gives it two major advantages: (1) it is highly accurate, using information from the syntax of the target MR to constrain the output space and model the information flow, and (2) it is highly generalizable, and can easily be applied to new types of MR by just writing a new abstract syntax description corresponding to the allowable structures in the MR. Experiments on four different semantic parsing and code generation tasks show that our system is generalizable, extensible, and effective, registering strong results compared to existing neural semantic parsers. Project Website, Online Demo

Unsupervised Learning of Syntactic Structure with Invertible Neural Projections

- Authors: Junxian He, Graham Neubig, Taylor Berg-Kirkpatrick.

- Time: Friday November 2, 16:48–17:06. Silver Hall/Panoramic Hall.

Unsupervised learning of syntactic structure is typically performed using generative models with discrete latent variables and multinomial parameters. In most cases, these models have not leveraged continuous word representations. In this work, we propose a novel generative model that jointly learns discrete syntactic structure and continuous word representations in an unsupervised fashion by cascading an invertible neural network with a structured generative prior. We show that the invertibility condition allows for efficient exact inference and marginal likelihood computation in our model so long as the prior is well-behaved. In experiments we instantiate our approach with both Markov and tree-structured priors, evaluating on two tasks: part-of-speech (POS) induction, and unsupervised dependency parsing without gold POS annotation. On the Penn Treebank, our Markov-structured model surpasses state-of-the-art results on POS induction. Similarly, we find that our tree-structured model achieves state-of-the-art performance on unsupervised dependency parsing for the difficult training condition where neither gold POS annotation nor punctuation-based constraints are available. Code is available here.

Adapting Word Embeddings to New Languages with Morphological and Phonological Subword Representations

- Authors: Aditi Chaudhary, Chunting Zhou, Lori Levin, Graham Neubig, David R. Mortensen, Jaime Carbonell.

- Time: Saturday November 3, 17:24–17:42. Hall 100/Hall 400.

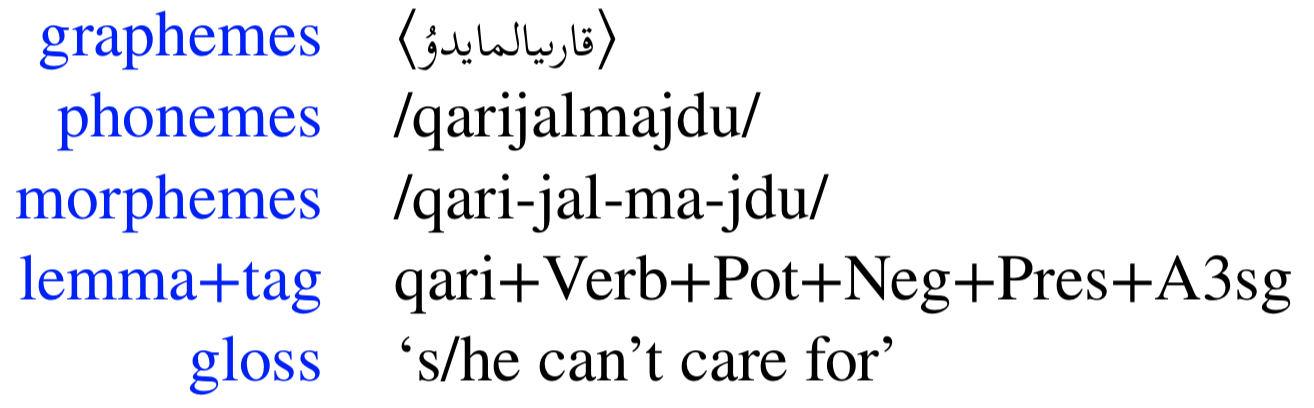

Much work in Natural Language Processing (NLP) has been for resource-rich languages, making generalization to new, less-resourced languages challenging. We present two approaches for improving generalization to low-resourced languages by adapting continuous word representations using linguistically motivated subword units: phonemes, morphemes and graphemes. Our method requires neither parallel corpora nor bilingual dictionaries and provides a significant gain in performance over previous methods relying on these resources. We demonstrate the effectiveness of our approaches on Named Entity Recognition for four languages, namely Uyghur, Turkish, Bengali and Hindi, of which Uyghur and Bengali are low-resource languages, and also perform experiments on Machine Translation. Exploiting subwords with transfer learning gives us a boost of +15.2 NER F1 for Uyghur and +9.7 F1 for Bengali. We also show improvements in the monolingual setting where we achieve (avg.) +3 F1 and (avg.) +1.35 BLEU. Code is available here.

A Tree-based Decoder for Neural Machine Translation

- Authors: Xinyi Wang, Hieu Pham, Pengcheng Yin, Graham Neubig.

- Time: Sunday November 4, 13:45–13:57. Silver Hall/Panoramic Hall.

Recent advances in Neural Machine Translation (NMT) show that adding syntactic information to NMT systems can improve the quality of their translations. Most existing work utilizes some specific types of linguistically-inspired tree structures, like constituency and dependency parse trees. This is often done via a standard RNN decoder that operates on a linearized target tree structure. However, it remains an open question which specific linguistic formalism, if any, is the best structural representation for NMT. In this paper, we (1) propose an NMT model that can naturally generate the topology of an arbitrary tree structure on the target side, and (2) experiment with various target tree structures. Our experiments show the surprising result that our model delivers the best improvements with balanced binary trees constructed without any linguistic knowledge; this model outperforms standard seq2seq models by up to 2.1 BLEU points, and other methods for incorporating target-side syntax by up to 0.7 BLEU. Code is available here.
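To show what a balanced binary tree built without linguistic knowledge can look like, here is a small sketch that recursively splits a target sentence in half. The splitting convention (the extra word goes to the left half when the length is odd) and the nested-tuple representation are assumptions of this example; the paper's exact construction may differ.

```python
def balanced_binary_tree(words):
    """Build a balanced binary tree over a word sequence as nested tuples.

    Leaves are the words themselves; when the length is odd, the left
    subtree receives the extra word (a convention assumed for this sketch).
    """
    if not words:
        raise ValueError("cannot build a tree over an empty sentence")
    if len(words) == 1:
        return words[0]
    mid = (len(words) + 1) // 2
    return (balanced_binary_tree(words[:mid]), balanced_binary_tree(words[mid:]))

def leaves(tree):
    """Read the words back off the tree, left to right."""
    if isinstance(tree, tuple):
        return leaves(tree[0]) + leaves(tree[1])
    return [tree]

sentence = "the cat sat on the mat".split()
tree = balanced_binary_tree(sentence)
print(tree)
```

The tree depends only on sentence length, not on syntax, and reading the leaves left to right recovers the original sentence.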

Workshop Papers

3rd Conference on Machine Translation (WMT18)

Parameter Sharing Methods for Multilingual Self-Attentional Translation Models

- Authors: Devendra Singh Sachan, Graham Neubig.

- Time: Thursday November 1, 17:00–17:20. Bozar Hall (Salle M).

In multilingual neural machine translation, it has been shown that sharing a single translation model between multiple languages can achieve competitive performance, sometimes even leading to performance gains over bilingually trained models. However, these improvements are not uniform; often multilingual parameter sharing results in a decrease in accuracy due to translation models not being able to accommodate different languages in their limited parameter space. In this work, we examine parameter sharing techniques that strike a happy medium between full sharing and individual training, specifically focusing on the self-attentional Transformer model. We find that the full parameter sharing approach leads to increases in BLEU scores mainly when the target languages are from a similar language family. However, even in the case where target languages are from different families where full parameter sharing leads to a noticeable drop in BLEU scores, our proposed methods for partial sharing of parameters can lead to substantial improvements in translation accuracy. Code is available here.

Contextual Encoding for Translation Quality Estimation

- Authors: Junjie Hu, Wei-Cheng Chang, Yuexin Wu, Graham Neubig.

- Time: Thursday November 1, 11:00–12:30. Bozar Hall (Salle M).

The task of word-level quality estimation (QE) consists of taking a source sentence and machine-generated translation, and predicting which words in the output are correct and which are wrong. In this paper, we propose a method to effectively encode the local and global contextual information for each target word using a three-part neural network approach. The first part uses an embedding layer to represent words and their part-of-speech tags in both languages. The second part leverages a one-dimensional convolution layer to integrate local context information for each target word. The third part applies a stack of feed-forward and recurrent neural networks to further encode the global context in the sentence before making the predictions. This model was submitted as the CMU entry to the WMT2018 shared task on QE, and achieves strong results, ranking first in three of the six tracks.

15th International Workshop on Spoken Language Translation 2018 (IWSLT 2018)

Multi-Source Neural Machine Translation with Data Augmentation

- Authors: Yuta Nishimura, Katsuhito Sudoh, Graham Neubig, Satoshi Nakamura.

- Time: Tuesday October 30, 9:00–9:30.

Best Student Paper Award! Multi-source translation systems translate from multiple languages to a single target language. By using information from these multiple sources, these systems achieve large gains in accuracy. To train these systems, it is necessary to have corpora with parallel text in multiple sources and the target language. However, these corpora are rarely complete in practice due to the difficulty of providing human translations in all of the relevant languages. In this paper, we propose a data augmentation approach to fill such incomplete parts using multi-source neural machine translation (NMT). In our experiments, results varied over different language combinations but significant gains were observed when using a source language similar to the target language.
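The filling step can be pictured as a pass over an incomplete multi-way corpus in which each missing source sentence is replaced by a machine translation produced from the sources that are present. In the sketch below, `translate` is a hypothetical callable standing in for a trained multi-source NMT system, and the dictionary-based corpus layout is an assumption made for illustration.

```python
def fill_missing_sources(corpus, source_langs, translate):
    """Return a copy of `corpus` with missing source sentences filled in.

    `corpus` is a list of dicts mapping language codes to sentences, with
    None marking a missing translation. `translate(available, lang)` is a
    hypothetical stand-in for a multi-source NMT system: it receives the
    available source sentences and the language to generate.
    """
    filled = []
    for example in corpus:
        completed = dict(example)
        available = {
            lang: example[lang]
            for lang in source_langs
            if example.get(lang) is not None
        }
        for lang in source_langs:
            if completed.get(lang) is None and available:
                completed[lang] = translate(available, lang)
        filled.append(completed)
    return filled

def stub_translate(available, lang):
    """Placeholder translator that only records what it was asked to do."""
    return "[" + lang + "<-" + "+".join(sorted(available)) + "]"

corpus = [
    {"fr": "bonjour", "es": None, "en": "hello"},
    {"fr": "merci", "es": "gracias", "en": "thanks"},
]
filled = fill_missing_sources(corpus, ["fr", "es"], stub_translate)
print(filled[0]["es"])
```

Complete examples pass through unchanged, and the original corpus is left unmodified so the augmented and unaugmented versions can be compared.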

Talks

Analyzing and Interpreting Neural Networks for NLP (BlackboxNLP)

Learning with Latent Linguistic Structure

- Speaker: Graham Neubig.

- Time: Thursday November 1, 14:00–14:50. Silver Hall/Hall 100.

Neural networks provide a powerful tool to model language, but also depart from standard methods of linguistic representation, which usually consist of discrete tag, tree, or graph structures. These structures are useful for a number of reasons: they are more interpretable, and also can be useful in downstream tasks. In this talk, I will discuss models that explicitly incorporate these structures as latent variables, allowing for unsupervised or semi-supervised discovery of interpretable linguistic structure, with applications to part-of-speech and morphological tagging, as well as syntactic and semantic parsing.