June 2008 @ Kobe, Japan

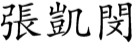

Kai-min Kevin Chang

Research Associate (Special Faculty),

Language Technologies Institute,

School of Computer Science,

Carnegie Mellon University.

Affiliated with:

Center for Cognitive Brain Imaging,

Center for the Neural Basis of Cognition

Profile: CV, Resume, Research Statement

Email: kaimin dot chang at gmail dot com

Phone: +1-412-268-1810

Fax: +1-412-268-6298

Office: Gates Hillman Center 5721 / Baker Hall 327M

Address:

Language Technologies Institute

Carnegie Mellon University

5000 Forbes Avenue

Pittsburgh, PA 15213

USA