Video Segmentation by Tracing Discontinuities in a Trajectory Embedding

1 University of Pennsylvania

2 Xi'an University

Abstract

Our goal is to segment monocular videos into moving objects and the world scene without using object detectors.

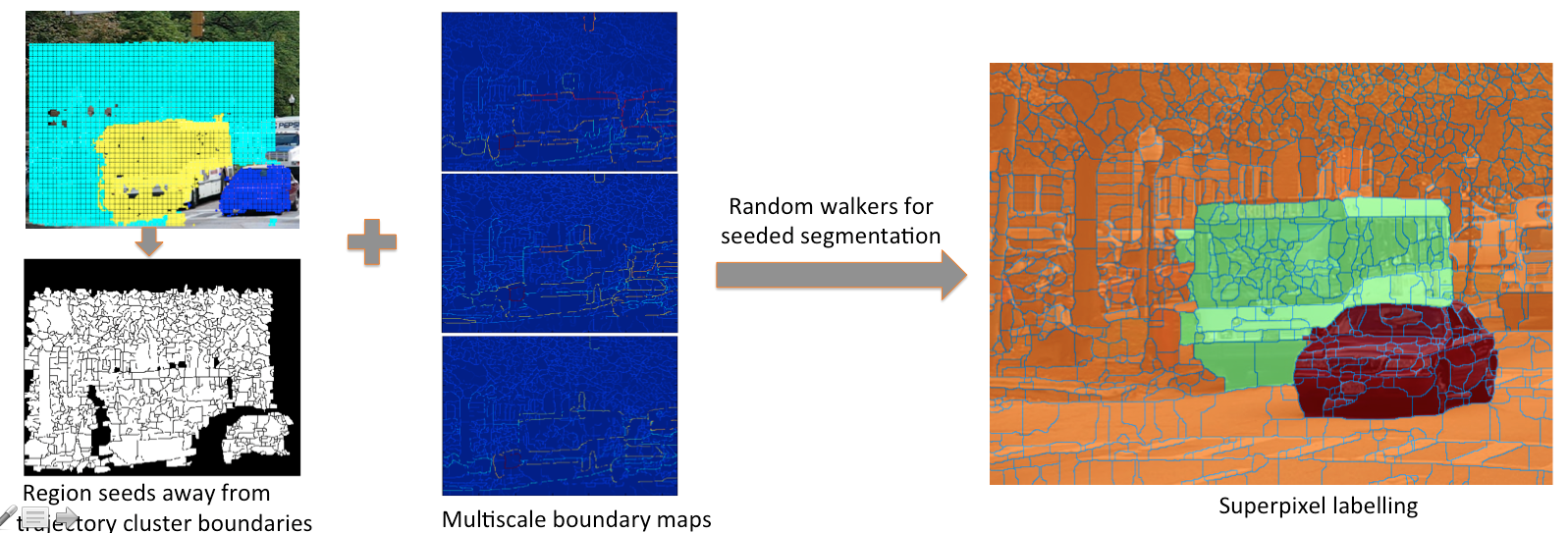

We use the long-range motion similarity of dense point trajectories, obtained by linking the optical flow fields of consecutive frames, similar to [3]. We compute a spectral embedding of the trajectories from their motion affinities and discretize it using the embedding discontinuities of [2]: discontinuity is measured between spatially neighboring trajectories and can indicate whether a cluster boundary is true or spurious. Starting from a trajectory over-clustering, we merge trajectory clusters with low discontinuity along their common boundaries. We map trajectory clusters to image regions using the random walker of [4] on a spatiotemporal superpixel graph. Seed regions are the superpixels that overlap well with trajectory clusters, and superpixel affinities are derived from multiscale static image boundary maps. In this way, we obtain the correct pixel labeling even in regions that contain few trajectories because of their low texture, and we recover from optical flow "bleeding".
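The last step of this pipeline, mapping trajectory clusters to superpixels, can be illustrated with a minimal NumPy sketch of the random walker formulation of [4]. It assumes the superpixel adjacency, the per-superpixel cluster overlap fractions and the boundary strengths are already computed. The exponential boundary-to-affinity mapping, the parameter `beta`, the 0.8 overlap threshold and all function names are hypothetical choices for illustration, not the released code.

```python
import numpy as np


def boundary_affinity(n, edges, beta=5.0):
    """Superpixel affinities from boundary strength (hypothetical form).

    edges: (i, j, b) for adjacent superpixels i, j whose shared border has
    mean boundary strength b in [0, 1], e.g. from a multiscale boundary map.
    Strong boundaries give weak affinities.
    """
    W = np.zeros((n, n))
    for i, j, b in edges:
        W[i, j] = W[j, i] = np.exp(-beta * b)
    return W


def select_seeds(overlap, thresh=0.8):
    """Hypothetical seed rule: superpixel i seeds cluster c when at least
    `thresh` of the trajectory points inside it belong to cluster c.

    overlap: (n_superpixels, n_clusters) array of fractions; rows with no
    trajectories are all zero and therefore never become seeds.
    """
    best = overlap.argmax(axis=1)
    return {int(i): int(best[i]) for i in range(overlap.shape[0])
            if overlap[i, best[i]] >= thresh}


def random_walker_labels(W, seeds):
    """Label each superpixel by the seed label its random walker most likely
    reaches first, solving L_U X = -B^T M as in the random walker of [4].

    Every unseeded connected component must touch a seed, otherwise the
    system is singular.
    """
    n = W.shape[0]
    L = np.diag(W.sum(axis=1)) - W  # graph Laplacian
    seeded_list = sorted(seeds)
    seeded = np.array(seeded_list)
    free = np.setdiff1d(np.arange(n), seeded)
    labels = sorted(set(seeds.values()))
    # Indicator matrix: row s has a 1 in the column of seed s's label.
    M = np.array([[float(seeds[s] == c) for c in labels] for s in seeded_list])
    # X[u, c] = probability that a walker from free node u first hits label c.
    X = np.linalg.solve(L[np.ix_(free, free)], -L[np.ix_(free, seeded)] @ M)
    out = np.empty(n, dtype=int)
    out[seeded] = [seeds[s] for s in seeded_list]
    out[free] = np.array(labels)[X.argmax(axis=1)]
    return out
```

Temporal edges of a spatiotemporal superpixel graph can be passed in `edges` exactly like spatial ones. Taking the arg-max over the per-label probabilities is the usual way to turn random walker probabilities into a hard labeling.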

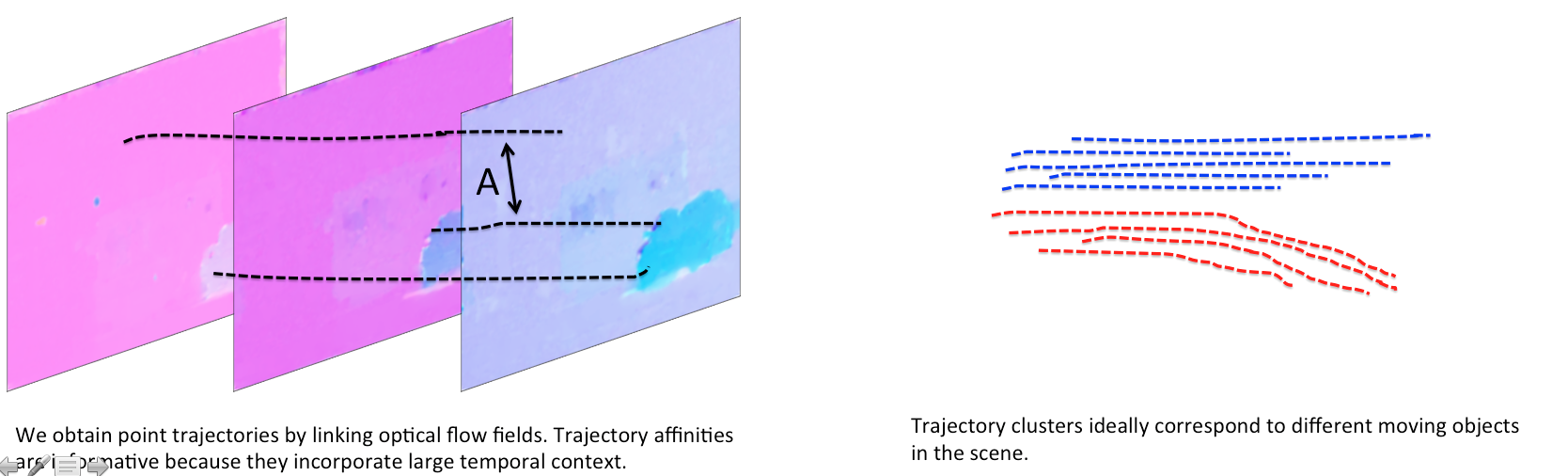

Trajectories and Motion Affinities

We obtain dense point trajectories by linking consecutive optical flow fields. A trajectory terminates when the forward-backward consistency check of the corresponding optical flow vectors fails. We compute affinities between each pair of trajectories that lie within a spatial radius of each other. Because these affinities incorporate large time intervals, they can correctly delineate objects even if the objects move similarly (or do not move at all) for a subset of frames.
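A minimal NumPy sketch of these two steps follows. It assumes flow fields are (H, W, 2) arrays of (dx, dy) displacements and uses nearest-pixel sampling for brevity. The consistency test |w + ŵ|² < α(|w|² + |ŵ|²) + β and the affinity exp(-λ·d), with d the largest squared velocity difference over the frames two trajectories share, are illustrative stand-ins in the spirit of [3]. The function names, the closest-approach radius rule and the values of `alpha`, `beta`, `lam` and `radius` are hypothetical.

```python
import numpy as np


def link_step(p, fwd, bwd, alpha=0.01, beta=0.5):
    """Advance point p = (x, y) by one frame; return None if the check fails.

    fwd, bwd: (H, W, 2) flow fields t -> t+1 and t+1 -> t, storing (dx, dy).
    Nearest-pixel sampling keeps the sketch short; bilinear is more usual.
    """
    H, W = fwd.shape[:2]
    xi, yi = int(round(p[0])), int(round(p[1]))
    if not (0 <= xi < W and 0 <= yi < H):
        return None
    w = fwd[yi, xi]
    q = np.asarray(p, dtype=float) + w
    qx, qy = int(round(q[0])), int(round(q[1]))
    if not (0 <= qx < W and 0 <= qy < H):
        return None
    w_back = bwd[qy, qx]
    # Forward and backward flow should (nearly) cancel each other out.
    err = np.sum((w + w_back) ** 2)
    if err >= alpha * (np.sum(w ** 2) + np.sum(w_back ** 2)) + beta:
        return None  # occlusion or unreliable flow: terminate the trajectory
    return q


def _common_span(sa, pa, sb, pb):
    """Positions of two trajectories on the frames they share, or None."""
    t0, t1 = max(sa, sb), min(sa + len(pa), sb + len(pb))
    if t1 <= t0:
        return None
    return pa[t0 - sa:t1 - sa], pb[t0 - sb:t1 - sb]


def motion_affinity(sa, pa, sb, pb, lam=1.0):
    """exp(-lam * d), d = largest squared velocity difference on the common span."""
    span = _common_span(sa, pa, sb, pb)
    if span is None or len(span[0]) < 2:
        return 0.0  # need at least one velocity sample
    a, b = span
    dv = np.diff(a, axis=0) - np.diff(b, axis=0)
    return float(np.exp(-lam * np.max(np.sum(dv ** 2, axis=1))))


def affinity_matrix(trajs, radius=30.0, lam=1.0):
    """trajs: list of (start_frame, (T, 2) positions); only nearby pairs get weight."""
    n = len(trajs)
    W = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            span = _common_span(*trajs[i], *trajs[j])
            if span is None:
                continue
            # Hypothetical locality rule: closest approach within `radius` pixels.
            if np.linalg.norm(span[0] - span[1], axis=1).min() > radius:
                continue
            W[i, j] = W[j, i] = motion_affinity(*trajs[i], *trajs[j], lam=lam)
    return W
```

Taking the maximum over the common time span, rather than the mean, is what lets a few frames of differing motion separate two trajectories that otherwise move together.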

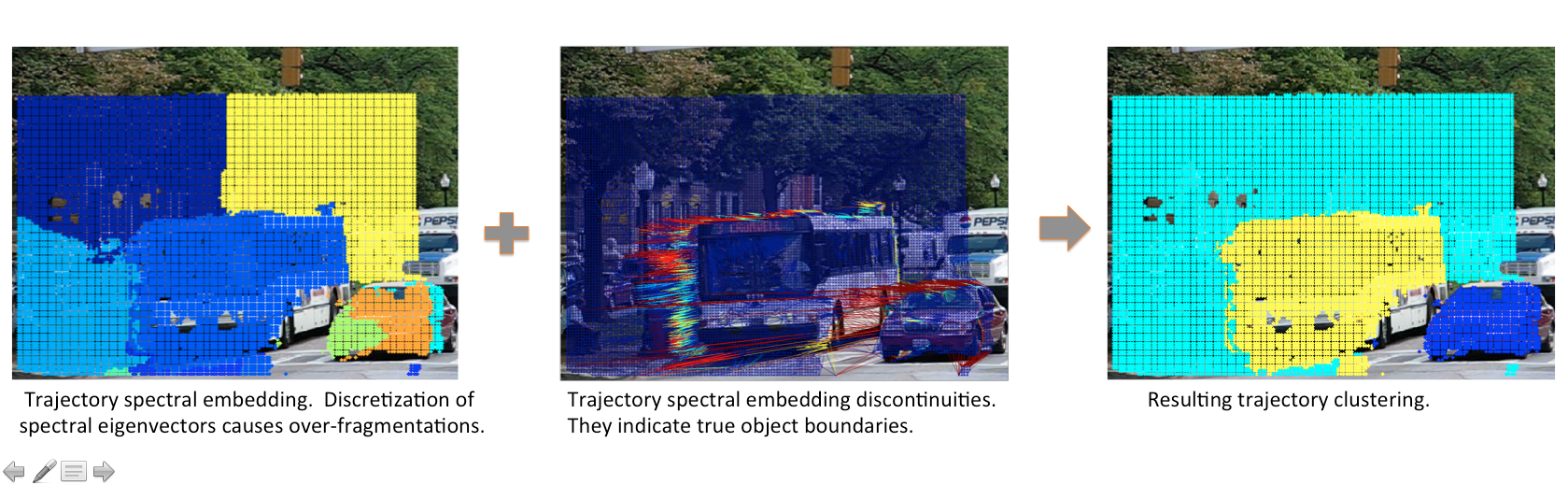

Trajectory Spectral Clustering and Discontinuities

We obtain an initial trajectory clustering by discretizing the eigenvectors of the normalized trajectory affinity matrix using the eigenvector rotation of [5]. This produces many spurious (interior) boundaries that do not correspond to object boundaries. We compute discontinuities between spatially neighboring trajectories, that is, trajectories that are neighbors in the Delaunay triangulation of the trajectory points in any video frame. We then merge clusters with low discontinuity along their common boundary.
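The sketch below illustrates the embedding and merging steps with NumPy and SciPy (`scipy.spatial.Delaunay`). It does not implement the eigenvector rotation of [5]; the initial over-clustering is taken as input. As a stand-in for the discontinuity measure of [2], it uses the Euclidean distance between the embedding rows of two Delaunay-neighboring trajectories, averaged over all such pairs on a cluster boundary. The function names and the merge threshold are hypothetical.

```python
import numpy as np
from scipy.spatial import Delaunay


def spectral_embedding(W, k):
    """Rows of the top-k eigenvectors of D^{-1/2} W D^{-1/2}, one per trajectory."""
    d = W.sum(axis=1)
    s = 1.0 / np.sqrt(np.maximum(d, 1e-12))  # guard isolated trajectories
    N = s[:, None] * W * s[None, :]
    _, vecs = np.linalg.eigh(N)  # eigenvalues in ascending order
    return vecs[:, ::-1][:, :k]


def delaunay_pairs(points):
    """Index pairs (i, j), i < j, joined by an edge of the Delaunay triangulation."""
    pairs = set()
    for tri in Delaunay(points).simplices:
        for a in range(3):
            for b in range(a + 1, 3):
                i, j = sorted((int(tri[a]), int(tri[b])))
                pairs.add((i, j))
    return pairs


def merge_clusters(emb, labels, frames, thresh):
    """Merge clusters whose mean boundary discontinuity is below `thresh`.

    frames: list of (ids, points); ids[m] is the trajectory whose point in
    that frame is points[m]. Returns one (merged) cluster id per trajectory.
    """
    total, count = {}, {}
    for ids, points in frames:
        for i, j in delaunay_pairs(points):
            a, b = ids[i], ids[j]
            ca, cb = labels[a], labels[b]
            if ca == cb:
                continue  # not a cluster boundary
            key = (min(ca, cb), max(ca, cb))
            # Stand-in discontinuity: embedding distance of the two neighbors.
            total[key] = total.get(key, 0.0) + np.linalg.norm(emb[a] - emb[b])
            count[key] = count.get(key, 0) + 1

    parent = {c: c for c in set(labels)}

    def find(c):  # union-find with path halving
        while parent[c] != c:
            parent[c] = parent[parent[c]]
            c = parent[c]
        return c

    for key in total:
        if total[key] / count[key] < thresh:
            parent[find(key[0])] = find(key[1])
    return [find(c) for c in labels]
```

Union-find makes merging transitive: a chain of low-discontinuity boundaries collapses into a single cluster, while any boundary with high average discontinuity survives.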

From Trajectory Clusters to Image Regions

The source code can be downloaded from here.

Please report comments/bugs to katef at seas.upenn.edu.

Last update: July 2013.
