Parameterized Kernel Principal Component Analysis

People

Abstract

Kernel Principal Component Analysis (KPCA) is a popular generalization of linear PCA that allows non-linear feature extraction. In KPCA, data in the input space is mapped to a (usually) higher-dimensional feature space where the data can be modeled linearly. The feature space is typically induced implicitly by a kernel function, and linear PCA in the feature space is performed via the kernel trick. However, because the feature space is implicit, some extensions of PCA, such as robust PCA, cannot be directly generalized to KPCA. This paper presents a technique to overcome this problem and extends it to a unified framework for treating noise, missing data, and outliers in KPCA. Our method is based on a novel cost function to perform inference in KPCA. Extensive experiments on both synthetic and real data show that our algorithm outperforms existing methods.
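For readers unfamiliar with the kernel trick mentioned above, the following is a minimal sketch of standard KPCA in NumPy. It is not the method proposed in the paper. The RBF kernel, the `gamma` value, and the function names `rbf_kernel` and `kpca_fit` are illustrative assumptions that do not appear in the source.

```python
import numpy as np


def rbf_kernel(X, Y, gamma):
    """RBF kernel matrix between the rows of X and the rows of Y (assumed kernel choice)."""
    sq_dists = (X ** 2).sum(axis=1)[:, None] + (Y ** 2).sum(axis=1)[None, :] - 2.0 * X @ Y.T
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def kpca_fit(X, n_components, gamma):
    """Standard KPCA: eigendecompose the centered kernel matrix of the training data."""
    n = X.shape[0]
    K = rbf_kernel(X, X, gamma)

    # Center the data in feature space without ever computing the feature map.
    one_n = np.full((n, n), 1.0 / n)
    Kc = K - one_n @ K - K @ one_n + one_n @ K @ one_n

    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(Kc)
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # Rescale so that each principal axis has unit norm in feature space.
    # This assumes the retained eigenvalues are strictly positive.
    alphas = eigvecs / np.sqrt(eigvals)
    return alphas, eigvals, Kc


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X_demo = rng.normal(size=(50, 2))          # hypothetical toy data
    alphas, eigvals, Kc = kpca_fit(X_demo, n_components=2, gamma=0.5)
    Z = Kc @ alphas                            # projections of the training points
    print(Z.shape)
```

The sketch only ever touches the kernel matrix, never explicit feature-space coordinates. That is the implicitness the abstract refers to: principal components are available only as coefficient vectors over the training points.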

Citation

Fernando de la Torre, Minh Hoai Nguyen, "Parameterized Kernel Principal Component Analysis: Theory and Applications to Supervised and Unsupervised Image Alignment", Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2008.

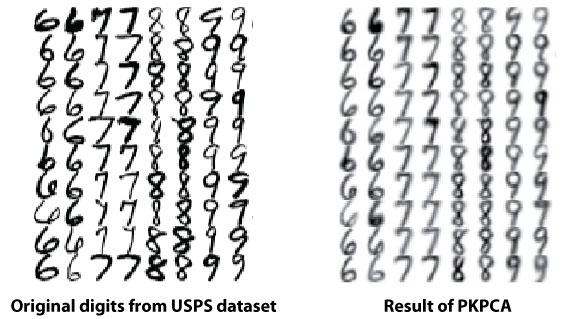

Results

Acknowledgements and Funding

This work was partially supported by National Institute of Justice award 2005-IJ-CX-K067 and National Institutes of Health grant R01 MH 051435.

Copyright notice

Human Sensing Lab