|

|

|

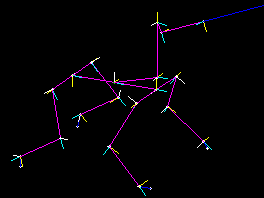

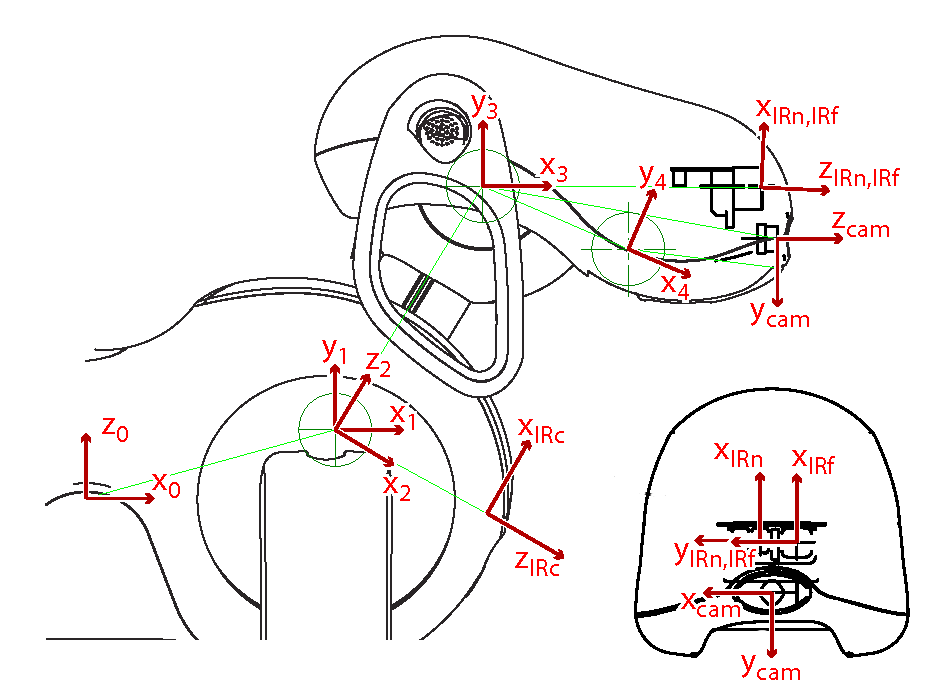

HeadOffset+TiltOffset. You

can see that its orientation differs from the base reference frame:

the x1 axis points forward, but it's the y1 axis

that points up. The head tilts about the z1 axis.

(Tekkotsu follows the Denavit-Hartenberg

convention, so all joints move about their reference frame's z

axis.) A joint reference frame does not rotate with its joint, so the

x1, y1 and z1 axes remain fixed with

respect to the base frame when the head tilts up or down.

The next joint in the head chain is the pan joint. The pan joint

reference frame has its origin at the same point as the tilt joint.

Its x2 and z2 axes rotate with the tilt angle

(but not the pan angle). In this reference frame, referred to as

HeadOffset+PanOffset, the head pans about the

z2 axis.

The nod joint's reference frame is referred to as

HeadOffset+NodOffset, and its x3 and

y3 axes rotate with the tilt and pan angles (but not the

nod angle). The head nods about the z3 axis.

At the distal end of the head chain is an additional reference frame

corresponding to the camera rather than a joint, so it has a special

name: CameraFrameOffset. Similarly, the chains for the

three IR sensors end in joints named NearIRFrameOffset,

FarIRFrameOffset, and ChestIRFrameOffset.

Distance from an IR sensor corresponds to depth along the z axis

(zIRn, zIRf, or zIRc,

respectively.)

In addition to the joint reference frames, which do not rotate

with their corresponding joints, Tekkotsu also provides a set of

link reference frames whose axes have the same origin point,

but do rotate with their joints. The names are the same as the

joint reference frame names. You specify which kind of reference

frame to use, joint or link, in the name of the function you call,

e.g., jointToBase vs. linkToBase.

linkToBase, converts from an arbitrary link reference

frame to the base frame. The inverse transformation is computed by

baseToLink. Tekkotsu provides for conversion between

reference frames on arbitrary chains. The general function is

linkToLink.

The global variable kine holds a Kinematics instance

whose joint angles are determined from current world state values,

udpated automatically from the robot's sensors. We can use this

instance to compute various reference frame transformations. Let's

start with the simplest possible transformation:

cout << "Transform Base to Base:" << endl;

cout.precision(3);

cout << setw(7) << kine->jointToBase(BaseFrameOffset) << endl;

The rotational submatrix (shown in red) is a 3x3 identity matrix, the translational component (shown in blue) is zero, and the entire transformation matrix is a 4x4 identity matrix.Transform Base to Base: 1.000 0.000 0.000 0.000 0.000 1.000 0.000 0.000 0.000 0.000 1.000 0.000 0.000 0.000 0.000 1.000

|

Now consider the transformation from the tilt to the base reference frame, i.e., from reference frame 1 to reference frame 0 on the head chain. The table at left gives the full definition of the head, mouth, and IR chains. The notation indicates that reference frame 1 (tilt) is defined with respect to reference frame 0 (base), with an x offset of 67.5 cm and z offset of 19.5 cm. The pan joint reference frame has the same origin as the tilt joint, so the x,y,z offsets from reference frame 2 to reference frame 1 are all zero. The nod joint (reference frame 3) is offset 80 mm along z2, the pan joint reference frame's z axis. The camera reference frame (cam) is defined with respect to reference frame 3 (nod), with an x offset of 81.06 cm and y offset of -14.6 cm. |

The code below computes both joint and link transformation matrices,

so you can compare them. Each time DstBehavior is activated it will

use the current joint angles to compute the matrices. Note that this

behavior automatically deactivates itself, by calling DoStart from

within DoStop.

|

The tilt frame and the base frame have the same orientation, but two of the axes are switched. In the tilt frame the y1 axis points up, while in the base frame it's the the z0 axis that points up. And in the tilt frame the z1 axis points to the left, while in the base frame the y0 axis points to the right. So the rotation submatrix above is a permutation of the identity matrix, with a sign flip in the second row. Note that the translational component of the transformation matrix (shown in blue) exactly matches the values in the "ERS-7 Head" table above. Since the angle of the tilt joint is close to zero, the linkToBase transformation matrix matches the jointToBase matrix. Any difference between their rotational components (green vs. red), e.g., the green values of +/-0.022 shown above, is due to the head tilt value not being precisely 0.000. Now let's use the Posture Editor to tilt the head down by 30 degrees and see what happens:DstBehavior is starting up. Head tilt is 1.25 degrees. tilt jointToBase= 1.000 0.000 0.000 67.500 0.000 0.000 -1.000 0.000 0.000 1.000 0.000 19.500 0.000 0.000 0.000 1.000 tilt linkToBase= 1.000 -0.022 0.000 67.500 0.000 0.000 -1.000 0.000 0.022 1.000 0.000 19.500 0.000 0.000 0.000 1.000 DstBehavior is shutting down.

The jointToBase transformation matrix is the same as before, since the tilt joint reference frame does not move with the tilt joint. But the link reference frame does, so the linkToBase transformation matrix now indicates a rotation of -30 degrees in the x0-z0 plane, shown in fuchsia above. You can verify that cos(-30o) is 0.866, and sin(-30o) is 0.500, closely matching the matrix values. (The discrepancy reflects the measured head angle being only -29.5 degrees in this example.) Explore more:DstBehavior is starting up. Head tilt is -29.5 degrees. tilt jointToBase= 1.000 0.000 0.000 67.500 0.000 0.000 -1.000 0.000 0.000 1.000 0.000 19.500 0.000 0.000 0.000 1.000 tilt linkToBase= 0.871 0.492 0.000 67.500 0.000 0.000 -1.000 0.000 -0.492 0.871 0.000 19.500 0.000 0.000 0.000 1.000 DstBehavior is shutting down.

jointToBase(HeadOffset+PanOffset) look like? (You should

be able to figure this out on paper.)

kine->jointToBase(CameraFrameOffset).i() *

kine->jointToBase(CameraFrameOffset) ?

kine->baseToJoint(CameraFrameOffset) *

kine->jointToBase(CameraFrameOffset) ?

kine->jointToJoint(HeadOffset+TiltOffset,HeadOffset+NodOffset)

* kine->baseToJoint(HeadOffset+TiltOffset) ?

kine->baseToJoint(HeadOffset+TiltOffset) *

kine->jointToJoint(HeadOffset+TiltOffset,HeadOffset+NodOffset)

instead?

jointToJoint

function produces very different results (not a mere sign change or

matrix inverse) when mapping from tilt to nod vs. from nod to tilt.

Why is that? (Hint: which joint moves in the other joint's reference

frame?)

|

|

|

getJointInterestPoint(BaseFrameOffset,"HeadButton") or

getLinkInterestPoint(BaseFrameOffset,"HeadButton"). (The

two are equivalent for the base frame.) Notice that the interest

point name is passed as a string. This string can also be a

comma-separated list of interest points, in which case their

coordinates are averaged together. So to get the coordinates of the

tip of the lower lip in the reference frame of the camera, you would

write

getJointInterestPoint(CameraFrameOffset,"LowerLeftLowerLip,LowerRightLowerLip").

The result is a 4x1 ColumnVector.

Exercise: Measuring Distance Using Interest Points

The program below prints out the distance between the two front paws.

It does this by calculating the coordinates of two paw interest points

in the base frame. It then subtracts one from the other. The

distance is the norm of the result.

This behavior uses a recurring timer established by DoStart to wake up

once each second. The inter-paw distance is computed and printed in

processEvent. The distance is measured between

LowerInnerMiddleLFrShin and

LowerInnerMiddleRFrShin, both of which are marked as B in

the leg interest point diagram discussed in the next section.

|

RBkLegOffset+ElevatorOffset, and it rotates about

the zh2R axis to move the leg closer to or further away

from the body.

Axis subscripts f and h indicate fore and hind legs; L and R indicate left and right legs. Italics in table at right indicate estimated values. |

|

PawFrameOffset and

LegOrder_t. The reference frame for the ball of the

right hind leg is named PawFrameOffset+RBkLegOrder.

Body and leg interest points are shown in the diagram excerpt below

(click for full diagram.) The naming scheme in the table

exploits the multiple symmetries of the body to compactly express the

names of 94 interest points. For example, point H indicates 8

interest points. One of these, the lower outer front edge of the left

front leg's thigh, is named "LowerOuterFrontLFrThigh".

|

|

lookAtPoint method of HeadPointerMC, which

accepts x, y, and z coordinates in the base reference frame. All we

need to do is calculate the coordinates of the tip of the paw in the

base frame. This is easily accomplished using

getJointInterestPoint. The interest point name is

"ToeLFrPaw".

This program uses two motion commands. One relaxes the left front leg

(by zeroing the joint forces) so you can move the leg easily. The other

moves the head to keep it 30 mm from the coordinates we calculate

for the left front paw. Each time the world state is updated, new

joint angles are available; a sensor event will be thrown which we can

detect with processEvent. We respond by calling

getJointInterestPoint to get the new paw coordinates in

the base frame, and then update the headpointer motion command.

To run this demo, start up DstBehavior and turn off Emergency stop

mode. Press the RawCam button in the ControllerGUI to turn on the

camera viewer . Then grasp the left front leg and move it slowly.

The head should follow the paw. The paw should remain roughly

centered in the camera's field of view, as in the image at right.

A more elaborate version of this demo, which allows you to select

which paw to stare at by pressing the button on the ball of the foot,

is included in Tekkotsu. You can find it at "Root Control > Mode Switch

> Kinematics Demos > New Stare At Paw".

To run this demo, start up DstBehavior and turn off Emergency stop

mode. Press the RawCam button in the ControllerGUI to turn on the

camera viewer . Then grasp the left front leg and move it slowly.

The head should follow the paw. The paw should remain roughly

centered in the camera's field of view, as in the image at right.

A more elaborate version of this demo, which allows you to select

which paw to stare at by pressing the button on the ball of the foot,

is included in Tekkotsu. You can find it at "Root Control > Mode Switch

> Kinematics Demos > New Stare At Paw".

|

|

|

|