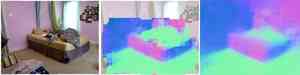

Data-Driven 3D Primitives for Single Image Understanding

(Training Code)

The Important Stuff

Downloads:

Code

Natural World Data (906MB .zip; from Hays and Efros, CVPR 2008)

Contact:

David Fouhey (please note that we cannot provide support)

This is a reference version of the training code. It also includes a dataset setup script that downloads the NYU v2 dataset and prepares it in the form the training code expects.

Setup

- Download the code and the data and unpack each.

- Follow the README inside the code folder. You will first need to run a setup script that will download and extract the data (NYU v2 dataset). You can then run the training code.

- For inference, download the inference code and follow its instructions for placing your trained primitives where the inference package can read them.
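The first step above (unpacking the code and data downloads) can be scripted. The sketch below is a minimal example, assuming both downloads are ordinary .zip files; the archive names and the `workspace` folder in the usage note are hypothetical placeholders, and this helper is not part of the distributed code. The dataset setup script and training are still run as the README in the code folder describes.

```python
import zipfile
from pathlib import Path


def unpack_archives(archives, dest):
    """Extract each .zip archive into its own subfolder of dest.

    Returns the list of folders that were created or filled.
    Archive names are whatever the downloaded files are called;
    nothing here is specific to this project's packaging.
    """
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    extracted = []
    for archive in map(Path, archives):
        # One subfolder per archive, named after the file minus ".zip".
        target = dest / archive.stem
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
        extracted.append(target)
    return extracted
```

For example, `unpack_archives(["code.zip", "natural_world_data.zip"], "workspace")` (hypothetical file names) would leave `workspace/code` and `workspace/natural_world_data` side by side. Keeping each archive in its own folder avoids one archive's files overwriting another's.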

Acknowledgments

The data used by default is the NYU Depth v2 Dataset, collected by Nathan Silberman, Pushmeet Kohli, Derek Hoiem and Rob Fergus.

This code builds heavily on:

- Unsupervised Discovery of Mid-Level Discriminative Patches, S. Singh, A. Gupta, and A.A. Efros.

- Discriminatively trained deformable part models, R.B. Girshick, P.F. Felzenszwalb, and D. McAllester (feature extraction and convolution).

- LIBSVM, C.-C. Chang and C.-J. Lin (SVM training).

- Bilateral filter implementation, Douglas R. Lanman (bilateral filtering in dataset processing).