05-830, User Interface Software, Spring 2001

The Composer

Software Agents to change musical composition

Markus Flueckiger

Introduction

The Composer is a benchmark that investigates how user interface

agents can be used to compose simple songs.

I don't know of any similar interface and therefore cannot give a reference. There are, however, many products for composing and score notation on the market, and they often provide intelligent features such as automatic rhythms and harmonic support. Many other products use software agents, e.g. the grammar and spelling correction in Microsoft Word.

The primary focus of this benchmark is the cooperation between a user and an interface agent, and between interface agents, which happens (quasi-)parallel to the user's input. The benchmark also needs some basic 2D drawing features to draw the score, simple text-editing operations to edit the score (insert a note, delete a note, overwrite a note, select a couple of notes) and some simple form of audio output.

Specification

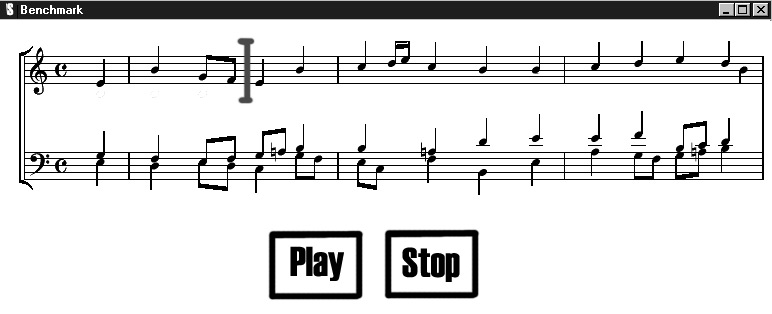

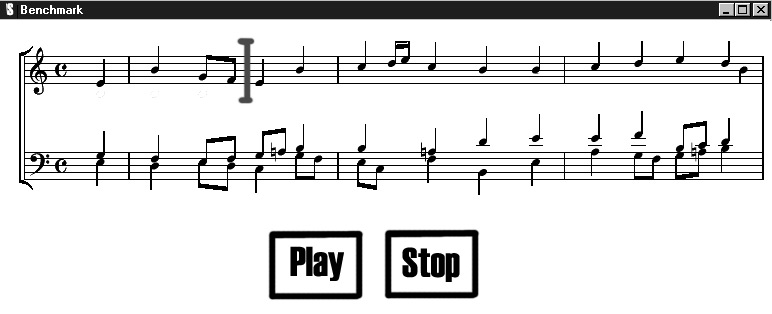

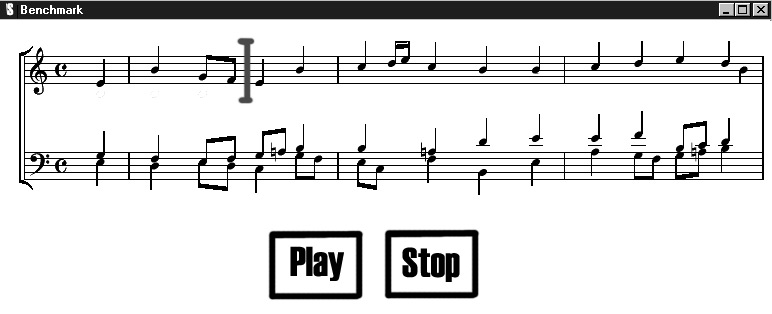

The following image shows an illustration of the main screen:

The main screen shows a representation of a musical score. The user can add notes of different lengths as well as pauses to the score. Agents complete and change the composer's idea to bring in an element of unpredictability and support. Editing works much like text editing: cursor keys move the caret, and other keys insert a note at the caret or delete the note under the caret.
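
The text-editor analogy can be made concrete with a small model. The following is a minimal sketch, assuming a hypothetical `MelodyEditor` class that stores one track as a list of note names and the caret as an index into that list. None of these names come from the specification.

```python
class MelodyEditor:
    """Hypothetical caret-based editor for one track (names are illustrative)."""

    def __init__(self):
        self.notes = []   # e.g. ["C4", "E4", "rest"]
        self.caret = 0    # index of the note under the caret; len(notes) = end

    def move(self, delta):
        # Clamp so the caret never leaves the range [0, len(notes)].
        self.caret = max(0, min(len(self.notes), self.caret + delta))

    def insert(self, note):
        # Insert at the caret and step past the new note, as a text editor does.
        self.notes.insert(self.caret, note)
        self.caret += 1

    def delete(self):
        # Delete the note under the caret, if there is one.
        if self.caret < len(self.notes):
            del self.notes[self.caret]

    def overwrite(self, note):
        # Replace the note under the caret, or append when the caret is at the end.
        if self.caret < len(self.notes):
            self.notes[self.caret] = note
        else:
            self.notes.append(note)
        self.caret += 1


editor = MelodyEditor()
for name in ["C4", "E4", "G4"]:
    editor.insert(name)
editor.move(-2)          # caret now sits on "E4"
editor.delete()          # removes "E4"; caret now sits on "G4"
editor.overwrite("A4")   # replaces "G4"
```

Moving between the two tracks would need a second coordinate for the caret; that is left out here to keep the sketch short.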

Functionality:

display a score sheet

use cursor and other keys to enter notes and pauses. This needs a caret

that can be moved between notes and between tracks.

Add a new note, delete a note, select a note, mark a note as unchangeable.

provide agents that edit the score in parallel to the user

It must be clear when agents make changes: additions and changes must be communicated to the user, e.g. by flashing, bolding, inverting, and so on. Agents can leave changes visible for a certain amount of time so that the user can see what has changed recently, or they can just signal the changes.

The user must also be able to mark a selected note in the arpeggio track as fixed (use bold, shades of gray etc. to display this). While the user has a note selected, the agents are not allowed to change anything that affects that note or its appearance.

To play back the composition, functions for play all and stop are needed.
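
Two of the requirements above (agent changes stay visible for a while, and fixed or selected notes are off limits to agents) can be sketched together. This is only one possible approach: the class `ArpeggioTrack`, its fields, and the three-second highlight duration are assumptions made for illustration, since the specification gives no names and no duration. Time is passed in explicitly so the behaviour is easy to follow.

```python
class ArpeggioTrack:
    """Hypothetical arpeggio track that records when agents change a slot."""

    HIGHLIGHT_SECONDS = 3.0  # assumed value; the specification gives no duration

    def __init__(self, size):
        self.slots = [None] * size   # one chord (or None) per slot
        self.fixed = set()           # slots the user marked as fixed
        self.selected = None         # slot currently selected by the user
        self.changed_at = {}         # slot index -> time of last agent change

    def agent_write(self, index, chord, now):
        # Agents must not touch fixed slots or the slot the user has selected.
        if index in self.fixed or index == self.selected:
            return False
        self.slots[index] = chord
        self.changed_at[index] = now
        return True

    def highlighted(self, now):
        # Slots changed recently enough that the display should still mark them.
        return sorted(i for i, t in self.changed_at.items()
                      if now - t < self.HIGHLIGHT_SECONDS)


track = ArpeggioTrack(4)
track.fixed.add(1)       # user marks slot 1 as fixed
track.selected = 2       # user currently has slot 2 selected
track.agent_write(0, ("C", "E", "G"), now=0.0)   # accepted
track.agent_write(1, ("F", "A", "C"), now=0.0)   # refused: fixed
track.agent_write(2, ("G", "B", "D"), now=0.0)   # refused: selected
track.agent_write(3, ("G", "B", "D"), now=2.0)   # accepted
```

A drawing routine could call `highlighted(now)` on every repaint and render those slots in bold or inverted, and render the slots in `fixed` in a different shade.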

Restrictions to make it feasible:

Notation capabilities:

The aim is not to create a printable score sheet, but to look at the interaction

style, so make it simple:

Time signature is 4/4 and fixed

Number of tracks is 2 and fixed

The key is fixed to C, no sharp or flat notes are possible

Notes and pauses can have the following lengths: 1, 1/2, 1/4, 1/8, plus the dotted notes/pauses 1+1/2, 1/2+1/4, 1/4+1/8.

Notes always stand alone; no connecting beams are possible.

No other notation capabilities are needed.

Two tracks only: a melody track and an arpeggio track.

The melody track contains only one voice, so no notes are played in parallel

for that track. The arpeggio track can have several notes in parallel,

but from an editing point of view they are treated as a unit.

If notes are in parallel, they have the same length.
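
The notation restrictions above translate into a very small data model. The sketch below is one way to express them, assuming a hypothetical `Event` type that holds zero pitches (a pause), one pitch (a melody note), or several pitches (parallel arpeggio notes treated as a unit). Octaves are ignored for brevity, which is a simplification and not part of the specification.

```python
from dataclasses import dataclass
from fractions import Fraction

# Allowed lengths as fractions of a whole note: plain values and dotted values.
ALLOWED_LENGTHS = {
    Fraction(1), Fraction(1, 2), Fraction(1, 4), Fraction(1, 8),
    Fraction(3, 2), Fraction(3, 4), Fraction(3, 8),
}
# Key of C with no sharps or flats: only the seven natural note names.
NATURAL_PITCHES = {"C", "D", "E", "F", "G", "A", "B"}


@dataclass(frozen=True)
class Event:
    """A pause, a single note, or several parallel notes edited as one unit."""
    pitches: tuple      # () means a pause
    length: Fraction    # one length per event, so parallel notes always share it

    def __post_init__(self):
        if self.length not in ALLOWED_LENGTHS:
            raise ValueError(f"unsupported length: {self.length}")
        for pitch in self.pitches:
            if pitch not in NATURAL_PITCHES:
                raise ValueError(f"unsupported pitch: {pitch}")


def total_length(track):
    """Sum of all event lengths, in whole notes (1 equals one 4/4 measure)."""
    return sum((event.length for event in track), Fraction(0))


melody = [
    Event(("C",), Fraction(1, 4)),
    Event((), Fraction(1, 8)),          # a pause
    Event(("E",), Fraction(3, 8)),      # dotted quarter: 1/4 + 1/8
    Event(("G",), Fraction(1, 4)),
]
chord = Event(("C", "E", "G"), Fraction(1, 2))   # parallel notes, one length
```

Using exact fractions avoids rounding problems when checking whether a track fills whole measures.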

Composing capabilities:

The emphasis lies on the interaction style and not on the quality of the music: the resulting music doesn't need to sound great!

Interaction and agent functionality

The user only edits the melody track.

The user can mark things on the arpeggio track as fixed so that the system does not change them. If several notes are notated as parallel, they are treated as a unit.

One agent derives the harmony and chords; a second agent applies arpeggio patterns to those chords. An easy way to apply chords and arpeggio patterns is to define a small number of them and select among them according to some simple cues found in the melody track, supported by a random number.

Agents only make changes on the arpeggio track.

Agents work as much in parallel to the user as possible; they do not need to wait for the user's response. An agent might therefore wake up at specific intervals and reconsider its results for the lines of the score.
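
The agent behaviour described above can be sketched as two small functions plus a periodic runner. Everything here is an assumption made for illustration: the chord table, the arpeggio patterns, the cue (one melody note per slot), the one-second interval, and the names `harmony_agent`, `arpeggio_agent` and `AgentRunner` are not given by the specification.

```python
import random
import threading

# A small, assumed chord table for C major: chord name -> its three notes.
CHORDS = {"C": ("C", "E", "G"), "F": ("F", "A", "C"),
          "G": ("G", "B", "D"), "Am": ("A", "C", "E")}
# Assumed arpeggio patterns: orders in which the chord notes are played.
PATTERNS = [(0, 1, 2, 1), (0, 2, 1, 2), (2, 1, 0, 1)]


def harmony_agent(melody, rng):
    """For each melody cue pick, at random, a chord that contains that note."""
    result = []
    for note in melody:
        candidates = sorted(n for n, tones in CHORDS.items() if note in tones)
        result.append(rng.choice(candidates) if candidates else "C")
    return result


def arpeggio_agent(chords, fixed, current, rng):
    """Rewrite every slot that the user has not marked as fixed."""
    updated = list(current) + [None] * (len(chords) - len(current))
    for i, name in enumerate(chords):
        if i in fixed:
            continue                      # leave user-fixed slots alone
        tones = CHORDS[name]
        pattern = rng.choice(PATTERNS)
        updated[i] = tuple(tones[k] for k in pattern)
    return updated


class AgentRunner:
    """Wakes up at a fixed interval and recomputes the arpeggio track."""

    def __init__(self, score, interval=1.0, seed=None):
        self.score = score                # dict: "melody", "arpeggio", "fixed"
        self.interval = interval
        self.rng = random.Random(seed)
        self.lock = threading.Lock()      # editor code should hold this too
        self._stop = threading.Event()

    def step(self):
        with self.lock:
            chords = harmony_agent(self.score["melody"], self.rng)
            self.score["arpeggio"] = arpeggio_agent(
                chords, self.score["fixed"], self.score["arpeggio"], self.rng)

    def run(self):
        # Event.wait returns False on timeout and True once stop() was called.
        while not self._stop.wait(self.interval):
            self.step()

    def start(self):
        threading.Thread(target=self.run, daemon=True).start()

    def stop(self):
        self._stop.set()


score = {"melody": ["C", "D", "E"], "arpeggio": [None, ("X",), None], "fixed": {1}}
runner = AgentRunner(score, seed=1)
runner.step()   # one agent pass; runner.start() would repeat this in the background
```

The user's editing code would take `runner.lock` while modifying the score, so the agents never see a half-finished edit. A real implementation would also skip the slot the user currently has selected.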

Sound output:

Any way will do; just make it audible.
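
Since any audible output is acceptable, one low-effort option is to render the melody as sine tones into a WAV file and play it with whatever player the platform offers. The sketch below uses only the Python standard library. The sample rate, the tempo (two seconds per whole note), the function names, and the output file name are assumptions; the frequencies are the usual equal-temperament values for the octave starting at middle C with A at 440 Hz.

```python
import math
import struct
import wave

SAMPLE_RATE = 22050
# Natural notes of the octave starting at middle C, in Hz (A = 440 Hz).
FREQUENCIES = {"C": 261.63, "D": 293.66, "E": 329.63, "F": 349.23,
               "G": 392.00, "A": 440.00, "B": 493.88}


def render(events, whole_note_seconds=2.0):
    """Turn (pitch or None, length) pairs into 16-bit mono samples."""
    samples = []
    for pitch, length in events:
        count = int(SAMPLE_RATE * whole_note_seconds * length)
        for n in range(count):
            if pitch is None:
                value = 0.0               # a pause is rendered as silence
            else:
                angle = 2 * math.pi * FREQUENCIES[pitch] * n / SAMPLE_RATE
                value = math.sin(angle)
            samples.append(int(value * 0.5 * 32767))   # half of full volume
    return samples


def write_wav(path, samples):
    """Write the samples as a mono, 16-bit PCM WAV file."""
    with wave.open(path, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(SAMPLE_RATE)
        out.writeframes(struct.pack(f"<{len(samples)}h", *samples))


# Usage: a quarter note C, an eighth pause, then a quarter note G.
# write_wav("composer_demo.wav", render([("C", 0.25), (None, 0.125), ("G", 0.25)]))
```

Parallel notes on the arpeggio track could be handled by summing the sine values of all pitches in a chord before scaling.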