Carnegie Mellon Researchers Create Brand Associations By Mining Millions of Images From Social Media Sites

Technique Complements Online Text Data Now Analyzed by Marketers

Byron Spice | Thursday, December 5, 2013

PITTSBURGH—The images people share on social media — photos of favorite products and places, or of themselves at bars, sporting events and weddings — could be valuable to marketers assessing their customers’ “top-of-mind” attitudes toward a brand. Carnegie Mellon University researchers have taken a first step toward this capability in a new study in which they analyzed five million such images.

Eric Xing, associate professor of machine learning, computer science and language technologies, and Gunhee Kim, then a Ph.D. student in computer science, looked at images associated with 48 brands in four categories — sports, luxury, beer and fast food. The images were obtained through popular photo sharing sites such as Pinterest and Flickr.

Their automated process unsurprisingly produced clusters of photos that are typical of certain brands: watch images with Rolex, tartan plaid with Burberry. But some of the highly ranked associations underscored the kind of information that is particular to images, and especially to images from social media sites.

For instance, clusters for Rolex included images of horse-riding and auto-racing events, which were sponsored by the watchmaker. Many wedding clusters were highly associated with the French fashion house of Louis Vuitton. Both instances, Kim noted, are events where people tend to take and share lots of photos, each of which is an opportunity to show brands in the context in which they are used and experienced.

Marketers are always trying to get inside the head of customers to find out what a brand name causes them to think or feel. What does “Nike” bring to mind? Tiger Woods? Shoes? Basketball? Questionnaires have long been used to gather this information but, with the advent of online communities, more emphasis is being placed on analyzing texts that people post to social media.

“Now, the question is whether we can leverage the billions of online photos that people have uploaded,” said Kim, who joined Disney Research Pittsburgh after completing his Ph.D. earlier this year. Digital cameras and smartphones have made it easy for people to snap and share photos from their daily lives, many of which relate in some way to one brand or another.

“Our work is the first attempt to perform such photo-based association analysis,” Kim said. “We cannot completely replace text-based analysis, but already we have shown this method can provide information that complements existing brand associations.”

Kim will present the research Dec. 7 at the IEEE Workshop on Large Scale Visual Commerce in Sydney, Australia, where he will receive a Best Paper Award, and at WSDM 2014, an international conference on search and data mining on the Web, Feb. 24-28 in New York City.

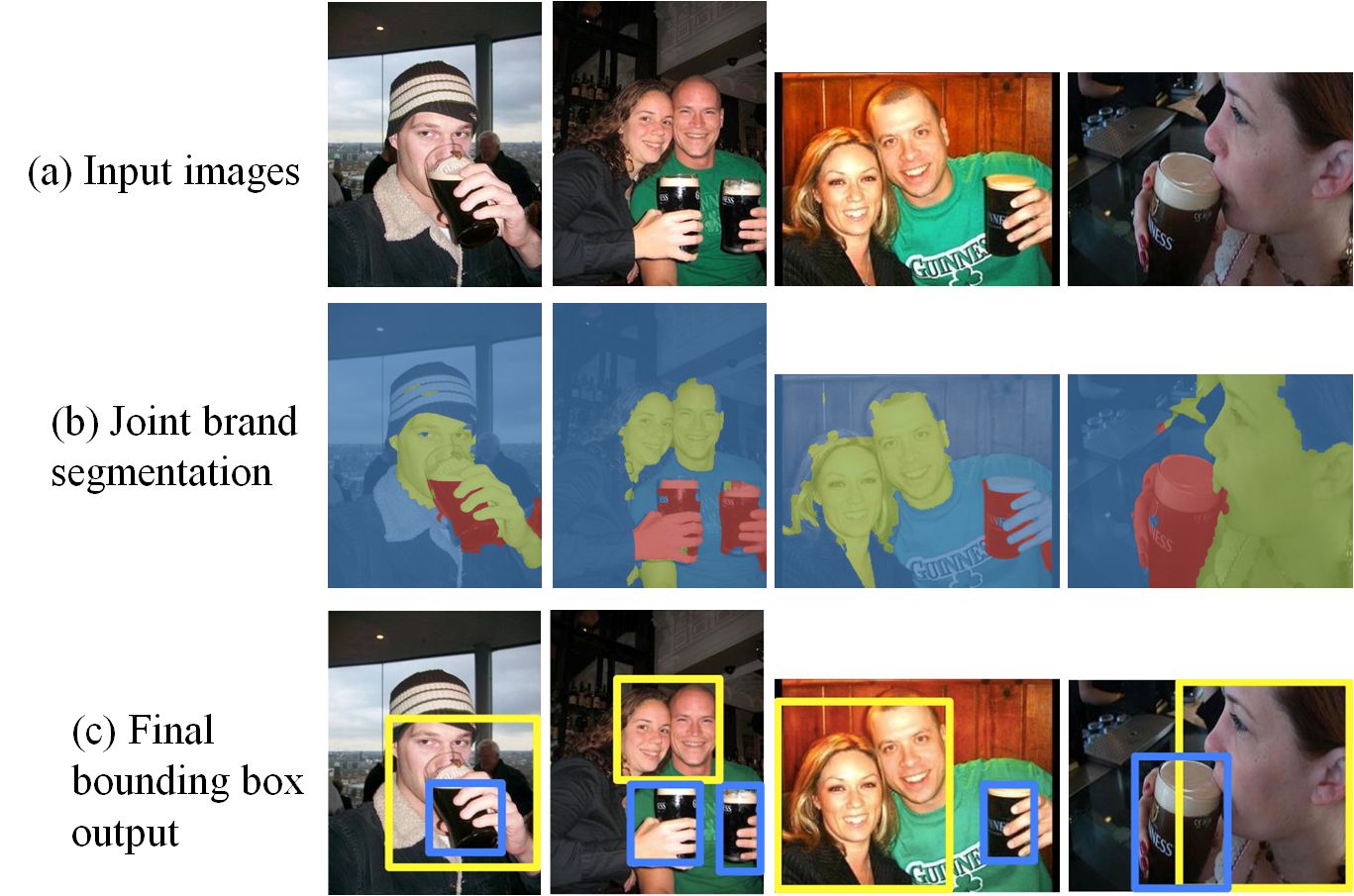

Kim and Xing obtained photos that people had shared and tagged with one of 48 brand names. They developed a method for analyzing the overall appearance of the photos and clustering similar-appearing images together, yielding the core visual concepts associated with each brand. They also developed an algorithm that then isolates the portion of the image associated with the brand, such as a Burger King sign along a highway or adidas apparel worn by someone in a photo.
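To make the clustering step concrete, here is a minimal Python sketch of the general idea: group photos by appearance, then rank the groups by size so the largest ones surface as a brand's candidate visual concepts. It is an illustration only, not the researchers' method. Plain k-means stands in for whatever clustering they used, and the photo names, the two-number "appearance descriptors" and the helper functions are all hypothetical.

```python
import math
from collections import defaultdict


def farthest_first_init(points, k):
    """Pick k starting centroids: the first point, then repeatedly the point
    farthest from every centroid chosen so far (deterministic)."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(nxt))
    return centroids


def kmeans(points, k, iters=20):
    """Plain k-means (a stand-in technique); returns one label per point."""
    centroids = farthest_first_init(points, k)
    labels = []
    for _ in range(iters):
        # Assignment step: each photo joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def rank_clusters(names, labels):
    """Group photo names by cluster label, largest cluster first."""
    groups = defaultdict(list)
    for name, lab in zip(names, labels):
        groups[lab].append(name)
    return sorted(groups.values(), key=len, reverse=True)


# Hypothetical descriptors for photos tagged with one brand (made-up values).
photos = {
    "watch_closeup_a": [0.90, 0.10],
    "watch_closeup_b": [0.85, 0.15],
    "watch_closeup_c": [0.95, 0.05],
    "watch_closeup_d": [0.88, 0.12],
    "horse_riding_a": [0.10, 0.90],
    "horse_riding_b": [0.15, 0.80],
    "horse_riding_c": [0.20, 0.85],
}

names = list(photos)
labels = kmeans(list(photos.values()), k=2)
for rank, members in enumerate(rank_clusters(names, labels), start=1):
    print(rank, members)
```

In a real system each descriptor would be a long feature vector computed from the image itself, and the number of clusters would not be known in advance. The toy data here is chosen so that the two groups separate cleanly.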

Kim emphasized that this work represents just the first step toward mining marketing data from images. But it also suggests some new directions and some additional applications of computer vision in electronic commerce. For instance, it may be possible to generate keywords from images people have posted and use those keywords to direct relevant advertisements to that individual, in much the same way sponsored search now does with text queries.

This research was supported by the National Science Foundation and by Google.

Byron Spice | 412-268-9068 | bspice@cs.cmu.edu