
The Sorting Image Sensor receives an optical image, ranks its pixels by input intensity, and assigns each pixel an index, forming what we call the "image of indices".
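The ranking step can be illustrated with a short Python sketch. It operates on already-digitized intensities, whereas the sensor sorts in the analog domain. The function name `image_of_indices`, the convention that index 1 is the dimmest pixel, and raster-order tie-breaking are assumptions for illustration, not details of the chip.

```python
def image_of_indices(image):
    """Map a 2-D list of intensities to a 2-D list of ranks 1..N.

    Assumptions: rank 1 = dimmest pixel; equal intensities keep raster order.
    """
    rows, cols = len(image), len(image[0])
    flat = [v for row in image for v in row]
    # Pixel positions ordered from dimmest to brightest; sorted() is stable,
    # so ties are broken by raster position.
    order = sorted(range(len(flat)), key=lambda i: flat[i])
    ranks = [0] * len(flat)
    for rank, pos in enumerate(order, start=1):
        ranks[pos] = rank
    # Reshape the flat rank list back into rows.
    return [ranks[r * cols:(r + 1) * cols] for r in range(rows)]

scene = [[0.2, 5.0],
         [0.01, 1.3]]
print(image_of_indices(scene))  # [[2, 4], [1, 3]]
```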

The values in the image of indices range from 1 to N, where N is the number of pixels in the array. Therefore, the image of indices never saturates, regardless of the dynamic range of the optical image. Since sorting is a global operation, each resulting index depends on the content of the entire scene rather than on the pixel's own intensity alone. This is what enables rapid adaptation to scenes of any dynamic range.
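A quick way to see why the indices cannot saturate: ranks depend only on the ordering of intensities, so scaling the scene by any gain, or applying any strictly increasing transform, leaves them unchanged, and they always span 1 to N. The sketch below checks this on a hypothetical four-pixel scene; the helper `ranks` is illustrative only and does not model the analog circuit.

```python
import math

def ranks(values):
    """Return 1-based ranks of a flat list of intensities (stable for ties)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0] * len(values)
    for rank, pos in enumerate(order, start=1):
        out[pos] = rank
    return out

dim_scene = [0.5, 2.0, 1.0, 8.0]                         # max/min ratio of 16
bright_scene = [v * 1e6 for v in dim_scene]              # same scene, 10^6 times brighter
log_compressed = [math.log10(v) for v in bright_scene]   # a strictly increasing transform

r = ranks(dim_scene)
print(r)                                                 # [1, 3, 2, 4]
print(ranks(bright_scene) == r, ranks(log_compressed) == r)  # True True
print(min(r), max(r))                                    # 1 4
```

For the 32 x 32 Ver.2 array, N is always 1024, whatever the scene's dynamic range.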

In the process of sorting pixels (in the analog domain), the sensor generates a voltage waveform representative of the scene's cumulative histogram. The cumulative histogram is a global scene descriptor, and the sensor reports it with low latency, before the image of indices is even read out.
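Digitally, the cumulative histogram at an intensity level is the number of pixels at or below that level. The sketch below evaluates it on a hypothetical threshold grid using the standard-library `bisect` module. The sensor itself produces this information as a continuous analog waveform; the ascending ordering, the threshold grid, and the function name `cumulative_histogram` are assumptions, and the mapping from waveform to histogram is not modeled here.

```python
from bisect import bisect_right

def cumulative_histogram(values, thresholds):
    """Number of pixels with intensity <= each threshold.

    A digital stand-in for the sensor's analog waveform; the threshold
    grid is an illustrative assumption.
    """
    s = sorted(values)
    # bisect_right gives the count of sorted values that are <= t.
    return [bisect_right(s, t) for t in thresholds]

pixels = [0.2, 5.0, 0.01, 1.3, 0.2, 40.0]
levels = [0.1, 1.0, 10.0, 100.0]
print(cumulative_histogram(pixels, levels))  # [1, 3, 5, 6]
```

The result is nondecreasing and reaches N at the brightest level, which is why it can serve as a compact global descriptor of the scene.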

The image of indices, together with the cumulative histogram, is all that is needed to recover optical images of any dynamic range.
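One way to see why these two outputs suffice: the cumulative histogram, read in reverse, maps an index k to the intensity at which k pixels have been counted, so looking up each pixel's index recovers its intensity. The sketch below assumes the histogram is available as sampled (level, count) pairs and fills the gaps between samples by linear interpolation. Both the function `recover_image` and the interpolation choice are illustrative assumptions, not necessarily how the project reconstructs images.

```python
def recover_image(indices, levels, counts):
    """Estimate intensities from an image of indices and a sampled
    cumulative histogram.

    levels: ascending intensity levels (hypothetical sampling grid)
    counts: counts[j] = number of pixels with intensity <= levels[j];
            nondecreasing, with counts[-1] == N
    """
    def intensity_of(k):
        for j, c in enumerate(counts):
            if c >= k:
                if c == k or j == 0:
                    return levels[j]
                # Linear interpolation between neighbouring samples (an assumption).
                c0, l0 = counts[j - 1], levels[j - 1]
                return l0 + (k - c0) * (levels[j] - l0) / (c - c0)
        raise ValueError("index exceeds histogram total")

    return [[intensity_of(k) for k in row] for row in indices]

indices = [[2, 4], [1, 3]]
# Histogram sampled at every pixel's intensity: exact recovery.
print(recover_image(indices, [0.01, 0.2, 1.3, 5.0], [1, 2, 3, 4]))
# [[0.2, 5.0], [0.01, 1.3]]
# Coarse two-point histogram: interpolated estimates.
print(recover_image(indices, [0.0, 10.0], [0, 4]))
# [[5.0, 10.0], [2.5, 7.5]]
```

Recovery is exact when the histogram is sampled at every distinct pixel intensity and no two pixels tie; on a coarser grid the interpolated values are only estimates.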

The project was originally sponsored by the Office of Naval Research. Currently, NSF supports investigation of novel on-chip signal encoding techniques that will make sorting-like adaptive imaging practical for many robotic applications.

You can learn more about the sorting sensor chips and the Intensity-to-Time Processing Paradigm, or view some experimental data and demos. Related publications are listed here.


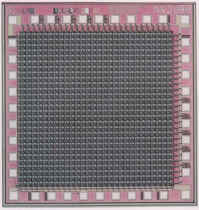

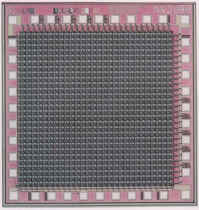

Sorting Sensor Ver.2


Pixel size: 60 µm × 60 µm

Array size: 32 × 32 pixels

Frame rate: self-adaptive

Technology: 2 µm CMOS

Die size: 2.2 mm × 2.2 mm
