Gus's research statement from a musician's perspective (view it in PDF)

A longer version from a computer scientist's perspective: http://www.cs.cmu.edu/~gxia/rs_long.html

Overview

As both a computer scientist and a musician, I design intelligent systems with artificial musicianship to understand and extend human musical expression. To understand means to model the musical expression conveyed through acoustic, gestural, and emotional signals. To extend means to use this understanding to create expressive, interactive, and autonomous agents. Such systems not only open new forms of music performance, serving both amateur and professional musicians, but also point to future directions of music appreciation, music education, and music therapy.

Some demos are available at: www.cs.cmu.edu/~gxia/demo.html .

Previous

achievements

To understand and extend musical expression,

the main obstacle for most current computer music systems is the lack of rich

musical representations and capabilities. My dissertation breaks through this

limitation by combining music domain knowledge with machine learning

techniques, where I create interactive artificial performers capable of

performing expressively in concert with human musicians by learning musicianship from rehearsal experience.

In particular, I consider pitch, timing, and

dynamics features of musical expression and model these features across different performers as co-evolving time series that vary

jointly with hidden mental states.

Then, I apply spectral learning, a state-of-the-art machine learning technique, to

discover the relationship between the high-dimensional features and the

low-dimensional hidden states using singular

value decomposition. Based on the trained model, the artificial performer

generates an expressive rendition of a given score by interacting with human

musicians.

Compared with the baseline, which assumes steady local tempo and dynamics and has been used for over 30 years, my method achieves four critical advances towards more human-like interaction. First, it improves the timing prediction by 50 milliseconds and dynamics prediction by 8 MIDI velocity units on average, trained on only 4 rehearsals of the same piece of music. This is very significant, as listeners can easily perceive asynchronous notes that differ by 30 milliseconds and dynamics differences of 4 MIDI velocity units. Second, it shows further improvements by learning from the same performer, which demonstrates the capability of the model to capture the style of individual performers. Third, these improvements carry over to different pieces in similar music styles, which demonstrates that my model is capable of generalizing the learned musicianship in new situations. Lastly, I extend artificial musical expression to include facial and body gestures using cutting-edge humanoid music robots.

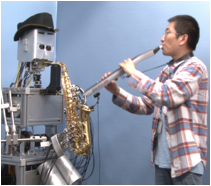

Figure 1. Human-robot

music interaction between the saxophone robot and the flutist (myself).

In summary, the system leverages learned musicianship

to extend human musical expression by playing collaboratively and generating

facial and body gestures. This is a big step towards artificial musical

interaction at a professional level. With further development of artificial

musicianship, such technology has the potential to fundamentally change how

people interact with music.

A future

direction for music performance

If we can build a cello robot and collect enough interactive performance data from Yo-Yo Ma, we can essentially "record" his style and create avatars of him. Such avatars can serve as personal music partners, even centuries after he retires. We can generalize this idea and imagine a personal robot orchestra composed of master players of different instruments, with which even amateur musicians can hold world-class solo concerts easily. Furthermore, we can incorporate one's performance style into interactive music toys or giant robots. To achieve these goals, we need richer features to represent more nuanced musical expression (such as sliding and vibrato) and more distributed algorithms to coordinate a large number of autonomous agents. But the underlying models and machine learning schemes are identical to my work on interactive artificial performers.

A future direction for music appreciation

A special case of human-robot interactive performance is to just duplicate human motions. In other words, we can "copy" a performance from a concert hall and adaptively "paste" it to our homes in order to appreciate "live" symphonies using robot orchestras. With this technology, audiences around the world can appreciate more vivid performances of BSO, where robot orchestras replace video simulcasts. To achieve this, robots will need to adapt their performances to different instrument qualities and acoustic environments; such adaptability can be gained through reinforcement learning.

A

future direction for music education and music therapy

Today, humans design algorithms to develop artificial musicianship; tomorrow, machines can help teach music to humans. My current work on human-robot interaction derives machine expression from human expression; we can reverse this process to explore how different teaching strategies affect the way students learn, since robot behaviors can be easily configured by a set of parameters. This can potentially uncover the mechanisms of music training and help us develop musicianship effectively. Also, an artificial tutor can give us feedback at any time, making music training a lot easier and potentially much cheaper.

My vision for unifying

music education and therapy is inspired by Eurhythmics,

a traditional music training method that focuses on the intrinsic

relationship between body movements and musical expression. For example, a

Eurhythmics instructor plays a tricky music segment on the piano; students are

asked to step on the downbeats while clapping on the upbeats to show their

mastery of a certain rhythm. This procedure of training rhythmic feeling can be

turned easily into a human-computer interaction, where computers can play the

piano part while evaluating the movements of the students. Moreover, this

method can be adapted to physical therapy. Compared with current approaches in

physical therapy for Parkinson's disease where doctors still use metronomes to

help patients recover their ability to walk smoothly, an interactive process

involving musical expression will be a huge

improvement.

Above all, I would like to carry on my research on music intelligence, to

build my music robots, and to share ideas on further evolving musical

expression with brilliant colleagues. Let's envision a more expressive,

creative, and interactive world, and make it so.