|

Journal of Artificial Intelligence Research, 28 (2007) 453-515. Submitted 8/06; published 4/07

© 2007 AI Access Foundation and Morgan Kaufmann Publishers. All rights reserved.

Bradley J. Clement

Edmund H. Durfee

Anthony C. Barrett

Jet Propulsion Laboratory, Mail Stop: 126-347

Pasadena, CA 91109 USA

brad.clement@jpl.nasa.gov

Electrical Engineering and Computer Science Department

University of Michigan,Ann Arbor, MI 48109 USA

durfee@umich.edu

Jet Propulsion Laboratory, Mail Stop: 126-347

Pasadena, CA 91109 USA

tony.barrett@jpl.nasa.gov

The judicious use of abstraction can help planning agents to identify key interactions between actions, and resolve them, without getting bogged down in details. However, ignoring the wrong details can lead agents into building plans that do not work, or into costly backtracking and replanning once overlooked interdependencies come to light. We claim that associating systematically-generated summary information with plans' abstract operators can ensure plan correctness, even for asynchronously-executed plans that must be coordinated across multiple agents, while still achieving valuable efficiency gains. In this paper, we formally characterize hierarchical plans whose actions have temporal extent, and describe a principled method for deriving summarized state and metric resource information for such actions. We provide sound and complete algorithms, along with heuristics, to exploit summary information during hierarchical refinement planning and plan coordination. Our analyses and experiments show that, under clearcut and reasonable conditions, using summary information can speed planning as much as doubly exponentially even for plans involving interacting subproblems.

Abstraction is a powerful tool for solving large-scale planning and scheduling problems. By abstracting away less critical details when looking at a large problem, an agent can find an overall solution to the problem more easily. Then, with the skeleton of the overall solution in place, the agent can work additional details into the solution [Sacerdoti 1974, Tsuneto, Hendler, Nau, 1998]. Further, when interdependencies are fully resolved at abstract levels, then one or more agents can flesh out sub-pieces of the abstract solution into their full details independently (even in parallel) in a ``divide-and-conquer'' approach [Korf, 1987, Lansky, 1990, Knoblock, 1991].

Unfortunately, it is not always obvious how best to abstract large, complex problems to achieve these efficiency improvements. An agent solving a complicated, many-step planning problem, for example, might not be able to identify which of the details in earlier parts will be critical for later ones until after it has tried to generate plans or schedules and seen what interdependencies end up arising. Even worse, if multiple agents are trying to plan or schedule their activities in a shared environment, then unless they have a lot of prior knowledge about each other, it can be extremely difficult for one agent to anticipate which aspects of its own planned activities are likely to affect, and be affected by, other agents.

In this paper, we describe a strategy that balances the benefits and risks of abstraction in large-scale single-agent and multi-agent planning problems. Our approach avoids the danger of ignoring important details that can lead to incorrect plans (whose execution will fail due to overlooked interdependencies) or to substantial backtracking when abstract decisions cannot be consistently refined. Meanwhile, our approach still achieves many of the computational benefits of abstraction so long as one or more of a number of reasonable conditions (listed later) holds.

The key idea behind our strategy is to annotate each abstract operator in a plan hierarchy with summary information about all of its potential needs and effects under all of its potential refinements. While this might sound contrary to the purpose of abstraction as reducing the number of details, in fact we show that it strikes a good balance. Specifically, because all of the possibly relevant conditions and effects are modeled, the agent or agents that are reasoning with abstract operators can be absolutely sure that important details cannot be overlooked. However, because the summary information abstracts away details about under which refinement choices conditions and effects will or will not be manifested, and information about the relative timing of when conditions are needed and effects achieved, it still often results in an exponential reduction in information compared to a flat representation.

Based on the concept of summary information, this paper extends the prior work summarized below and in Section 8 to make the following contributions:

This ability to coordinate at abstract levels rather than over detailed plans allows each of the agents to retain some local flexibility to refine its operators as best suits its current or expected circumstances without jeopardizing coordination or triggering new rounds of renegotiation. In this way, summary information supports robust execution systems such as PRS [Georgeff Lansky, 1986], UMPRS [Lee, Huber, Durfee, Kenny, 1994], RAPS [Firby, 1989], JAM [Huber, 1999], etc. that interleave the refinement of abstract plan operators with execution.

Our approach also extends plan coordination (plan merging) techniques [Georgeff, 1983, Lansky, 1990, Ephrati Rosenschein, 1994] by utilizing plan hierarchies and a more expressive temporal model. Prior techniques assume that actions are atomic, meaning that an action either executes before, after, or at exactly the same time as another. In contrast, we use interval point algebra [Vilain Kautz, 1986] to represent the possibility of several actions of one agent executing during the execution of one action of another agent. Because our algorithms can choose from alternative refinements in the HTN dynamically in the midst of plan coordination, they support interleaved local planning, multiagent coordination, and concurrent execution.

As a running example to motivate this work, consider a manufacturing plant where a production manager, a facilities manager, and an inventory manager each have their own goals with separately constructed hierarchical plans to achieve them. However, they still need to coordinate over the use of equipment, the availability of parts used in the manufacturing of other parts, storage for the parts, and the use of transports for moving parts around. The state of the factory is shown in Figure 1. In this domain, agents can produce parts using machines M1 and M2, service the machines with a tool, and move parts to and from the shipping dock and storage bins on the shop floor using transports. Initially, machines M1 and M2 are free for use, and the transports (transport1 and transport2), the tool, and all of the parts (A through E) shown in their storage locations are available.

The production manager is responsible for creating a part H

using machines M1 and M2. Either M1 and M2 can consume parts A and B

to produce G, and M2 can produce H from G. The production manager's

hierarchical plan for manufacturing H involves using the transports to

move the needed parts from storage to the input trays of the

machines, manufacturing G and H, and transporting H back to storage.

This plan is shown in Figure 2. Arcs through

subplan branches mean that all subplans must be executed.

Branches without arcs denote alternative choices

to achieving the parent's goal. The decomposition of ![]() is similar to that of

is similar to that of ![]() .

.

The facilities manager services each machine by equipping it

with a tool and then calibrating it. The machines are unavailable for

production while being serviced. The facilities manager's

hierarchical plan branches into choices of servicing the machines in

different orders and uses the transports for getting the tool from storage

to the machines (Figure 3). The decomposition of

![]() is similar to that of

is similar to that of ![]() .

.

The parts must be ``available'' on the space-limited shop floor in order for an agent to use them. Whenever an agent moves or uses a part, it becomes unavailable. The inventory manager's goal is just to move part C to the dock and move D and E into bins on the shop floor (shown in Figure 4).

To accelerate the coordination of their plans, each factory manager can analyze his hierarchical plan to derive summary information on how each abstract plan operator can affect the world. This information includes the summary pre-, post-, and in-conditions that intuitively correspond to the externally required preconditions, externally effective postconditions, and the internally required conditions, respectively, of the plan based on its potential refinements. Summary conditions augment state conditions with modal information about whether the conditions must or may hold and when they are in effect. Examples are given at the end of Section 3.2.

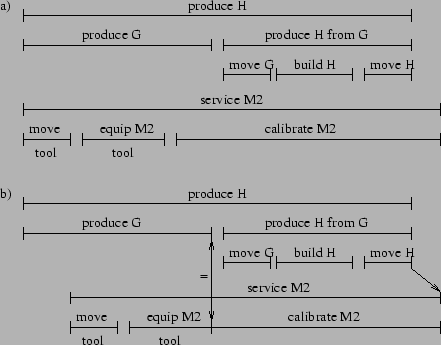

Once summary information is computed,

the production and inventory managers each could send this information

for their top-level plan to the facilities manager. The

facilities manager could then reason about the top-level summary

information for each of their plans to determine that if the

facilities manager serviced all of the machines before the production

manager started producing parts, and the production manager finished

before the inventory manager began moving parts on and off the dock,

then all of their plans can be executed (refined) in any

way, or ![]() .

Then the facilities manager could instruct the others to add communication

actions to their plans so that they synchronize their actions appropriately.

.

Then the facilities manager could instruct the others to add communication

actions to their plans so that they synchronize their actions appropriately.

This top-level solution maximizes robustness in that the choices in

the production and facilities managers' plans are preserved, but the

solution is inefficient because there is no concurrent activity--only

one manager is executing its plan at any time. The production manager

might not want to wait for the facilities manager to finish maintenance and could negotiate for a solution

with more concurrency. In that case, the facilities manager could

determine that they could not overlap their plans in any way without risking

conflict (![]() ).

However, the summary information could tell them

that there might be some way to overlap their plans

(

).

However, the summary information could tell them

that there might be some way to overlap their plans

(![]() ),

suggesting that a search for a solution with more concurrency (at the cost of perhaps committing to specific refinement choices) has hope of success.

In this case, the facilities manager could request the production manager for

the summary information of each of

),

suggesting that a search for a solution with more concurrency (at the cost of perhaps committing to specific refinement choices) has hope of success.

In this case, the facilities manager could request the production manager for

the summary information of each of ![]() 's subplans, reason

about the interactions of lower level actions in the same way, and

find a way to synchronize the subplans for a more fine-grained

solution where the plans are executed more concurrently. We give an algorithm for finding such solutions in Section 5.

's subplans, reason

about the interactions of lower level actions in the same way, and

find a way to synchronize the subplans for a more fine-grained

solution where the plans are executed more concurrently. We give an algorithm for finding such solutions in Section 5.

We first formally define a model of a concurrent hierarchical plan, its execution, and its interactions (Section 2). Next, we describe summary information for propositional states and metric resources, mechanisms determining whether particular interactions must or may hold based on this information, and algorithms for deriving the information (Section 3). Built upon these algorithms are others for using summary information to determine whether a set of CHiPs must or might execute successfully under a set of ordering constraints (Section 4). These in turn are used within a sound and complete multilevel planning/coordination algorithm that employs search techniques and heuristics to efficiently navigate and prune the search space during refinement (Section 5). We then show how planning, scheduling, or coordinating at abstract levels can exponentially improve the performance of search and execution (Section 6). We provide experimental results demonstrating that the search techniques also greatly reduce the search for optimal solutions (Section 7). Finally, in Section 8 we differentiate our approach from related work that we did not mention elsewhere and conclude.

A representation of temporal extent in an HTN is important not only for modeling concurrently executing agents but also for performing abstract reasoning with summary information. If an agent is scheduling abstract actions and can only sequentially order them, it will be severely restricted in the kinds of solutions it can find. For example, the agent may prefer solutions with shorter makespans, and should seek plans with subthreads that can be carried out concurrently.

In this section we define concurrent hierarchical plans (CHiPs), how the state changes over time based on their executions, and concepts of success and failure of executions in a possible world, or history. Because we later define summary information and abstract plan interactions in terms of the definitions and semantics given in this section, the treatment here is fairly detailed (though for an even more comprehensive treatment, see [Clement, 2002]). However, we begin by summarizing the main concepts and notation introduced, to give the reader the basic gist.

A CHiP (or plan

![]() ) is mainly differentiated from an HTN by including in its

definition inconditions,

) is mainly differentiated from an HTN by including in its

definition inconditions, ![]() ,

(sometimes called ``during conditions'') that affect (or assert a

condition on) the state just after the start time of

,

(sometimes called ``during conditions'') that affect (or assert a

condition on) the state just after the start time of ![]() (

(![]() ) and

must hold throughout the duration of

) and

must hold throughout the duration of ![]() . Preconditions (

. Preconditions (![]() )

must hold at the start, and postconditions (

)

must hold at the start, and postconditions (![]() ) are asserted at

the finish time of

) are asserted at

the finish time of ![]() (

(![]() ). Metric resource (

). Metric resource (![]() ) consumption

(

) consumption

(![]() ) is instantaneous at the start time and, if the resource

is defined as non-consumable,

is instantaneously restored at the end. The decompositions of

) is instantaneous at the start time and, if the resource

is defined as non-consumable,

is instantaneously restored at the end. The decompositions of ![]() (

(![]() ) is in the

style of

) is in the

style of ![]() /

/![]() tree, having either a partial ordering

(

tree, having either a partial ordering

(![]() ) or a choice of child tasks that each can have their own

conditions.

) or a choice of child tasks that each can have their own

conditions.

An execution ![]() of

of ![]() is an instantiation of its start

time, end time, and decomposition. That is, an execution nails down

exactly what is done and when. In order to reason about plan

interactions, we can quantify over possible histories, where each history

corresponds to a combination of possible executions of the

concurrently-executing CHiPs for a partial ordering over their

activities and in the context of an initial state.

A run (

is an instantiation of its start

time, end time, and decomposition. That is, an execution nails down

exactly what is done and when. In order to reason about plan

interactions, we can quantify over possible histories, where each history

corresponds to a combination of possible executions of the

concurrently-executing CHiPs for a partial ordering over their

activities and in the context of an initial state.

A run (![]() ) specifies the state at

time

) specifies the state at

time ![]() for history

for history ![]() .

.

Achieve, clobber, and undo interactions are

defined in terms of when the executions of some plans assert a positive literal

![]() or negative literal

or negative literal ![]() relative to when

relative to when ![]() is required by another

plan's execution for a history. By looking at the literals achieved, clobbered,

and undone in the set of executions in

a history, we can identify the conditions that must hold prior to the

executions in the history as external preconditions and those that must hold after

all of the executions in the history as external postconditions.

is required by another

plan's execution for a history. By looking at the literals achieved, clobbered,

and undone in the set of executions in

a history, we can identify the conditions that must hold prior to the

executions in the history as external preconditions and those that must hold after

all of the executions in the history as external postconditions.

The value of a metric resource at time ![]() (

(![]() ) is

calculated by subtracting from the prior state value the usage of all

plans that start executing at

) is

calculated by subtracting from the prior state value the usage of all

plans that start executing at ![]() and (if non-consumable) adding back

usages of all that end at

and (if non-consumable) adding back

usages of all that end at ![]() . An execution

. An execution ![]() of

of ![]() fails if a

condition that is required or asserted at time

fails if a

condition that is required or asserted at time ![]() is not in the state

is not in the state ![]() at

at ![]() , or if the value of a resource (

, or if the value of a resource (![]() ) used by the plan

is over or under its limits during the execution.

) used by the plan

is over or under its limits during the execution.

In the remainder of this section, we give more careful, detailed descriptions of the concepts above, to ground these definitions in firm semantics; the more casual reader can skim over these details if desired. It is also important to note that, rather than starting from scratch, our formalization weaves together, and when necessary augments, appropriate aspects of other theories, including Allen's temporal plans allen:83b, Georgeff's theory for multiagent plans georgeff:84, and Fagin et al.'s theory for multiagent reasoning about knowledge RAK.

A concurrent hierarchical plan ![]() is a tuple

is a tuple ![]() ,

, ![]() ,

,

![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() .

. ![]() ,

, ![]() , and

, and

![]() are sets of literals (

are sets of literals (![]() or

or ![]() for some propositional

variable

for some propositional

variable ![]() ) representing the preconditions, inconditions, and

postconditions defined for plan

) representing the preconditions, inconditions, and

postconditions defined for plan ![]() .1

.1

We borrow an existing model of metric resources [Chien, Rabideu, Knight, Sherwood, Engelhardt, Mutz,

Estlin, Smith, Fisher, Barrett, Stebbins, Tran, 2000b, Laborie Ghallab, 1995].

A plan's ![]() is a function mapping from resource variables to an amount used. We write

is a function mapping from resource variables to an amount used. We write ![]() to indicate the amount

to indicate the amount ![]() uses of resource

uses of resource ![]() and sometimes treat

and sometimes treat ![]() as a set of pairs

as a set of pairs ![]() .

A metric resource

.

A metric resource ![]() is a tuple

is a tuple ![]() ,

, ![]() ,

, ![]() .

The min and max values can be integer or real

values representing bounds on the capacity or amount available. The

.

The min and max values can be integer or real

values representing bounds on the capacity or amount available. The ![]() of the resource is either consumable or

non-consumable.

For example, fuel

and battery energy are consumable resources because, after use, they

are depleted by some amount. A non-consumable resource is available

after use (e.g. vehicles, computers, power).

of the resource is either consumable or

non-consumable.

For example, fuel

and battery energy are consumable resources because, after use, they

are depleted by some amount. A non-consumable resource is available

after use (e.g. vehicles, computers, power).

Domain modelers typically only specify state conditions and resource usage for primitive actions

in a hierarchy. Thus, the conditions and usage of a CHiP are used to derive summary conditions,

as we describe in Section 3.4, so that algorithms can reason about any action in the hierarchy.

In order to reason about plan hierarchies as

and/or trees of actions, the ![]() of plan

of plan ![]() , or

, or ![]() , is given

a value of either

, is given

a value of either ![]() ,

, ![]() , or

, or ![]() . An

. An ![]() plan is a

non-primitive plan that is accomplished by carrying out all of its

subplans. An

plan is a

non-primitive plan that is accomplished by carrying out all of its

subplans. An ![]() plan is a non-primitive plan that is accomplished

by carrying out exactly one of its subplans. So,

plan is a non-primitive plan that is accomplished

by carrying out exactly one of its subplans. So, ![]() is a set of

plans, and a

is a set of

plans, and a ![]() plan's

plan's ![]() is the empty set.

is the empty set.

![]() is only defined for an

is only defined for an ![]() plan

plan ![]() and is a consistent set of

temporal relations [Allen, 1983] over pairs of subplans. Plans left unordered

with respect to each other are interpreted to potentially execute

concurrently.

and is a consistent set of

temporal relations [Allen, 1983] over pairs of subplans. Plans left unordered

with respect to each other are interpreted to potentially execute

concurrently.

The decomposition of a CHiP is in the same style as that of an HTN as

described by erol:94. An ![]() plan is a

task network, and an

plan is a

task network, and an ![]() plan is an extra construct representing a

set of all methods that accomplish the same goal or compound task.

A network of tasks corresponds to the subplans of a plan.

plan is an extra construct representing a

set of all methods that accomplish the same goal or compound task.

A network of tasks corresponds to the subplans of a plan.

For the example in Figure 2, the

production manager's highest level plan ![]() (Figure 2) is the tuple

(Figure 2) is the tuple

![]() In

In ![]() (0,1), 0 and 1 are indices of the subplans in the

decomposition referring to

(0,1), 0 and 1 are indices of the subplans in the

decomposition referring to ![]() and

and ![]() respectively. There are no conditions defined because

respectively. There are no conditions defined because

![]() can rely on the conditions defined for the primitive

plans in its refinement. The plan for moving part A from bin1 to the

first input tray of M1 using transport1 is the tuple

can rely on the conditions defined for the primitive

plans in its refinement. The plan for moving part A from bin1 to the

first input tray of M1 using transport1 is the tuple

![]() This plan decomposes into two half moves which help capture important

intermediate effects. The parent orders its children with the

This plan decomposes into two half moves which help capture important

intermediate effects. The parent orders its children with the ![]() relation

to bind them together into a single move.

The

relation

to bind them together into a single move.

The ![]() plan is

plan is

The

The ![]() plan is

plan is

We split the move plan into these

two parts in order to ensure that no other action that executes

concurrently with this one can use transport1, part A, or the input tray

to M1. It would be incorrect to instead specify

We split the move plan into these

two parts in order to ensure that no other action that executes

concurrently with this one can use transport1, part A, or the input tray

to M1. It would be incorrect to instead specify ![]() (transport1) as an incondition to a

single plan because another agent could, for instance, use transport1 at the

same time because its

(transport1) as an incondition to a

single plan because another agent could, for instance, use transport1 at the

same time because its ![]() (transport1) incondition would agree with

the

(transport1) incondition would agree with

the ![]() (transport1) incondition of this move action.

However, the specification here is still insufficient since two pairs of (

(transport1) incondition of this move action.

However, the specification here is still insufficient since two pairs of (![]() ,

, ![]() ) actions could start and end at the same time without conflict. We can get around this by only allowing the planner to reason about the

) actions could start and end at the same time without conflict. We can get around this by only allowing the planner to reason about the ![]() and its parent plans, in effect, hiding the transition between the start and finish actions.

So, by

representing the transition from

and its parent plans, in effect, hiding the transition between the start and finish actions.

So, by

representing the transition from ![]() to

to ![]() without knowing when that transition will take place

the modeler ensures that

another move plan that tries to use transport1 concurrently with this one

will cause a conflict.2

without knowing when that transition will take place

the modeler ensures that

another move plan that tries to use transport1 concurrently with this one

will cause a conflict.2

A postcondition is required for each incondition to specify whether the incondition changes. This clarifies the semantics of inconditions as conditions that hold only during plan execution whether because they are caused by the action or because they are necessary conditions for successful execution.

Informally, an execution of a CHiP is recursively defined as an instance of a decomposition and an ordering of its subplans' executions. Intuitively, when executing a plan, an agent chooses the plan's start time and how it is refined, determining at what points in time its conditions must hold, and then witnesses a finish time. The formalism helps us reason about the outcomes of different ways to execute a group of plans, describe state transitions, and define summary information.

An execution ![]() of CHiP

of CHiP ![]() is a tuple

is a tuple ![]() .

. ![]() and

and ![]() are positive, non-zero

real numbers representing the start and finish times of execution

are positive, non-zero

real numbers representing the start and finish times of execution ![]() ,

and

,

and ![]() . Thus, instantaneous actions are not explicitly represented.

. Thus, instantaneous actions are not explicitly represented. ![]() is a set of subplan executions representing

the decomposition of plan

is a set of subplan executions representing

the decomposition of plan ![]() under this execution

under this execution ![]() . Specifically,

if

. Specifically,

if ![]() is an

is an ![]() plan, then it contains exactly one execution from each of

the subplans; if it is an

plan, then it contains exactly one execution from each of

the subplans; if it is an ![]() plan, then it contains only one

execution of one of the subplans; and it is empty if it is

plan, then it contains only one

execution of one of the subplans; and it is empty if it is

![]() . In addition, for all subplan executions,

. In addition, for all subplan executions, ![]() ,

,

![]() and

and ![]() must be consistent with the relations

specified in

must be consistent with the relations

specified in ![]() . Also, the first subplan(s) to start must

start at the same time as

. Also, the first subplan(s) to start must

start at the same time as ![]() ,

, ![]() , and the last

subplan(s) to finish must finish at the same time as

, and the last

subplan(s) to finish must finish at the same time as ![]() ,

,

![]() . The possible executions of a plan

. The possible executions of a plan ![]() is the set

is the set

![]()

![]() that includes all possible instantiations of an

execution of

that includes all possible instantiations of an

execution of ![]() , meaning all possible values of the tuple

, meaning all possible values of the tuple ![]() , obeying the rules just stated.

, obeying the rules just stated.

For the example in Section 1.1, an execution for the

production manager's top-level plan ![]() would be some

would be some

![]()

![]() .

. ![]() might be

might be ![]() ,

,

![]() , 2.0, 9.0

, 2.0, 9.0 ![]() where

where ![]()

![]() ,

and

,

and ![]()

![]() . This means that the execution

of

. This means that the execution

of ![]() begins at

time 2.0 and ends at time 9.0.

begins at

time 2.0 and ends at time 9.0.

For convenience, the subexecutions of an execution ![]() , or

, or

![]() , is defined recursively as the set of

subplan executions in

, is defined recursively as the set of

subplan executions in ![]() 's decomposition unioned with their

subexecutions.

's decomposition unioned with their

subexecutions.

An agent reasoning about summary information to make planning decisions at abstract levels needs to first be able to reason about CHiPs. In this section we complete the semantics of CHiPs by describing how they affect the state over time. Because an agent can execute a plan in many different ways and in different contexts, we need to be able to quantify over possible worlds (or histories) where agents fulfill their plans in different ways. After defining a history, we define a run as the transformation of state over time as a result of the history of executions. The formalization of histories and runs follows closely to that of RAK in describing multiagent execution.

A state of a world, ![]() , is a truth

assignment to a set of propositions, each representing an aspect of

the environment. We will refer to the state as the set of true

propositional variables.

A history,

, is a truth

assignment to a set of propositions, each representing an aspect of

the environment. We will refer to the state as the set of true

propositional variables.

A history, ![]() , is a tuple

, is a tuple ![]() .

. ![]() is the

set of all

plan executions of all agents occurring in

is the

set of all

plan executions of all agents occurring in ![]() , and

, and ![]() is the initial state of

is the initial state of ![]() before any plan begins executing. So, a history

before any plan begins executing. So, a history ![]() is a hypothetical

world that begins with

is a hypothetical

world that begins with ![]() as the initial state and where only

executions in

as the initial state and where only

executions in ![]() occur. In particular, a history for the manufacturing domain

might have an initial state as shown in

Figure 1 where all parts and machines are available,

and both transports are free. The set of executions

occur. In particular, a history for the manufacturing domain

might have an initial state as shown in

Figure 1 where all parts and machines are available,

and both transports are free. The set of executions ![]() would contain

the execution of

would contain

the execution of ![]() ,

, ![]() ,

, ![]() , and their subexecutions.

, and their subexecutions.

A run, ![]() , is a function mapping a history and time point to states. It gives a

complete description of how the state of the world evolves over time,

where time ranges over the positive real numbers.

, is a function mapping a history and time point to states. It gives a

complete description of how the state of the world evolves over time,

where time ranges over the positive real numbers.

Axiom 1 states that the

world is in the initial state at time zero.

Axiom 2 states that a predicate ![]() is true at time

is true at time ![]() if it was already true beforehand, or a plan asserts

if it was already true beforehand, or a plan asserts ![]() with an incondition or postcondition at

with an incondition or postcondition at ![]() , but (in either case) no plan asserts

, but (in either case) no plan asserts ![]() at

at ![]() . If a plan starts at

. If a plan starts at ![]() , then its inconditions are asserted right after the start,

, then its inconditions are asserted right after the start, ![]() , where

, where ![]() is a small positive real number.

Axiom 2 also indicates that both inconditions and postconditions are effects.

is a small positive real number.

Axiom 2 also indicates that both inconditions and postconditions are effects.

The state of a resource

is a level value (integer or real). For consumable resource

usage, a task that depletes a resource is modeled to instantaneously

deplete the resource (subtract ![]() from the current state) at the

start of the task by the full amount. For non-consumable resource

usage, a task also depletes the usage amount at the start of the

task, but the usage is restored (added back to the resource state) at

the end of execution. A task can replenish a resource by having a

negative

from the current state) at the

start of the task by the full amount. For non-consumable resource

usage, a task also depletes the usage amount at the start of the

task, but the usage is restored (added back to the resource state) at

the end of execution. A task can replenish a resource by having a

negative ![]() . We will refer to the level of a resource

. We will refer to the level of a resource ![]() at time

at time ![]() in a history

in a history ![]() as

as ![]() . Axioms 3

and 4 describe these calculations for consumable and

non-consumable resources, respectively.

. Axioms 3

and 4 describe these calculations for consumable and

non-consumable resources, respectively.

Now that we have described how CHiPs change the state, we can specify the conditions under which an execution succeeds or fails. As stated formally in Definition 1, an execution succeeds if: the plan's preconditions are met at the start; the postconditions are met at the end; the inconditions are met throughout the duration (not including the start or end); all used resources stay within their value limits throughout the duration; and all executions in the decomposition succeed. Otherwise, the execution fails.

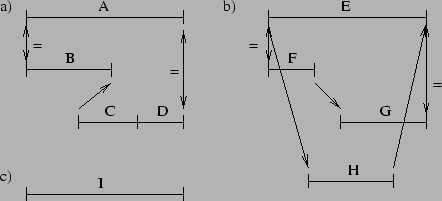

Conventional planning literature often speaks of clobbering and achieving preconditions of plans [Weld, 1994]. In CHiPs, these notions are slightly different since inconditions can clobber and be clobbered, as seen in the previous section. Formalizing these concepts and another, undoing postconditions, helps us define summary conditions (in Section 3.2). However, it will be convenient to define first what it means to assert a condition. Figure 5 gives examples of executions involved in these interactions, and we define these terms as follows:

Definition 2 states that an execution ![]() in a history

in a history ![]() asserts a literal at time

asserts a literal at time ![]() if that literal is an effect of

if that literal is an effect of ![]() that holds in the state at

that holds in the state at ![]() .

Note that that from this point on, beginning in Definition 3, we use

brackets [ ] as a shorthand when defining similar terms and procedures.

For example, saying ``[

.

Note that that from this point on, beginning in Definition 3, we use

brackets [ ] as a shorthand when defining similar terms and procedures.

For example, saying ``[![]() ,

, ![]() ] implies [

] implies [![]() ,

, ![]() ]'' means

]'' means ![]() implies

implies ![]() , and

, and ![]() implies

implies ![]() . This shorthand will help us avoid repetition,

at the cost of slightly more difficult parsing.

. This shorthand will help us avoid repetition,

at the cost of slightly more difficult parsing.

So, an execution achieves or clobbers a precondition if it is the last

(or one of the last) to assert the condition or its negation

(respectively) before it is required. Likewise, an execution undoes a postcondition if it is the first (or one of the first) to assert the negation of the condition after the condition is asserted. An execution ![]() clobbers an

incondition or postcondition of

clobbers an

incondition or postcondition of ![]() if

if ![]() asserts the negation of

the condition during or at the end (respectively) of

asserts the negation of

the condition during or at the end (respectively) of ![]() .

Achieving effects (inconditions and postconditions) does not make sense for this

formalism, so it is not defined.

Figure 5 shows different ways an execution

.

Achieving effects (inconditions and postconditions) does not make sense for this

formalism, so it is not defined.

Figure 5 shows different ways an execution ![]() achieves, clobbers, and undoes an execution

achieves, clobbers, and undoes an execution ![]() .

. ![]() and

and ![]() point to where they are asserted or required to be met.

point to where they are asserted or required to be met.

As recognized by tsuneto:98, external conditions are important

for reasoning about potential refinements of abstract plans. Although

the basic idea is the same, we define them a little differently and call

them external preconditions to differentiate them from other

conditions that we call external postconditions. Intuitively, an

external precondition of a group of partially ordered plans is a

precondition of one of the plans that is not achieved by another in

the group and must be met external to the group. External

postconditions, similarly, are those that are not undone by plans in

the group and are net effects of the group.

Definition 6 states that ![]() is an

external [pre, post]condition of an execution

is an

external [pre, post]condition of an execution ![]() if

if ![]() is a

[pre, post]condition of a subplan for which it is not [achieved,

undone] by some other subplan.

is a

[pre, post]condition of a subplan for which it is not [achieved,

undone] by some other subplan.

For the example in Figure 2, ![]() is not an external precondition because, although G must exist to produce H, G is supplied by the execution of the

is not an external precondition because, although G must exist to produce H, G is supplied by the execution of the

![]() plan. Thus,

plan. Thus, ![]() is met internally,

making

is met internally,

making ![]() an internal condition.

an internal condition.

![]() is an external precondition, an

internal condition, and an external postcondition because it is needed

externally and internally; it is an effect of

is an external precondition, an

internal condition, and an external postcondition because it is needed

externally and internally; it is an effect of ![]() which releases M1 when it is finished; and no other plan in the

decomposition undoes this effect.

which releases M1 when it is finished; and no other plan in the

decomposition undoes this effect.

Summary information can be used to find abstract solutions that are guaranteed to succeed no matter how they are refined because the information describes all potential conditions of the underlying decomposition. Thus, some commitments to particular plan choices, whether for a single agent or between agents, can be made based on summary information without worrying that deeper details lurk beneath that will doom the commitments. While HTN planners have used abstract conditions to guide search []<e.g.,>sacerdoti:74,tsuneto:98, they rely on a user-defined subset of constraints that can only help detect some potential conflicts. In contrast, summary information can be used to identify all potential conflicts.

Having the formalisms of the previous section, we can now define summary information and describe a method for computing it for non-primitive plans (in Section 3.4). Because there are many detailed definitions and algorithms in this section, we follow the same structure here as in the previous section, where we first give a more informal overview of the key concepts and notation, into which we then subsequently delve more systematically.

The

summary information of plan ![]() consists of summary pre-, in-, and

postconditions (

consists of summary pre-, in-, and

postconditions (![]() ,

, ![]() ,

, ![]() ),

summary resource usage (

),

summary resource usage (![]() ) for each resource

) for each resource ![]() ,

and whether the plan can be executed in any way successfully

(

,

and whether the plan can be executed in any way successfully

(![]() ).

).

A summary condition (whether pre, post, or in) specifies not only a positive

or negated literal, but additional modal information.

Each summary condition has an associated ![]() , whose value is either

, whose value is either ![]() or

or ![]() depending

on whether it must hold for all possible decompositions of the abstract operator

or just may hold depending on which decomposition is chosen. The

depending

on whether it must hold for all possible decompositions of the abstract operator

or just may hold depending on which decomposition is chosen. The

![]() of a summary condition is either

of a summary condition is either ![]() ,

, ![]() ,

, ![]() ,

or

,

or ![]() , specifying when the condition must hold in the plan's

interval of execution.

A plan

, specifying when the condition must hold in the plan's

interval of execution.

A plan ![]() must [achieve, clobber] summary

precondition

must [achieve, clobber] summary

precondition ![]() of

of ![]() if the execution of

if the execution of ![]() (or that of any plan with the same summary information) would [achieve, clobber] a condition summarized by

(or that of any plan with the same summary information) would [achieve, clobber] a condition summarized by ![]() (or any plan with the same summary information as

(or any plan with the same summary information as ![]() ).

).

The algorithm for deriving summary conditions for plan ![]() takes as

input the summary conditions of the immediate subplans of

takes as

input the summary conditions of the immediate subplans of ![]() and the

conditions defined for the CHiP

and the

conditions defined for the CHiP ![]() . The pre-, in-, and

postconditions of

. The pre-, in-, and

postconditions of ![]() become must first, must always, and must last

summary conditions, respectively. The algorithm retains the existence

and timing of subplan summary conditions in the parent depending on

whether the conditions are achieved, clobbered, or undone by siblings,

whether the decomposition is

become must first, must always, and must last

summary conditions, respectively. The algorithm retains the existence

and timing of subplan summary conditions in the parent depending on

whether the conditions are achieved, clobbered, or undone by siblings,

whether the decomposition is ![]() or

or ![]() , whether the subplan is

ordered first or last, and whether all subplans share the same

condition. Subplan first, always, and last conditions can become

sometimes conditions in the parent. The parent is computed as

, whether the subplan is

ordered first or last, and whether all subplans share the same

condition. Subplan first, always, and last conditions can become

sometimes conditions in the parent. The parent is computed as

![]() as long as all subplans are

as long as all subplans are ![]() , no subplan may

clobber a summary condition of another, and summarized resources do

not violate limits.

, no subplan may

clobber a summary condition of another, and summarized resources do

not violate limits.

We represent summary resource usage as three value ranges,

![]() ,

, ![]() ,

, ![]() ,

where the resource's local usage occurs within the task's execution,

and the persistent usage represents the usage that lasts after the

task terminates for depletable resources. The summarization algorithm

for an abstract task takes the summary resource usages of its

subtasks, considers all legal orderings of the subtasks, and all

possible usages for all subintervals within the interval of the

abstract task, to build multiple usage profiles. These profiles are

combined with algorithms for computing parallel, sequential, and

disjunctive usages to give the summary usage of the parent

task.

,

where the resource's local usage occurs within the task's execution,

and the persistent usage represents the usage that lasts after the

task terminates for depletable resources. The summarization algorithm

for an abstract task takes the summary resource usages of its

subtasks, considers all legal orderings of the subtasks, and all

possible usages for all subintervals within the interval of the

abstract task, to build multiple usage profiles. These profiles are

combined with algorithms for computing parallel, sequential, and

disjunctive usages to give the summary usage of the parent

task.

The summary information for a plan ![]() ,

, ![]() ,

is a tuple

,

is a tuple ![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() , whose

members are sets of summary conditions, summarized resource usage, and a

, whose

members are sets of summary conditions, summarized resource usage, and a ![]() flag indicating whether the plan will execute consistently internally.

flag indicating whether the plan will execute consistently internally.

![]() and

and

![]() are summary pre- and postconditions, which are the external pre- and postconditions of

are summary pre- and postconditions, which are the external pre- and postconditions of ![]() , respectively.

The summary inconditions of

, respectively.

The summary inconditions of ![]() ,

, ![]() , contain all

conditions that must hold within some execution of

, contain all

conditions that must hold within some execution of ![]() for it to be

successful. A condition

for it to be

successful. A condition ![]() in one of these sets is a tuple

in one of these sets is a tuple

![]() .

. ![]() is the literal of

is the literal of ![]() .

The

.

The ![]() of

of ![]() can be

can be ![]() or

or ![]() . If

. If

![]() , then

, then ![]() is called a

is called a ![]() condition because

condition because

![]() must hold for every successful plan execution.

For convenience we usually write

must hold for every successful plan execution.

For convenience we usually write ![]() .

. ![]() is a

is a ![]() condition (

condition (![]() is

is ![]() ) if

) if ![]() must hold for some successful execution.

must hold for some successful execution.

The ![]() of a summary condition

of a summary condition ![]() can

either be

can

either be ![]() ,

, ![]() ,

, ![]() , or

, or ![]() .

. ![]() is

is ![]() for

for ![]() if

if ![]() is an incondition that

must hold throughout all potential executions of

is an incondition that

must hold throughout all potential executions of ![]() (

(![]() holds

always); otherwise,

holds

always); otherwise, ![]() meaning

meaning ![]() holds at one point, at least, within an execution of

holds at one point, at least, within an execution of ![]() .

So, an

.

So, an ![]() condition is

condition is ![]() , and we do not define

, and we do not define ![]()

![]() inconditions because whether it is

inconditions because whether it is ![]() because of existence or timing, it is not significantly different from

because of existence or timing, it is not significantly different from ![]()

![]() in how a planner reasons about it. Whether a condition is

in how a planner reasons about it. Whether a condition is ![]()

![]() (however defined) or

(however defined) or ![]()

![]() , another plan can only have a

, another plan can only have a ![]()

![]() relationship with the condition (as defined in Section 3.3).

Note also that the incondition of a CHiP has the restricted meaning of a

relationship with the condition (as defined in Section 3.3).

Note also that the incondition of a CHiP has the restricted meaning of a ![]()

![]() summary incondition.

The

summary incondition.

The

![]() is

is ![]() for

for ![]() if

if ![]() holds at the

beginning of an execution of

holds at the

beginning of an execution of ![]() ; otherwise,

; otherwise, ![]() .

Similarly,

.

Similarly, ![]() is

is ![]() for

for ![]() if

if ![]() is

asserted at the end of a successful execution of

is

asserted at the end of a successful execution of ![]() ; otherwise, it is

; otherwise, it is ![]() .

Although

.

Although ![]() and

and ![]() syntactically only take one value,

semantically

syntactically only take one value,

semantically ![]() , and

, and ![]() .

.

We considered using modal logic operators to describe these concepts. While a mix of existing temporal logic and dynamic logic [Pratt, 1976] notation could be forced to work, we found that using our own terminology made definitions much simpler. We discuss this more at the end of Section 8.

Definitions 7, 8, and 9 give the formal semantics of ![]() and

and ![]() for a few representative condition types.

Summary conditions of a plan are defined recursively in that they

depend on the summary conditions of the plan's immediate subplans

instead of its complete decomposition.

Because a single description of summary information could represent

many different plan hierarchies, we quantify over plans

for a few representative condition types.

Summary conditions of a plan are defined recursively in that they

depend on the summary conditions of the plan's immediate subplans

instead of its complete decomposition.

Because a single description of summary information could represent

many different plan hierarchies, we quantify over plans ![]() , whose

subplans have the same summary information as those of the plan

, whose

subplans have the same summary information as those of the plan ![]() being summarized.

We could have defined the existence and timing

properties of conditions based on the entire hierarchy, but in doing

so, deriving summary conditions would be as expensive as solving the

planning problem, and one of the main purposes of summary information

is to reduce the computation of the planning problem. The reason why

it would be so expensive is that in the worst case all legal orderings of all plan steps must be explored to determine whether a

condition is

being summarized.

We could have defined the existence and timing

properties of conditions based on the entire hierarchy, but in doing

so, deriving summary conditions would be as expensive as solving the

planning problem, and one of the main purposes of summary information

is to reduce the computation of the planning problem. The reason why

it would be so expensive is that in the worst case all legal orderings of all plan steps must be explored to determine whether a

condition is ![]() or

or ![]() . We will discuss an example of this at the end of this subsection.

. We will discuss an example of this at the end of this subsection.

Definition 7 states that a ![]() precondition of

precondition of ![]() is an external

precondition that is always required at the beginning of the execution of any

is an external

precondition that is always required at the beginning of the execution of any

![]() that has the same conditions as

that has the same conditions as ![]() and the same summary information and ordering for its subplans as

and the same summary information and ordering for its subplans as ![]() .

A last postcondition is always asserted at the end of the execution

(substitute ``pre'' with ``post'' and

.

A last postcondition is always asserted at the end of the execution

(substitute ``pre'' with ``post'' and ![]() with

with ![]() in the last two lines of Definition 7). A

[must,may] sometimes precondition is a [must,may] external precondition

that is not a

in the last two lines of Definition 7). A

[must,may] sometimes precondition is a [must,may] external precondition

that is not a ![]() precondition. A sometimes postcondition

is defined similarly.

Definition 8 states that a literal

precondition. A sometimes postcondition

is defined similarly.

Definition 8 states that a literal ![]() is a

is a ![]() ,

, ![]() incondition of a plan

incondition of a plan ![]() if at any time during any isolated execution of any

if at any time during any isolated execution of any ![]() with the same summary information as

with the same summary information as ![]() , some executing plan

, some executing plan ![]() has incondition

has incondition ![]() .

Definition 9 states that a

[must, may] sometimes incondition of plan

.

Definition 9 states that a

[must, may] sometimes incondition of plan ![]() is a condition that is required during [any, some] execution of [any, some] plan

is a condition that is required during [any, some] execution of [any, some] plan ![]() that has the same summary information and ordering for its subplans as

that has the same summary information and ordering for its subplans as ![]() .

.

The ![]() flag is a boolean indicating whether the plan (or any

plan with the same summary information and ordering for subplans)

would execute successfully no matter how it was decomposed and no matter when

its subplans were executed. Definition 10 says that all

possible executions will succeed for a consistent plan. This is very similar to the

flag is a boolean indicating whether the plan (or any

plan with the same summary information and ordering for subplans)

would execute successfully no matter how it was decomposed and no matter when

its subplans were executed. Definition 10 says that all

possible executions will succeed for a consistent plan. This is very similar to the ![]() relation that will be defined in Section 4. We do not

include whether the plan will definitely not succeed in the summary

information because it requires an exponential computation to see whether

all conflicts in the subplans can be resolved. This computation can wait

to be done during planning after summary information is fully derived.

relation that will be defined in Section 4. We do not

include whether the plan will definitely not succeed in the summary

information because it requires an exponential computation to see whether

all conflicts in the subplans can be resolved. This computation can wait

to be done during planning after summary information is fully derived.

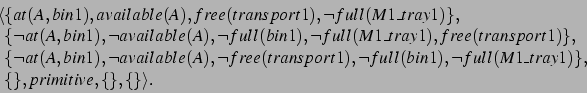

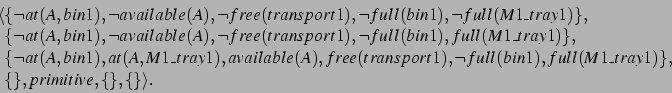

We show a subset of the summary conditions for

the production manager's top-level plan (of Figure 2) below.

Following each literal

are modal tags for ![]() and

and ![]() information. ``Mu'' is

information. ``Mu'' is

![]() ; ``Ma'' is

; ``Ma'' is ![]() ; ``F'' is

; ``F'' is ![]() ; ``L'' is

; ``L'' is ![]() ; ``S'' is

; ``S'' is

![]() ; and ``A'' is

; and ``A'' is ![]() .

.

Production manager's

The ![]() summary precondition is a

summary precondition is a ![]() condition

because the production manager may end up not using M1 if it chooses to use M2

instead to produce G.

condition

because the production manager may end up not using M1 if it chooses to use M2

instead to produce G. ![]() is a

is a ![]() summary

precondition because part A must be used at the beginning of execution

when it is transported to one of the machines.

Because the machines are needed sometime after the parts are transported, they are sometimes (and not first) conditions: they are needed at some point in time after the beginning of execution.

summary

precondition because part A must be used at the beginning of execution

when it is transported to one of the machines.

Because the machines are needed sometime after the parts are transported, they are sometimes (and not first) conditions: they are needed at some point in time after the beginning of execution.

Because the production manager may use M1 to produce G, ![]() is a summary

incondition of

is a summary

incondition of ![]() .

Having both

.

Having both ![]() and

and ![]() as inconditions

is consistent because they are

as inconditions

is consistent because they are ![]() conditions, implying that

they hold at different times during the plan's execution. In contrast, these

conditions would conflict if they were both

conditions, implying that

they hold at different times during the plan's execution. In contrast, these

conditions would conflict if they were both ![]() and

and ![]() (meaning that they must always hold throughout every possible execution of the plan).

(meaning that they must always hold throughout every possible execution of the plan).

The summary condition ![]() is a

is a ![]() postcondition of the top-level

plan because A will definitely be consumed by

postcondition of the top-level

plan because A will definitely be consumed by ![]() and is not

produced by some other plan in the decomposition of

and is not

produced by some other plan in the decomposition of

![]() . Even though

. Even though ![]() is an effect of

is an effect of

![]() , it is not an external postcondition of

, it is not an external postcondition of ![]() because it is undone by

because it is undone by ![]() , which consumes G to

make H.

, which consumes G to

make H. ![]() is a

is a ![]() summary postcondition because the

production manager releases H at the very end of execution.

summary postcondition because the

production manager releases H at the very end of execution.

![]() is not

is not ![]() because the manager finishes using M2

before moving H into storage.

because the manager finishes using M2

before moving H into storage.

Notice that ![]() is a

is a ![]() summary precondition. However,

no matter how the hierarchy is decomposed, M2 must be used to produce

H, so

summary precondition. However,

no matter how the hierarchy is decomposed, M2 must be used to produce

H, so ![]() must be established externally to the production

manager's plan. Because summary information is defined in terms of

the summary information of the immediate subplans, in the subplans of

must be established externally to the production

manager's plan. Because summary information is defined in terms of

the summary information of the immediate subplans, in the subplans of

![]() , we only see that

, we only see that ![]() has an

has an ![]() precondition and an

precondition and an ![]() postcondition that would

achieve the

postcondition that would

achieve the ![]() precondition of

precondition of ![]() .

This summary information does not tell us that the precondition of

.

This summary information does not tell us that the precondition of

![]() exists only when the postcondition exists, a necessary

condition to determine that the derived precondition of

exists only when the postcondition exists, a necessary

condition to determine that the derived precondition of ![]() is a

is a ![]() condition. Thus, it is

condition. Thus, it is ![]() . If we augmented summary

information with which subsets of conditions existed together, hunting

through combinations and temporal orderings of condition subsets among

subplans to derive summary conditions would basically be an

adaptation of an HTN planning algorithm, which summary information is

intended to improve. Instead, we derive summary information in

polynomial time and then use it to improve HTN planning exponentially

as we explain in Section 6.

This is the tradeoff we made at the beginning of this section in

defining summary conditions in terms of just the immediate subplans

instead of the entire hierarchy. Abstraction involves loss of

information, and this loss enables computational gains.

. If we augmented summary

information with which subsets of conditions existed together, hunting

through combinations and temporal orderings of condition subsets among

subplans to derive summary conditions would basically be an

adaptation of an HTN planning algorithm, which summary information is

intended to improve. Instead, we derive summary information in

polynomial time and then use it to improve HTN planning exponentially

as we explain in Section 6.

This is the tradeoff we made at the beginning of this section in

defining summary conditions in terms of just the immediate subplans

instead of the entire hierarchy. Abstraction involves loss of

information, and this loss enables computational gains.

In order to derive summary conditions according to their definitions, we need to be able to recognize achieve, clobber, and undo relationships based on summary conditions as we did for basic CHiP conditions. We give definitions and algorithms for these, which build on constructs and algorithms for reasoning about temporal relationships, described in Appendix A.

Achieving and clobbering are very similar, so we define them together.

Definition 11 states that plan ![]() must [achieve, clobber] summary

precondition

must [achieve, clobber] summary

precondition ![]() of

of ![]() if and only if for all executions of any

two plans,

if and only if for all executions of any

two plans, ![]() and

and ![]() , with the same summary information and

ordering constraints as

, with the same summary information and

ordering constraints as ![]() and

and ![]() , the execution of

, the execution of ![]() or

one of its subexecutions would [achieve, clobber] an external

precondition

or

one of its subexecutions would [achieve, clobber] an external

precondition ![]() of

of ![]() .

.

Achieving and clobbering in- and postconditions are defined the same as

Definition 11 but substituting ``in'' and ``post'' for ``pre'' and

removing the last line for inconditions. Additionally substituting

![]() for

for ![]() gives the definitions of may achieve

and clobber. Furthermore, the definitions of must/may-undo are

obtained by substituting ``post'' for ``pre'' and ``undo'' for

``achieve'' in Definition 11.

Note that, as mentioned in Section 2.5, achieving inconditions and

postconditions does not make sense for this formalism.

gives the definitions of may achieve

and clobber. Furthermore, the definitions of must/may-undo are

obtained by substituting ``post'' for ``pre'' and ``undo'' for

``achieve'' in Definition 11.

Note that, as mentioned in Section 2.5, achieving inconditions and

postconditions does not make sense for this formalism.

Algorithms for these interactions are given in

Figure 6 and Figure 7.

These algorithms build on others (detailed in Appendix B) that use interval point algebra to determine whether a plan must or

may assert a summary condition before, at, or during the time another

plan requires a summary condition to hold.

Similar to Definition 3 of must-achieve for CHiP conditions, Figure 6 says that ![]() achieves summary condition

achieves summary condition ![]() if it must asserts the condition before it must hold, and there are no other plans that may assert the condition or its negative in between.

The algorithm for may-achieve (in Figure 7) mainly differs in that

if it must asserts the condition before it must hold, and there are no other plans that may assert the condition or its negative in between.

The algorithm for may-achieve (in Figure 7) mainly differs in that ![]() may assert the condition beforehand, and there is no plan that must assert in between.

The undo algorithms are the same as those for achieve after swapping

may assert the condition beforehand, and there is no plan that must assert in between.

The undo algorithms are the same as those for achieve after swapping ![]() and

and ![]() in all

in all ![]() /

/![]() -

-![]() lines.

lines.

The complexity of determining must/may-clobber for inconditions and

postconditions is simply ![]() to check

to check ![]() conditions in

conditions in ![]() . If

the conditions are hashed, then the algorithm is constant time. For the

rest of the algorithm cases, the complexity of walking through the summary

conditions checking for

. If

the conditions are hashed, then the algorithm is constant time. For the

rest of the algorithm cases, the complexity of walking through the summary

conditions checking for ![]() and

and ![]() is

is ![]() for a maximum of

for a maximum of ![]() summary

conditions for each of

summary

conditions for each of ![]() plans represented in

plans represented in ![]() . In the

worst case, all summary conditions summarize the same propositional

variable, and all

. In the

worst case, all summary conditions summarize the same propositional

variable, and all ![]() conditions must be visited.

conditions must be visited.

Let's look at some examples of these relationships.

In Figure 8a, ![]() may-clobber

may-clobber ![]() =

= ![]() (M2)MaS

in the summary

preconditions of

(M2)MaS

in the summary

preconditions of ![]() because there is some history

where

because there is some history

where ![]() ends before

ends before ![]() starts, and

starts, and

![]() starts after

starts after ![]() starts.

In Figure 8b,

starts.

In Figure 8b, ![]() must-achieve

must-achieve ![]() =

= ![]() (H)MuF in the summary

preconditions of

(H)MuF in the summary

preconditions of ![]() . Here,

. Here, ![]() is

is

![]() (H)MuL in the summary postconditions of

(H)MuL in the summary postconditions of ![]() . In all

histories,

. In all

histories, ![]() attempts to assert

attempts to assert ![]() before

the

before

the ![]() requires

requires ![]() to be met, and there is no

other plan execution that attempts to assert a condition on

the availability of H.

to be met, and there is no

other plan execution that attempts to assert a condition on

the availability of H. ![]() does not

may-clobber

does not

may-clobber ![]() =

= ![]() (M2)MuF in the

summary preconditions of

(M2)MuF in the

summary preconditions of ![]() even though

even though ![]() asserts

asserts ![]() =

= ![]() (M2)MuL before

(M2)MuL before ![]() is required to be met. This is because

is required to be met. This is because

![]() must assert

must assert ![]() (M2)MuA between the time that

(M2)MuA between the time that ![]() asserts

asserts ![]() and when

and when ![]() is required.

Thus,

is required.

Thus, ![]() must-undo

must-undo ![]() 's summary postcondition.

Because

's summary postcondition.

Because ![]() cannot assert its postcondition

cannot assert its postcondition

![]() (M2)MuL before

(M2)MuL before ![]() requires

requires

![]() (M2)MuF,

(M2)MuF, ![]() must-clobber the summary

precondition.

must-clobber the summary

precondition.

|

Now that we have algorithms that determine interactions of abstract plans based on their summary conditions, we can create an algorithm that derives summary conditions according to their definitions in Section 3.2.

Figure 9 shows pseudocode for the algorithm.

The method for deriving the summary conditions of a plan ![]() is

recursive. First, summary information is derived for each of

is

recursive. First, summary information is derived for each of

![]() 's subplans. Then conditions are added based on

's subplans. Then conditions are added based on ![]() 's own conditions. Most of the rest of the algorithm

derives summary

conditions from those of

's own conditions. Most of the rest of the algorithm

derives summary

conditions from those of ![]() 's subplans.

Whether

's subplans.

Whether ![]() is

is ![]() depends on the consistency of its subplans and whether its own summary conditions and resource usages are in conflict. The braces '{' '}' used here have slightly different semantics than used before with the brackets. An expression {

depends on the consistency of its subplans and whether its own summary conditions and resource usages are in conflict. The braces '{' '}' used here have slightly different semantics than used before with the brackets. An expression {![]() ,

,![]() } can be interpreted simply as (

} can be interpreted simply as (![]() or

or ![]() , respectively).

, respectively).

Definitions and algorithms for temporal relationships such as ![]() -

-![]() and covers are in Appendix A.

When the algorithm adds or copies a condition to a set, only one condition can exist for any literal, so a condition's information may be overwritten if it has the same literal. In all cases,

and covers are in Appendix A.

When the algorithm adds or copies a condition to a set, only one condition can exist for any literal, so a condition's information may be overwritten if it has the same literal. In all cases, ![]() overwrites

overwrites ![]() ; and

; and ![]() ,

, ![]() , and

, and ![]() overwrite

overwrite ![]() ; but, not vice-versa.

Further, because it uses recursion, this procedure is assumed to work on plans whose expansion is finite.

; but, not vice-versa.

Further, because it uses recursion, this procedure is assumed to work on plans whose expansion is finite.

In this section, we

define a representation for capturing ranges of usage

both local to the task interval and the depleted usage lasting after

the end of the interval. Based on this we introduce a summarization

algorithm that captures in these ranges the uncertainty represented by

decomposition choices in ![]() plans and partial temporal orderings of

plans and partial temporal orderings of

![]() plan subtasks. This representation allows a coordinator or

planner to reason about the potential for conflicts for a set of tasks.

We will discuss this reasoning later in Section 4.2.

Although referred to as resources, these variables could be durations or

additive costs or rewards.

plan subtasks. This representation allows a coordinator or

planner to reason about the potential for conflicts for a set of tasks.

We will discuss this reasoning later in Section 4.2.

Although referred to as resources, these variables could be durations or

additive costs or rewards.

We start with a new example for simplicity that motivates our choice of representation. Consider the task of coordinating a collection of rovers as they explore the environment around a lander on Mars. This exploration takes the form of visiting different locations and making observations. Each traversal between locations follows established paths to minimize effort and risk. These paths combine to form a network like the one mapped out in Figure 10, where vertices denote distinguished locations, and edges denote allowed paths. Thinner edges are harder to traverse, and labeled points have associated observation goals. While some paths are over hard ground, others are over loose sand where traversal is harder since a rover can slip.

Figure 11 gives an example of an abstract task. Imagine a

rover that wants to make an early morning trip from point ![]() to point

to point

![]() on the example map. During this trip the sun slowly rises above

the horizon giving the rover the ability to progressively use soak rays tasks to provide more solar power (a non-consumable

resource3) to motors in the wheels. In addition to collecting photons,

the morning traverse moves the rover, and the resultant go tasks

require path dependent amounts of power. While a rover traveling from

point

on the example map. During this trip the sun slowly rises above

the horizon giving the rover the ability to progressively use soak rays tasks to provide more solar power (a non-consumable

resource3) to motors in the wheels. In addition to collecting photons,

the morning traverse moves the rover, and the resultant go tasks

require path dependent amounts of power. While a rover traveling from

point ![]() to point

to point ![]() can take any number of paths, the shortest

three involve following one, two, or three steps.

can take any number of paths, the shortest

three involve following one, two, or three steps.

A summarized resource usage consists of

ranges of potential resource usage amounts during

and after performing an abstract task, and we represent this

summary information for a plan ![]() on a resource

on a resource ![]() using the structure

using the structure

![]() where the resource's local usage occurs within

where the resource's local usage occurs within ![]() 's

execution, and the persistent usage represents the usage that lasts

after the execution terminates for consumable resources.

's

execution, and the persistent usage represents the usage that lasts

after the execution terminates for consumable resources.

The context for Definition 12 is the set of all

histories ![]() where the value of

where the value of ![]() is 0 in the initial state, and

is 0 in the initial state, and

![]() only contains the execution of

only contains the execution of ![]() and its subexecutions. Thus,

the

and its subexecutions. Thus,

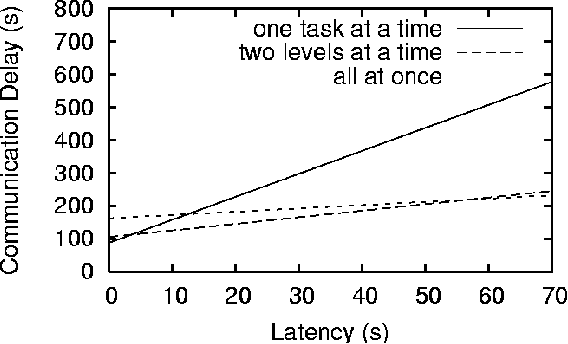

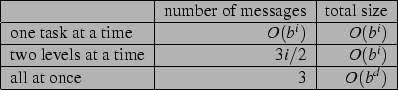

the ![]() term is the combined usage of