Overview

The goal of this project was to reconstruct color images from the Prokudin-Gorskii photo collection. There are three versions of each image, taken through green, blue, and red filters. Combining the illuminance levels of the three filtered versions reconstructs a fully colorized image.
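The reconstruction step can be sketched in Python with NumPy. The sketch assumes a layout the text does not spell out: each digitized plate holds the three exposures stacked vertically in equal thirds, with blue on top, then green, then red. `split_plate` and `stack_rgb` are hypothetical helper names, and the channels are assumed to be already aligned when they are stacked.

```python
import numpy as np


def split_plate(plate):
    """Split a stacked plate scan into its three filtered exposures.

    Assumes (not stated in the writeup) equal thirds ordered top to bottom
    as blue, green, red; any leftover rows at the bottom are ignored.
    """
    height = plate.shape[0] // 3
    blue = plate[:height]
    green = plate[height:2 * height]
    red = plate[2 * height:3 * height]
    return blue, green, red


def stack_rgb(red, green, blue):
    """Combine three aligned single-channel images into one H x W x 3 RGB image."""
    return np.dstack([red, green, blue])


# Usage on a synthetic 6 x 2 "plate": rows 0-1 blue, 2-3 green, 4-5 red.
plate = np.arange(12, dtype=float).reshape(6, 2)
b, g, r = split_plate(plate)
color = stack_rgb(r, g, b)
print(color.shape)  # (2, 2, 3)
```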

The results below were obtained by scoring candidate alignments with the sum of squared differences (SSD) and shifting each channel by the offset with the lowest score. Both small and large images were processed with a multiscale pyramid. The images were first matched coarsely at low resolution, and the estimated offset was then refined at progressively higher resolutions to obtain the final offset. This keeps the runtime very short, because the search window at each level is small (here, [-5, 5] pixels).
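The coarse-to-fine SSD search can be sketched as follows. This is an illustrative NumPy version, not the original code. `np.roll` plays the role of MATLAB's `circshift`. The 2 x 2 block average used for downsampling and the 32-pixel stopping size are assumptions, and `ssd`, `align_ssd`, `downsample`, and `align_pyramid` are hypothetical names.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences between two equally sized images."""
    return float(np.sum((a - b) ** 2))


def align_ssd(moving, reference, window=5, center=(0, 0)):
    """Try every shift within +/-window of `center` and return the (dy, dx)
    whose circular shift of `moving` gives the lowest SSD against `reference`."""
    best_shift, best_score = center, np.inf
    for dy in range(center[0] - window, center[0] + window + 1):
        for dx in range(center[1] - window, center[1] + window + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift


def downsample(img):
    """Halve the resolution by averaging 2 x 2 blocks (an odd last row/column is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def align_pyramid(moving, reference, window=5, min_size=32):
    """Coarse-to-fine search: estimate the shift at half resolution, double it,
    then refine it with a small +/-window search at the current resolution."""
    if min(reference.shape) <= min_size:
        return align_ssd(moving, reference, window)
    coarse = align_pyramid(downsample(moving), downsample(reference), window, min_size)
    guess = (2 * coarse[0], 2 * coarse[1])
    return align_ssd(moving, reference, window, center=guess)


# Usage: recover a known circular shift of a random test image.
rng = np.random.default_rng(0)
reference = rng.random((128, 128))
moving = np.roll(reference, (-12, 8), axis=(0, 1))
print(align_pyramid(moving, reference))  # (12, -8)
```

Each level tests only (2 * 5 + 1)^2 = 121 shifts. The coarse estimate is doubled at every level, so a pyramid whose base is L halvings below full resolution can reach offsets of about 5 * (2^(L+1) - 1) pixels. This is what keeps the search cheap, but it also gives the search a large effective range.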

Most of the images align well. The exceptions are 00757v.jpg and 00087u.tif, possibly because the pyramid's effective search window is large enough to admit a spurious match. The images were cropped by 10% so that the black borders and plate numbering would not affect the alignment. The green and red filtered images were each aligned to the blue filtered image using SSD. Offsets are listed with each image below.
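The border crop and the choice of blue as the reference channel can be sketched like this. Reading "10%" as 10% of the height and width removed from each side is an assumption. The crop is applied only when scoring, so the shifted channels keep their full size. The single-level search and the helper names `interior`, `best_offset`, and `align_to_blue` are hypothetical.

```python
import numpy as np


def interior(img, frac=0.1):
    """Remove `frac` of the height and width from every side so the dark plate
    border and the frame numbering do not enter the score (assumed crop rule)."""
    dy, dx = int(img.shape[0] * frac), int(img.shape[1] * frac)
    return img[dy:img.shape[0] - dy, dx:img.shape[1] - dx]


def best_offset(channel, blue, window=5, frac=0.1):
    """Return the (dy, dx) circular shift of `channel` that minimizes SSD against
    `blue`, comparing only the cropped interiors of the two images."""
    ref = interior(blue, frac)
    best, best_score = (0, 0), np.inf
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            shifted = interior(np.roll(channel, (dy, dx), axis=(0, 1)), frac)
            score = np.sum((shifted - ref) ** 2)
            if score < best_score:
                best, best_score = (dy, dx), score
    return best


def align_to_blue(blue, green, red, **kwargs):
    """Align green and red to the blue reference; return (green_offset, red_offset)."""
    return best_offset(green, blue, **kwargs), best_offset(red, blue, **kwargs)


# Usage: green and red are copies of blue displaced by known amounts.
rng = np.random.default_rng(1)
blue = rng.random((60, 60))
green = np.roll(blue, (-2, 3), axis=(0, 1))
red = np.roll(blue, (4, -1), axis=(0, 1))
print(align_to_blue(blue, green, red))  # ((2, -3), (-4, 1))
```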

To improve the poor results (e.g. 00757v.jpg), an alternate algorithm was implemented as an extension. It aligns on edges rather than overall illuminance, and it uses repeated iterations and cropped borders to reduce discrepancies at the image edges. The results of this extended algorithm are shown and explained below.

Results

These are the required images. The first ten are lower-resolution JPEG files, and the next ten were generated from higher-resolution TIFF images. For the TIFF results, JPEG versions are displayed below, each linked to the full TIFF image.

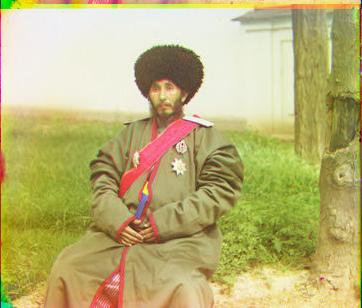

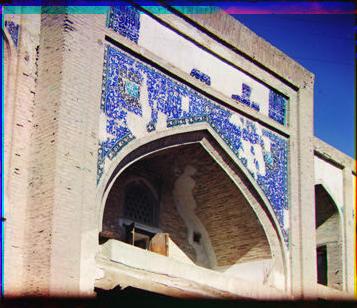

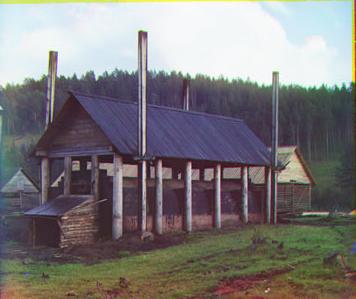

| Image | Green offset | Red offset |
|---|---|---|
| 00088v | (2, 3) | (4, 5) |
| 00106v | (4, 1) | (9, -1) |
| 00757v | (2, 3) | (-104, 67) |
| 00888v | (6, 0) | (12, 0) |
| 00889v | (2, 2) | (5, 3) |
| 00907v | (2, 0) | (6, 0) |
| 00911v | (1, -1) | (13, -1) |
| 001031v | (1, 1) | (4, 2) |
| 01880v | (6, 2) | (14, 4) |
| 00137v | (6, 5) | (11, 8) |

Selected Images

These images were not part of the given dataset but were selected from the image library. They were created using the extended algorithm (explained in the next section).

| Image | Green offset | Red offset |
|---|---|---|
| 00163v | (2, 3) | (4, 5) |
| 00398v | (5, 3) | (11, 4) |
| 00149v | (4, 2) | (9, 2) |
| 00125v | (6, 0) | (10, 0) |
| 00640v | (2, 0) | (10, 0) |
| 00458u | (40, 8) | (84, 28) |

Extensions

As seen above, the simple approach of using SSD on the images directly does not always give the best results. Therefore, an extended algorithm was implemented to provide better images.

To create better matches, an edge map was computed from each image and then fed through SSD. This makes the matching depend on the shapes of objects rather than on their brightness under a given color filter. The images were first convolved with a Gaussian to suppress noise that would otherwise create spurious edges. They were then processed with Canny edge detection. Canny was chosen because it is highly sensitive to weak edges. This mattered because illumination intensity varies considerably across the color-filtered images.
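A NumPy-only sketch of the edge map follows. It is a simplified stand-in for Canny, not Canny itself. It applies a separable Gaussian blur and then thresholds the gradient magnitude, omitting Canny's non-maximum suppression and hysteresis thresholding. The `sigma` and `threshold` defaults and the names `gaussian_blur` and `edge_map` are assumptions. In practice, a library routine such as scikit-image's `skimage.feature.canny` provides the full detector.

```python
import numpy as np


def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian blur: filter every row, then every column, with a
    normalized 1-D kernel of radius 3*sigma (edge-padded, output same size)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(float), radius, mode="edge")
    conv = lambda v: np.convolve(v, kernel, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)  # shape (H + 2r, W)
    return np.apply_along_axis(conv, 0, rows)    # shape (H, W)


def edge_map(img, sigma=1.0, threshold=0.2):
    """Boolean edge map: blur to suppress noise, then keep pixels whose gradient
    magnitude exceeds `threshold` times the image's maximum magnitude.
    (Simplified stand-in for Canny: no non-maximum suppression or hysteresis.)"""
    blurred = gaussian_blur(img, sigma)
    gy, gx = np.gradient(blurred)
    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    if peak == 0:
        return np.zeros(img.shape, dtype=bool)
    return magnitude > threshold * peak


# Usage: a vertical step from 0 to 1 at column 10 yields edges only around it.
step = np.zeros((20, 20))
step[:, 10:] = 1.0
edges = edge_map(step, sigma=1.0, threshold=0.5)
print(np.flatnonzero(edges[0]))  # columns 9 and 10
```

Converting the boolean map to float (for example `edge_map(channel).astype(float)`) lets it be scored with the same SSD search used for raw intensities.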

Another issue was poor matching at the image borders. Because the channels were shifted with circshift, pixels pushed off one side wrap around to the opposite side. To remove these invalid pixels, only the intersection of the overlapping images is kept in the output.
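The intersection crop can be sketched as below. The sketch assumes each shifted channel was moved by a circular shift of (dy, dx) rows and columns relative to the unshifted reference. A positive dy wraps the top dy rows, and a negative dy wraps the bottom |dy| rows; columns behave the same way. The valid region therefore starts at the largest shift and stops short of the far edge by the most negative one. `overlap_crop` is a hypothetical name.

```python
import numpy as np


def overlap_crop(rgb, shifts):
    """Crop an aligned H x W x 3 image to the pixels that no circular shift wrapped.

    shifts: the (dy, dx) circular shift applied to each shifted channel; the
    reference channel is unshifted, which the leading 0 entries represent.
    A positive dy wraps the top dy rows; a negative dy wraps the bottom |dy|
    rows. Columns behave the same way with dx.
    """
    h, w = rgb.shape[:2]
    dys = [0] + [dy for dy, _ in shifts]
    dxs = [0] + [dx for _, dx in shifts]
    top, bottom = max(dys), h + min(dys)
    left, right = max(dxs), w + min(dxs)
    return rgb[top:bottom, left:right]


# Usage: green rolled by (2, -1) and red by (-3, 4) relative to blue.
rgb = np.zeros((10, 12, 3))
print(overlap_crop(rgb, [(2, -1), (-3, 4)]).shape)  # (5, 7, 3)
```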

The results of the extended algorithm can be seen below, compared to the simple approach used above.

| Image | Before | After |
|---|---|---|
| 00757v | G:(2, 3), R:(-104, 67) | G:(2, 3), R:(3, 2) |
| 00087u | G:(48, 38), R:(111, 0) | G:(54, 37), R:(58, 19) |
| 00822u | G:(58, 25), R:(125, 33) | (cropped top border) |
| 00153v | G:(7, 2), R:(2, 0) | G:(7, 3), R:(14, 4) |
| 01112v | G:(0, 0), R:(-72, -7) | G:(0, 0), R:(5, 1) |
| 31421v | G:(8, 0), R:(13, 0) | (cropped top border) |

Yiling Tay - Carnegie Mellon University - Fall 2011