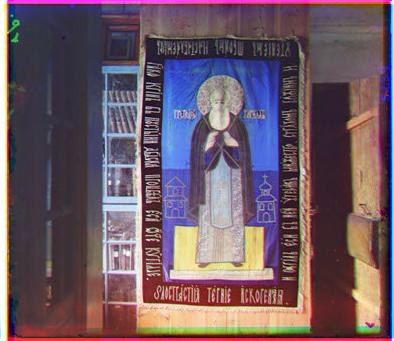

Images Of the Russian Empire

Computational Photography: Project 1 (and Project 1g)

Matt Mukerjee

(mukerjee at cs)

Overview:

In this project I seek to provide a software artifact capable of

providing an automated approximation of colored images from the

original Prokudin-Gorskii plates, whilst minimizing artifacts. To

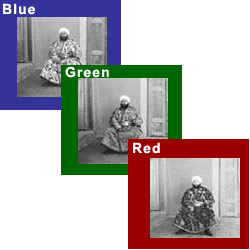

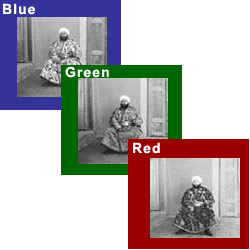

provide background, the original plates contain three separate

images (corresponding to the B, G, and R color channels) of various

scenes throughout the Russian empire in the very early 1900's.

Here is a small example:

In order to do this alignment process, I seek to find the alignment

that best satisfies some correctness metric across the color channels.

Initially, sum-of-square-difference (SSD) was used, but it didn't

provide good results. To provide much better results, normalized

cross-correlation (NCC) was instead implemented. Once a clear

correctness metric was defined, the question of performance came into

play. When operating on smaller images, a brute-force search over a

small window provides decent performance. In the context of this

project, a window of [-15, 15] was searched in both the x and y

direction (we assume a translation model is sufficient to model the

image discrepancies). This method will obviously not scale well as

image size (and proportionally, window size) increases. Thus, a much

smarter technique must be employed to reduce asymptotic runtime and

improve performance. Instead of brute-force searching for the

displacement vector, we simply create a Gaussian pyramid and estimate

the displacement vector over this multi-scale pyramid. We first find

the best displacement vector at the coarsest (smallest) scale, then

proceed to successively correct this estimation at each larger scale in

the pyramid. This leads to a clever observation: a window of size [-k,

k] over some image with scale 'n' covers the exact same part of the

image that a [-2k, 2k] window over that same image with scale '2n'.

This implies that moving towards smaller scales within the Gaussian

pyramid allows us to limit the window we need to search over. In this

vein, I choose to go up the pyramid 4 times, searching over a window of

size [-6, 6] in both x and y. This lead to seemingly correct alignment,

while taking a reasonable amount of time. Finally, one problem I ran

into was that the borders of the channel images tend to screw up the

similarity metric as they generally don't have a matching pixel in the

destination image. A simple solution to this is to sample a centered

i/2 x j/2 sized patch from each i x j sized image. This removes the

boarder entirely, eliminating this problem.

Results:

Small Images: (Green alignment, Red alignment)

(3,3) (4,5)

|

(4,1) (9, -1)

|

(6,6) (11,8)

|

(2,3) (5,5)

|

(6,1) (12,1)

|

(1,2) (4,3)

|

(2,0) (6,0)

|

(1, -1) (13, -1)

|

(1,1) (4,2)

|

(6,2) (14,4)

|

|

|

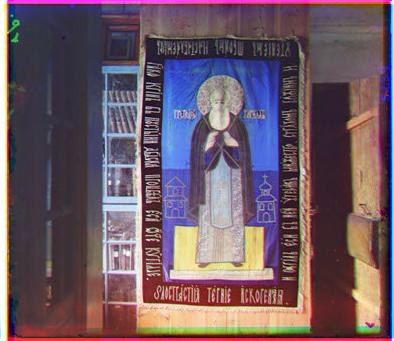

Large Images: (Green alignment, Red alignment)

(38,19) (91,37)

|

(48,40) (108,57)

|

(35,25) (52,37)

|

(15,6) (49,14)

|

(57,25) (125,34)

|

(16,3) (45,5)

|

(48,13) (113,19)

|

(-15,10) (11,19)

|

(42,32) (111,59)

|

(48,13) (113,19)

|

|

|

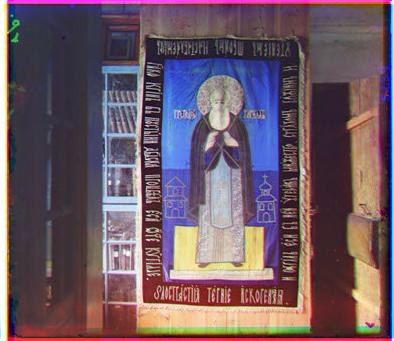

Additional Images: (Green alignment, Red alignment)

(72, -3) (143, -18)

|

(75, -7) (148, -28)

|