Unsupervised Learning of Visual Representations using Videos

People

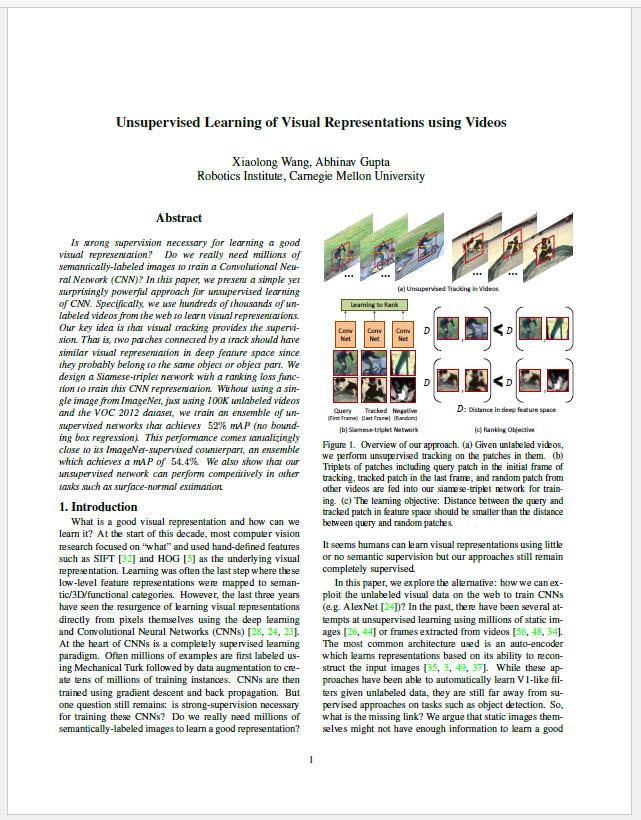

Paper

Xiaolong Wang and Abhinav Gupta. Unsupervised Learning of Visual Representations using Videos. Proc. of IEEE International Conference on Computer Vision (ICCV), 2015. [PDF] [code] [models] [mined_patches]

Tracking Video Samples

Acknowledgements

This work was partially supported by ONR MURI N000141010934 and NSF IIS 1320083. This material was also based on research partially sponsored by DARPA under agreement number FA8750-14-2-0244. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of DARPA or the U.S. Government. The authors would like to thank Yahoo! and Nvidia for the compute cluster and GPU donations, respectively.